Yao Dou

@yaooo01

PhD student @GeorgiaTech, previously @uwnlp, @allen_ai.

ID: 912061597413122048

https://yao-dou.github.io/ 24-09-2017 21:10:04

50 Tweets

211 Followers

287 Following

Upcoming tutorial at ACL 2024 on Automatic & Human-AI Interactive Text Generation with Yao Dou, Philippe Laban, and Claire Gardent. Stay tuned :) arxiv.org/pdf/2310.03878

Excited to be in Thailand ☀️😎 🌺🥥 to attend #ACL2024! If you run into my PhD students from the Georgia Tech NLP group - Yao Dou, Tarek Naous, and Jonathan Zheng - please say hi! 👋 Please also say hi to my other collaborators!

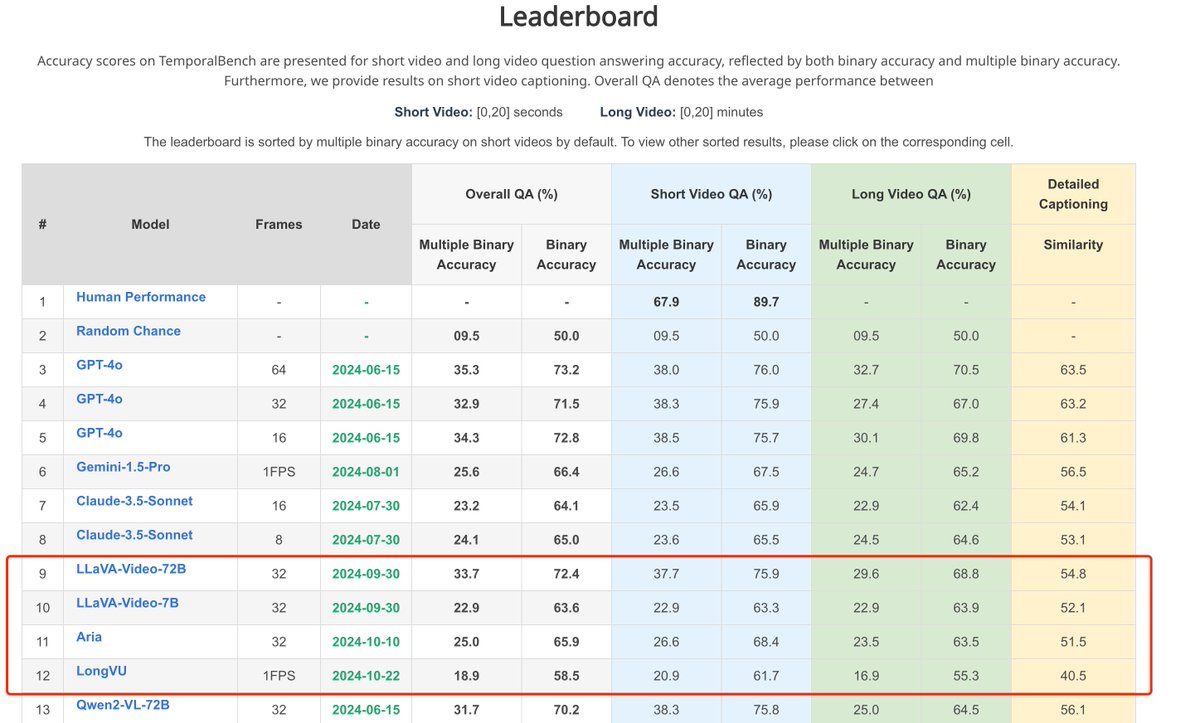

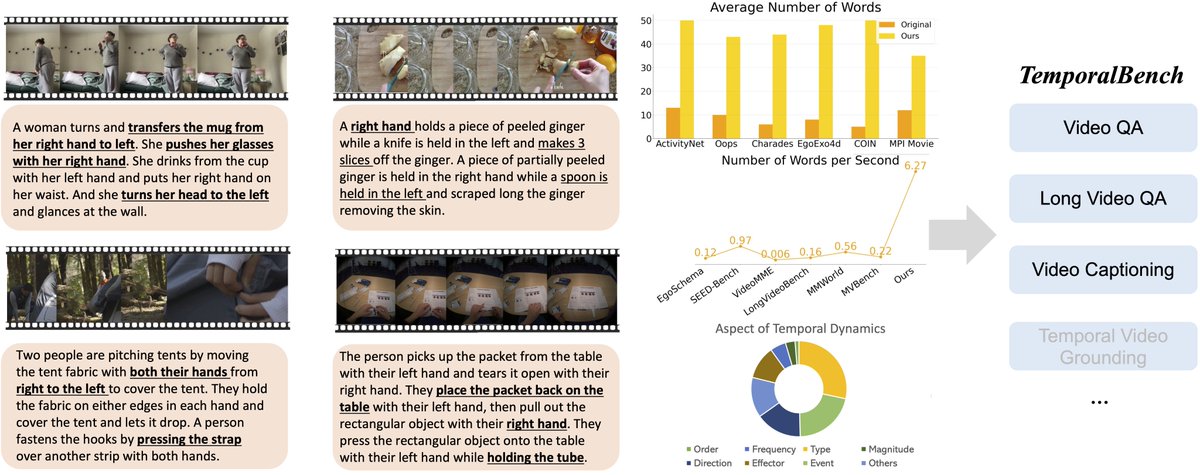

TemporalBench is now fully public! See how your video understanding model performs on TemporalBench before CVPR! 🤗 Dataset: huggingface.co/datasets/micro… 📎 Integrated into lmms-eval (systematic eval): github.com/EvolvingLMMs-L… (great work by Chunyuan Li and Yuanhan (John) Zhang) 📗 Our