Xinyi Wang @ ICLR

@xinyiwang98

Final year UC Santa Barbara CS PhD student trying to understand LLMs. She/her.

ID: 1329401770213183489

https://wangxinyilinda.github.io/

19-11-2020 12:31:59

120 Tweets

1.1K Followers

417 Following

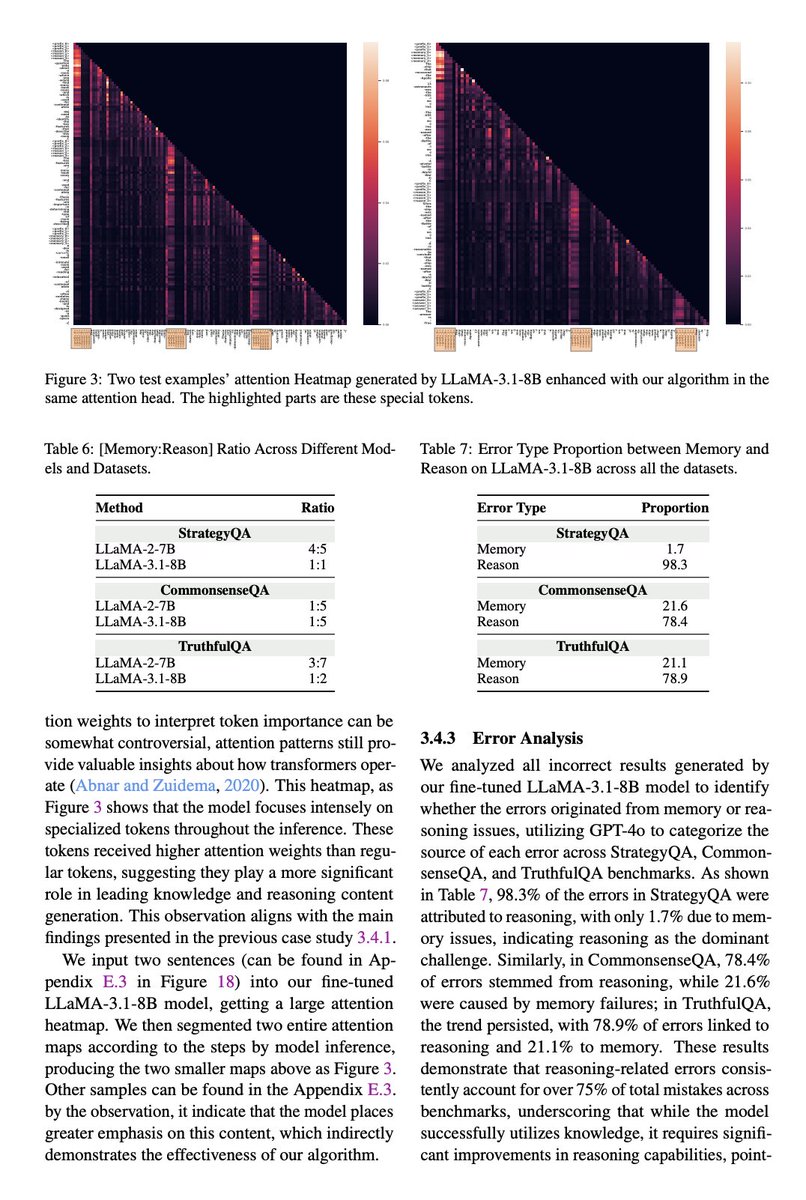

We have a few intern positions open in our ML team @ MSR Montreal. Come work with Marc-Alexandre Côté, Minseon Kim, Lucas Caccia, Matheus Pereira, and Eric Xingdi Yuan on reasoning, interactive envs/coding, and LLM modularization. 🤯 Matheus Pereira and I will also be at #NeurIPS2024, so we can chat about this there.

🙌 We are calling for submissions and recruiting reviewers for the Open Science for Foundation Models (SCI-FM) workshop at ICLR 2025! Submit your paper: openreview.net/group?id=ICLR.… (deadline: Feb 13). Register as a reviewer: forms.office.com/e/SdYw5U75U3 (review submission deadline: Feb 28).