Xingyu Fu

@xingyufu2

PhD student @Penn @cogcomp. | Focused on Vision+Language | Previous: @MSFTResearch @AmazonScience B.S. @UofIllinois | ⛳️😺

ID: 1305996908264075270

https://zeyofu.github.io/ 15-09-2020 22:28:30

116 Tweets

879 Followers

514 Following

😌 Been wanting to post since March but waited for the graduation photo… Thrilled to finally share that I’ll be joining Princeton University as a postdoc at Princeton PLI this August! Endless thanks to my incredible advisors and mentors from Penn, UW, Cornell, NYU, UCSB, USC,

Research with amazing collaborators Jize Jiang, Meitang Li, and Jingcheng Yang, guided by great advisors and supported by the generous help of talented researchers Bowen Jin, Xingyu Fu ✈️ ICML25, and many open-source contributors (easyr1, verl, vllm, etc.).

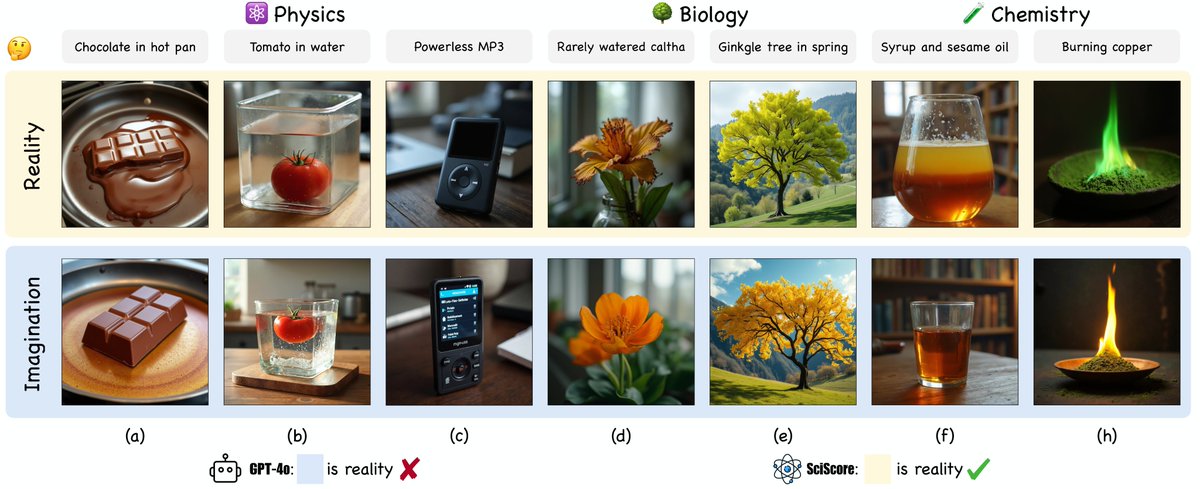

Jialuo Li (@jialuoli1007): 🚀 Introducing Science-T2I - Towards bridging the gap between AI imagination and scientific reality in image generation! [CVPR 2025]

📜 Paper: arxiv.org/abs/2504.13129

🌐 Project: jialuo-li.github.io/Science-T2I-Web

💻 Code: github.com/Jialuo-Li/Scie…

🤗 Dataset: huggingface.co/collections/Ji…

🔍

![Jialuo Li (@jialuoli1007) on Twitter photo](https://pbs.twimg.com/media/GpHIUILa4AIUXTq.jpg)