William Wang

@WilliamWangNLP

UCSB NLP Lab + ML Center. https://t.co/6TOnqbk6YT https://t.co/KJYhnav3Et Mellichamp Chair Prof. at UCSB CS. PhD @ CMU SCS. Areas: #NLProc, Machine Learning, AI.

ID:503452360

http://www.cs.ucsb.edu/~william

25-02-2012 19:40:12

2.2K Tweets

13.9K Followers

716 Following

open.spotify.com/show/1zpZxyZOQ…

Can off-the-shelf large language models help translate low-resource and endangered languages despite not seeing these languages in their training data? Maybe! I spoke to Kexun Zhang about LingoLLM, a workflow and pipeline that helps upgrade the language…

Call me biased, but Wenhu Chen, Huan Sun (OSU), and Yu Su are doing some of the most impressive research in generative AI these days.

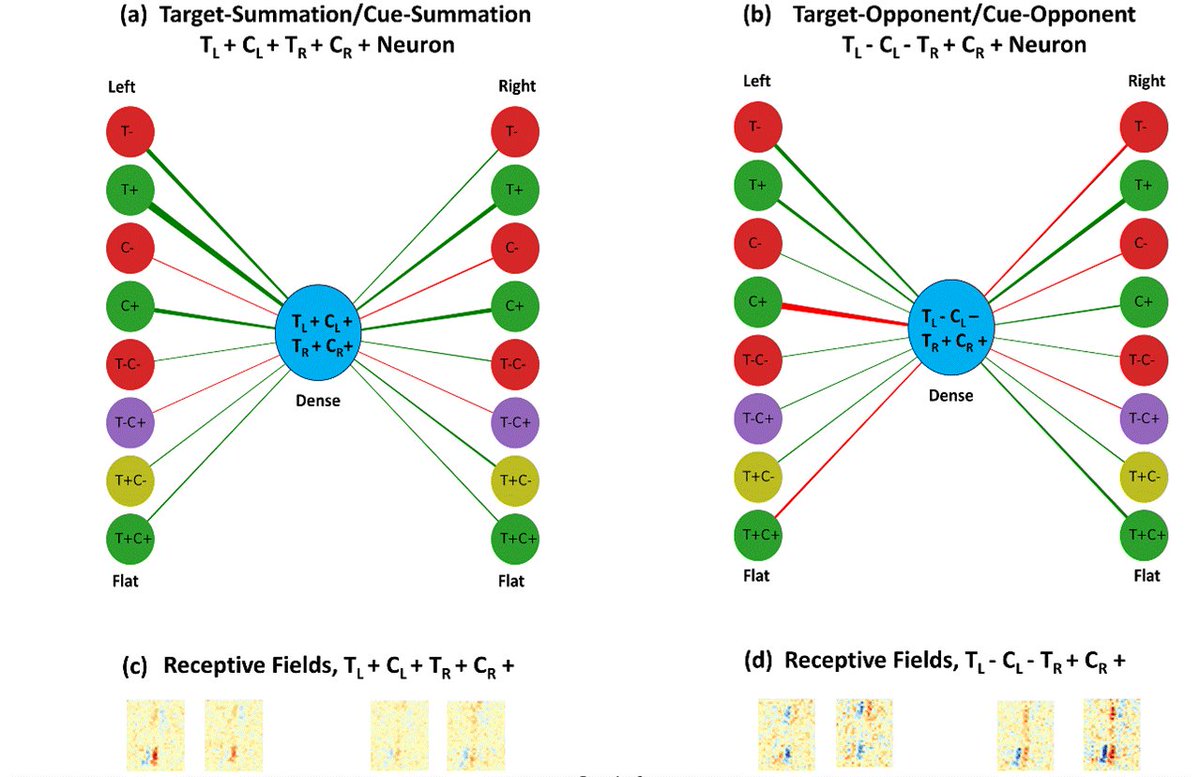

New preprint analyzing 1.8 million neurons of CNNs that show emergent behavioral and neuronal signatures of covert attention without any explicit attention mechanism. With Sudhanshu Srivastava and William Wang: biorxiv.org/content/10.110…

Why register for #NeurIPS2024 now to apply for a visa? If your visa is denied, you can apply for a full refund of your registration: neurips.cc/FAQ/Cancellati…

When LLMs make mistakes, can we build a model to pinpoint the errors and indicate their severity and error type? Can we incorporate this fine-grained information to improve the LLM? We introduce LLMRefine [NAACL 2024], a simulated annealing method to revise LLM output at inference. Google AI, UCSB NLP Group

![Wenda Xu (@WendaXu2) on Twitter, 2024-04-02 18:39:14](https://pbs.twimg.com/media/GKLjXF9XQAEizzm.jpg)