Fan Vincent Mo

@vincentmo6

Senior Researcher at @Huawei; alumnus of @ImperialCollege and @ImperialSysAL; working on safety of language models and privacy-preserving machine learning.

ID: 799560089963114496

http://mofanv.github.io 18-11-2016 10:29:15

109 Tweets

157 Followers

258 Following

Due to several requests, we are extending the deadline for MAISP (at ACM MobiSys) to 14/5. If you work on security/privacy of ML on mobile/edge/network devices, check it out: maisp.gitlab.io Ilias Leontiadis, Kleomenis Katevas, Cristian Canton. Great keynotes/program! concordia-h2020.eu ACCORDION Project H2020

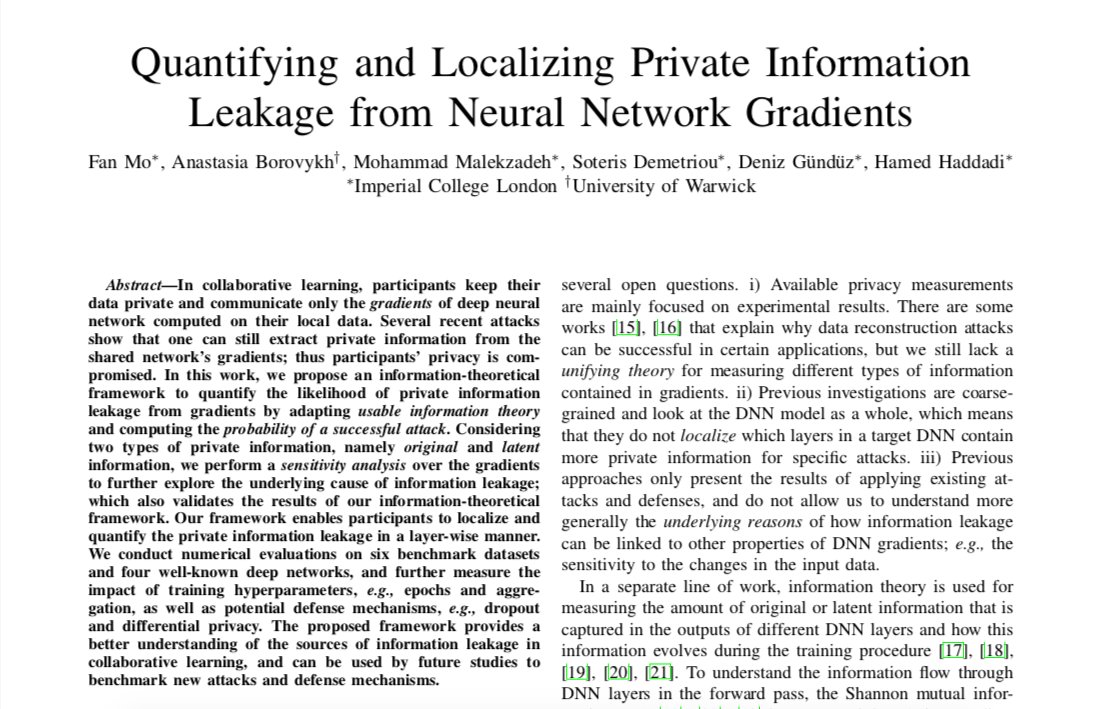

If you work on privacy-preserving ML, and especially Federated Learning, check out our paper and code, which we made public ahead of our ACM MobiSys 2021 presentation! Powered by: Telefónica Scientific Research, concordia-h2020.eu, ACCORDION Project H2020, PIMCity Project EU H2020 #dataprivacy #CyberSecurity

ACM SIGMOBILE MobiSys 2021 has started. Heartiest congratulations to the authors of this year's two best paper awards. Well done! 🍷 Now, on to the first keynote by Shree K. Nayar (Columbia) on "Future Cameras: Redefining the Image." Nice start 😀

I'm very excited and really honored to receive the best paper award. Massive thanks to all the authors and my groups/teams: Imperial SysAL, Telefónica Scientific Research

I should not trust my Federated Learning provider. But I should still trust my coauthors Fan Vincent Mo, Kleomenis Katevas, Hamed, @_EduardMarin_ & Diego Perino! It feels great to win the best paper award at ACM MobiSys for PPFL, our privacy-preserving FL system! Telefónica Scientific Research concordia-h2020.eu ACCORDION Project H2020

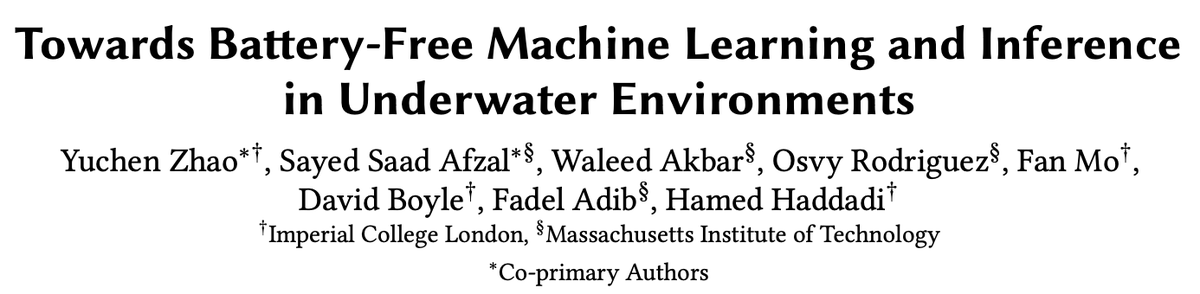

Excited about our upcoming work on "battery-free machine learning for the ocean", just accepted to ACM HotMobile 2022: a cross-pond collaboration between our groups, MIT Signal Kinetics Group and Imperial SysAL. Stay tuned!

Super excited to start my internship at Nokia Bell Labs Cambridge today! I will be working on device intelligence in Akhil Mathur and Fahim Kawsar's team. (meanwhile, I have been using my Nokia phone for 15 years 😃)

I hit a bug in the Attention formula that’s been overlooked for 8+ years. All Transformer models (GPT, LLaMA, etc.) are affected. Researchers isolated the bug last month, but they missed a simple solution… Why LLM designers should stop using Softmax 👇 evanmiller.org/attention-is-o…