Varun Chandrasekaran

@VarunChandrase3

Opinions are my own. Professing @ECEILLINOIS. Alumnus: @MSFTResearch @WisconsinCS, @nyuniversity. Interested in *most* things S&P. he/him

ID:1037032402403708929

http://pages.cs.wisc.edu/~chandrasekaran/ 04-09-2018 17:39:26

2.0K Tweets

828 Followers

350 Following

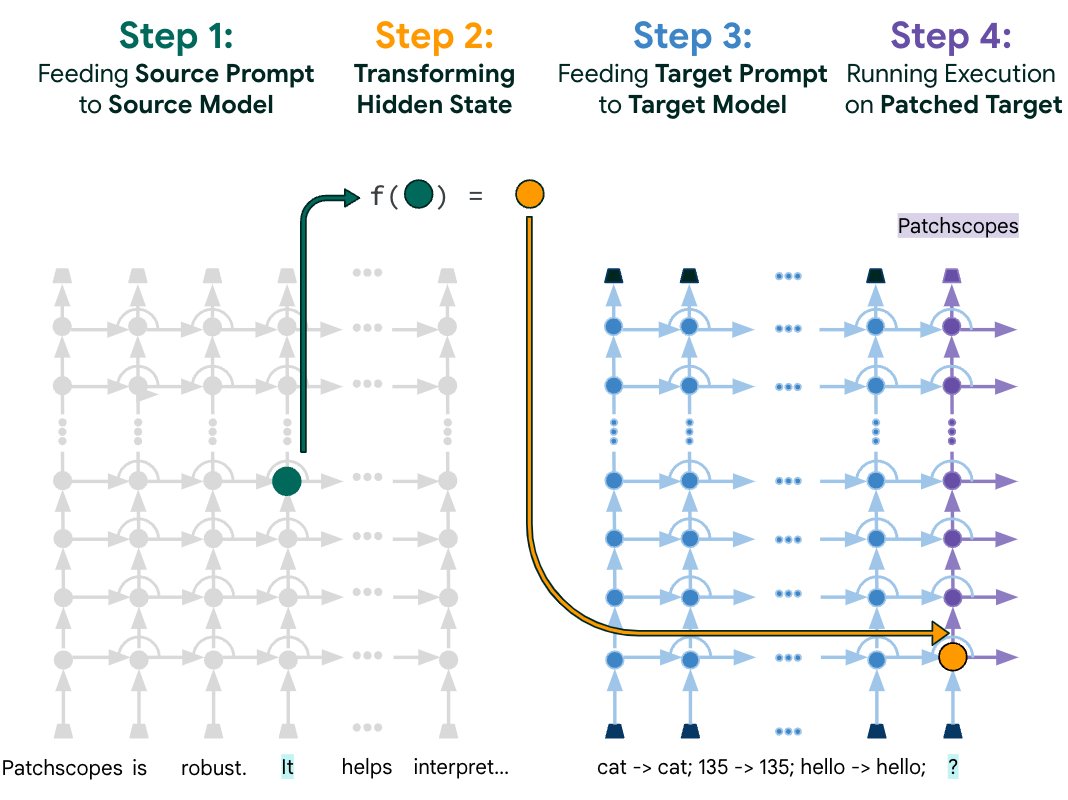

Should we trust LLM evaluations on publicly available benchmarks?🤔

Our latest work studies the overfitting of few-shot learning with GPT-4.

with Harsha Nori, Vanessa Rodrigues, Besmira Nushi 💙💛, and Rich Caruana

Paper: arxiv.org/abs/2404.06209

More details👇 [1/N]

![Sebastian Bordt (@s_bordt) on Twitter, photo, 2024-04-19 15:50:21](https://pbs.twimg.com/media/GLifyinWUAAUa63.png)

Lujo (Lujo Bauer) and I are seeking nominations for service on the program committee for USENIX Security '25. You may nominate yourself or someone else by Friday, May 24, 2024: forms.gle/VAWFhYzuBQo6kP….

The new SaTML PC chairs, Konrad Rieck 🌈 and Somesh Jha, are looking for a general chair and venue for the 2025 conference.

If you're interested in hosting the conference in April 2025 (the exact date/month is flexible), submit a bid here:

tinyurl.com/hostsatml

Soft deadline: May 15 2024

The first SaTML Conference tutorial, by Yves-A. de Montjoye on detecting copyrighted material in LLMs, is now starting!

'Now, Later, and Lasting: 10 Priorities for AI Research, Policy, and Practice,' forthcoming in CACM, with Vincent Conitzer, Sheila McIlraith, and Peter Stone

#AI100 Communications of the ACM, Association for Computing Machinery, Stanford HAI, Partnership on AI, White House Office of Science & Technology Policy, Carnegie Mellon University, University of Toronto, CIFAR. arxiv.org/abs/2404.04750