UKP Lab

@UKPLab

The Ubiquitous Knowledge Processing Lab researches Natural Language Processing, Text Mining, eLearning, and Digital Humanities · @CS_TUDarmstadt · @TUDarmstadt

17-06-2014 09:30:24


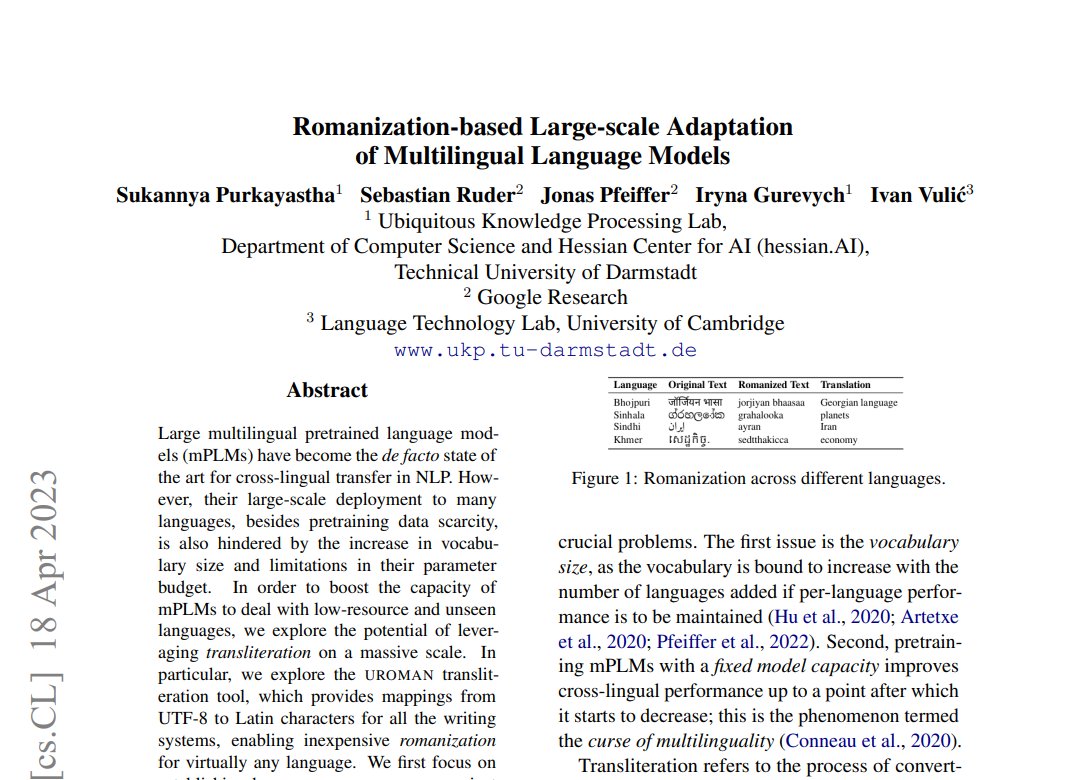

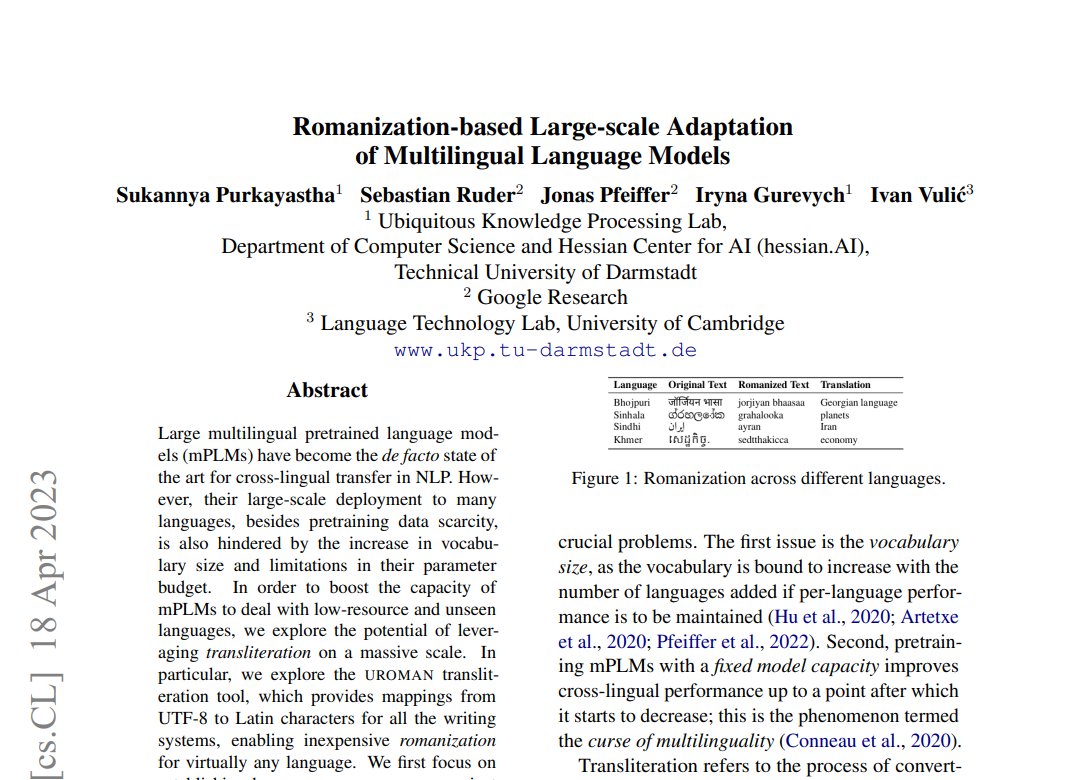

Large multilingual pretrained language models (mPLMs) are the gold standard for cross-lingual transfer. But deploying them to many languages faces challenges – like pretraining data scarcity, vocabulary size, and parameter limitations. (2/🧵) #NLProcessing #Multilingual #EMNLP2023

🤔 Breaking Down the Challenges 🌐

Vocabulary size and parameter budget are real roadblocks to deploying mPLMs across diverse languages.

The key? 🗝️ Transliteration on a massive scale, using UROMAN (Hermjakob et al., 2018), a massive transliteration library! (3/🧵) #EMNLP2023

🔄 Data and Parameter Efficiency: The Magic Behind Adaptation 🧙‍♂️

How do we adapt mPLMs to romanized and non-romanized corpora of 14 low-resource languages? We explore a plethora of strategies – data- and parameter-efficient ones! (5/🧵) #EMNLP2023

💡 Results Are In: UROMAN Shines in the Toughest Scenarios! 🌟

UROMAN-based transliteration offers strong performance, especially in the most challenging setups: languages with unseen scripts and limited training data. Discover how this tool outshines the competition! (6/🧵) #EMNLP2023

🪄 The Tokenizer Twist: Outperforming Non-Transliteration Methods 🔄

But wait, there's more! An improved tokenizer based on romanized data can even outperform non-transliteration methods in most languages. (7/🧵) #EMNLP2023

You can find our paper here:

📃arxiv.org/abs/2304.08865

Consider following our authors Sukannya Purkayastha (সুকন্যা পুরকায়স্থ), Sebastian Ruder (@GoogleAI), Jonas Pfeiffer (@GoogleDeepMind), Iryna Gurevych, and Ivan Vulić (@CambridgeLTL) (9/🧵)

See you in 🇸🇬! #EMNLP2023