TheStage AI

@thestageai

A full-stack AI platform 👽 Trusted voice in AI, we grindin', no sleep ✨

ID: 1655522257555320832

https://www.thestage.ai/ 08-05-2023 10:38:05

43 Tweets

392 Followers

24 Following

🥐 Bon appétit, developers. New Mistral AI models for self-hosting, accelerated by TheStage AI:
- New LLM: Mistral Small 24B
- New VLM: Mistral Small 3.1 24B
- Achieves speeds up to 90 tok/s on a single H100!
- Available in our standard 4 tiers: S, M, L, XL
Models follow

Bonjour, Paris 🇫🇷 Just wrapped 2 amazing days at @NVIDIA #GTCParis at Viva Technology — AI infra, agentic systems, and robots walking around. Great convos with ElevenLabs, @mistralai, Nebius, Recraft & more. Still in town — DM us if you wanna talk AI (IRL in Paris ☕🥐)

▚▞▚▞ DATA LOG: AI EUROPE ▚▞▚▞
For years, AI talk was all Silicon Valley. After @NVIDIA #GTCParis, one thing became clear: Europe’s AI ecosystem has already kicked into high gear.
🇫🇷 Mistral AI’s dropping open weights that actually run.
🇩🇪 Aleph Alpha building native

⌁ EUROPE SIGNAL: ACTIVE ⌁
↳ Want to accelerate your model’s inference?
↳ These guys sure do.
✦ Berlin: mapped next steps with our investors Christophe Maire and Lukas Erbguth of Atlantic Labs.
✦ Paris: NVIDIA GTC showed us what’s possible.
✦ Germany: more investor talks

Meet Elastic MusicGen Large, our optimized fork of AI at Meta's MusicGen, powered by ANNA (TheStage AI’s Automated Neural Network Accelerator): huggingface.co/TheStageAI/Ela… Ye used AI for vocals on "Bully," calling it the "next Auto-Tune." He switched up later, but tracks

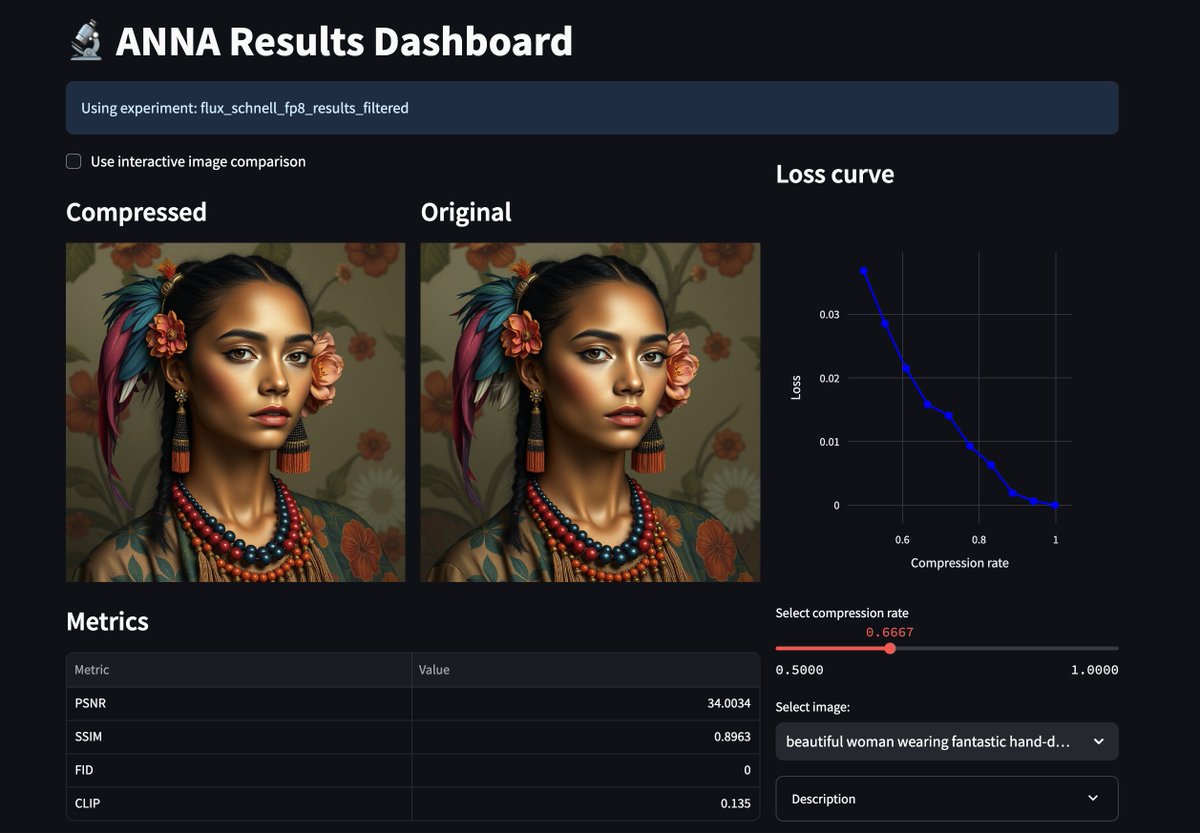

🥗 What if you could generate 10,000+ AI images for $1, each in just 1.2 seconds? We made it happen: 2.4× faster than most RTX 4090 pipelines, at a fraction of the cost. Check it out here: app.thestage.ai/models/FLUX.1-… ⟿ How? We tuned Black Forest Labs' FLUX.1 [schnell] model with our ANNA
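The headline numbers can be sanity-checked with simple arithmetic. This is a minimal sketch that assumes images are produced one after another on a single worker and that "2.4× faster" refers to per-image latency; neither assumption is stated in the tweet, and the helper names are hypothetical.

```python
# Back-of-the-envelope checks for the quoted figures:
# $1 per 10,000 images, ~1.2 s per image, 2.4x faster than a baseline.
# Assumption (not from the tweet): one worker, images generated sequentially,
# and "faster" means lower per-image latency.

def cost_per_image(total_cost_usd: float, num_images: int) -> float:
    """Average cost of a single image in USD."""
    return total_cost_usd / num_images

def sequential_hours(num_images: int, seconds_per_image: float) -> float:
    """Wall-clock hours if every image is generated one after another."""
    return num_images * seconds_per_image / 3600.0

def implied_baseline_seconds(seconds_per_image: float, speedup: float) -> float:
    """Per-image latency a baseline would need for the stated speedup to hold."""
    return seconds_per_image * speedup

if __name__ == "__main__":
    print(f"cost per image: ${cost_per_image(1.0, 10_000):.4f}")
    print(f"10k images on one worker: {sequential_hours(10_000, 1.2):.2f} h")
    print(f"implied baseline: {implied_baseline_seconds(1.2, 2.4):.2f} s/image")
```

Under these assumptions, $1 per 10k images works out to a hundredth of a cent per image, and a 2.4× speedup over a 1.2 s/image pipeline implies a baseline of roughly 2.9 s/image.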

Our research team took AI at Meta's LLaMA-8B, quantized it with QLIP using post-training int8, applied SmoothQuant, and used predefined, compiler-compatible NVIDIA configs. Why do this? Up to 2× smaller weights and 3.6× faster inference on one GPU. Try it with our simple Jupyter Notebook.
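For readers new to SmoothQuant, here is a minimal plain-Python sketch of the general technique: migrate activation outliers into the weights with per-input-channel scales, then apply symmetric int8 post-training quantization to both operands. It is not the QLIP API and not TheStage AI's pipeline; the function names, per-tensor scaling, and α = 0.5 are illustrative assumptions. Storing weights as int8 instead of fp16 halves weight memory, which is consistent with an "up to 2×" figure if the baseline is fp16.

```python
# SmoothQuant-style smoothing + symmetric int8 PTQ for a tiny linear layer Y = X @ W.
# Illustrative only: not the QLIP API. In practice the 1/s factor is folded into
# the preceding layer (e.g. a LayerNorm) offline instead of applied at runtime.
# X: activations [tokens][in_features]; W: weights [in_features][out_features].

def smooth_scales(X, W, alpha=0.5):
    """Per-input-channel scales s_j = max|X_j|^alpha / max|W_j|^(1 - alpha)."""
    scales = []
    for j in range(len(W)):
        act_max = max(max(abs(row[j]) for row in X), 1e-8)  # guard zero channels
        w_max = max(max(abs(w) for w in W[j]), 1e-8)
        scales.append(act_max ** alpha / w_max ** (1 - alpha))
    return scales

def apply_smoothing(X, W, scales):
    """X' = X / s per column, W' = s * W per row, so X' @ W' == X @ W."""
    Xs = [[x / s for x, s in zip(row, scales)] for row in X]
    Ws = [[w * s for w in row] for row, s in zip(W, scales)]
    return Xs, Ws

def quantize_int8(values):
    """Symmetric per-tensor int8: q = clamp(round(v / scale)), scale = max|v| / 127."""
    scale = max(max(abs(v) for row in values for v in row), 1e-8) / 127.0
    q = [[max(-127, min(127, round(v / scale))) for v in row] for row in values]
    return q, scale

def matmul(A, B):
    """Plain nested-list matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

if __name__ == "__main__":
    X = [[0.1, 8.0, -0.3], [0.2, -6.0, 0.4]]  # input channel 1 is an activation outlier
    W = [[0.5, -0.2], [0.01, 0.03], [-0.4, 0.6]]
    Xs, Ws = apply_smoothing(X, W, smooth_scales(X, W))
    qX, sx = quantize_int8(Xs)
    qW, sw = quantize_int8(Ws)
    # Integer matmul, then dequantize with the product of both scales.
    approx = [[v * sx * sw for v in row] for row in matmul(qX, qW)]
    print("fp32:", matmul(X, W))
    print("int8:", approx)
```

Smoothing leaves the exact product unchanged; its purpose is to shrink the activation outlier so that a single int8 scale wastes less resolution on it.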

Self-hosted text-to-image on H100 with TheStage AI Elastic Models, accelerated from Black Forest Labs' FLUX.1-schnell. Our fastest model, S, generates a high-quality image in 0.5 s. Precompiled and ready to deploy, with minimal cold start. Tutorial + access token inside if you want to try.

Imagine paying $30 for 10k images when Salad Cloud + ANNA does it for $1 💀
- FLUX.1-schnell: ~1.2 s/image, high-quality output
- ANNA auto-tunes models to balance speed and quality
- OpenAI-compatible API, fully self-hosted
A quick guide shows how to run your own endpoint
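An OpenAI-compatible API means standard OpenAI-style image requests should work against the self-hosted server. This is a minimal standard-library sketch that assumes, hypothetically, a server at `http://localhost:8000` exposing the usual `/v1/images/generations` route and serving the model as `FLUX.1-schnell`; the real URL, model name, and token come from the quick guide.

```python
# Build a request for a self-hosted, OpenAI-compatible image endpoint.
# Hypothetical values: BASE_URL, API_KEY, and the served model name are
# placeholders, not taken from TheStage AI's guide.
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # hypothetical self-hosted endpoint
API_KEY = "YOUR_TOKEN"              # placeholder access token

def build_image_request(prompt: str, model: str = "FLUX.1-schnell",
                        size: str = "1024x1024", n: int = 1) -> urllib.request.Request:
    """Return a POST request with an OpenAI-style JSON body for image generation."""
    body = {"model": model, "prompt": prompt, "n": n, "size": size}
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/images/generations",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )

if __name__ == "__main__":
    req = build_image_request("a croissant on a cafe table, morning light")
    print(req.get_method(), req.full_url)
    # Sending it requires a running server:
    # with urllib.request.urlopen(req, timeout=60) as resp:
    #     print(json.loads(resp.read()))
```

Building the `Request` separately from sending it keeps the example runnable without a live server; any OpenAI-compatible client library could be pointed at the same base URL instead.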

How do you measure the quality of text-to-image models? Our research team at TheStage AI put together a comprehensive guide to checking perceptual quality, sharpness, color, prompt alignment, and more. All the tricky image-quality questions researchers usually ask are covered here ↓
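As one concrete example of a color check, the Hasler and Süsstrunk (2003) colorfulness score combines the spread and the mean of two opponent-color channels. This is an illustrative choice, not necessarily a metric from the guide; pixels are assumed to be 8-bit (R, G, B) tuples.

```python
# Hasler & Süsstrunk colorfulness: M = sigma_rgyb + 0.3 * mu_rgyb.
# Illustrative metric choice; pixels are (R, G, B) tuples with values in 0..255.
import math

def colorfulness(pixels):
    rg = [r - g for r, g, b in pixels]              # red-green opponent channel
    yb = [0.5 * (r + g) - b for r, g, b in pixels]  # yellow-blue opponent channel

    def mean_std(xs):
        mu = sum(xs) / len(xs)
        var = sum((x - mu) ** 2 for x in xs) / len(xs)  # population variance
        return mu, math.sqrt(var)

    mu_rg, sd_rg = mean_std(rg)
    mu_yb, sd_yb = mean_std(yb)
    sigma = math.sqrt(sd_rg ** 2 + sd_yb ** 2)
    mu = math.sqrt(mu_rg ** 2 + mu_yb ** 2)
    return sigma + 0.3 * mu

if __name__ == "__main__":
    gray = [(128, 128, 128)] * 4
    vivid = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 0)]
    print(colorfulness(gray), colorfulness(vivid))
```

A neutral gray patch scores zero, while saturated, varied colors score high, so the value can be compared between an original and an accelerated model on the same prompts.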

Validation is a key step when compressing or accelerating models: it shows whether the network still performs well. Our research team at TheStage AI shared evaluation methods for sharpness, tone, color, object placement, and more
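A common no-reference proxy for sharpness is the variance of the Laplacian: blurrier images have weaker second-derivative responses, so the variance drops. This minimal sketch assumes a grayscale image given as a 2D list and skips border pixels; it is illustrative, not necessarily the method the team describes.

```python
# Sharpness proxy: variance of the 4-neighbour Laplacian over interior pixels.
# Assumption: `img` is a 2D list of grayscale intensities, at least 3x3.

def laplacian_variance(img):
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]]
            lap = (img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

if __name__ == "__main__":
    checker = [[255 if (x + y) % 2 else 0 for x in range(5)] for y in range(5)]
    ramp = [[10 * x for x in range(5)] for _ in range(5)]  # smooth linear gradient
    print(laplacian_variance(checker), laplacian_variance(ramp))
```

Because the score depends on image content, it is most meaningful as a relative comparison, e.g. the same prompt and seed rendered by the original and the compressed model.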