Teddi Worledge

@teddiworledge

(she/her) Computer Science PhD Student @Stanford. Formerly @Berkeley.

ID: 1720864110483853312

04-11-2023 18:03:39

21 Tweets

103 Followers

108 Following

I’m fighting… against vague notions of LLM attributions. 😤 Check out our paper (w. Teddi Worledge, Nicole, Caleb and Carlos) here: arxiv.org/abs/2311.12233

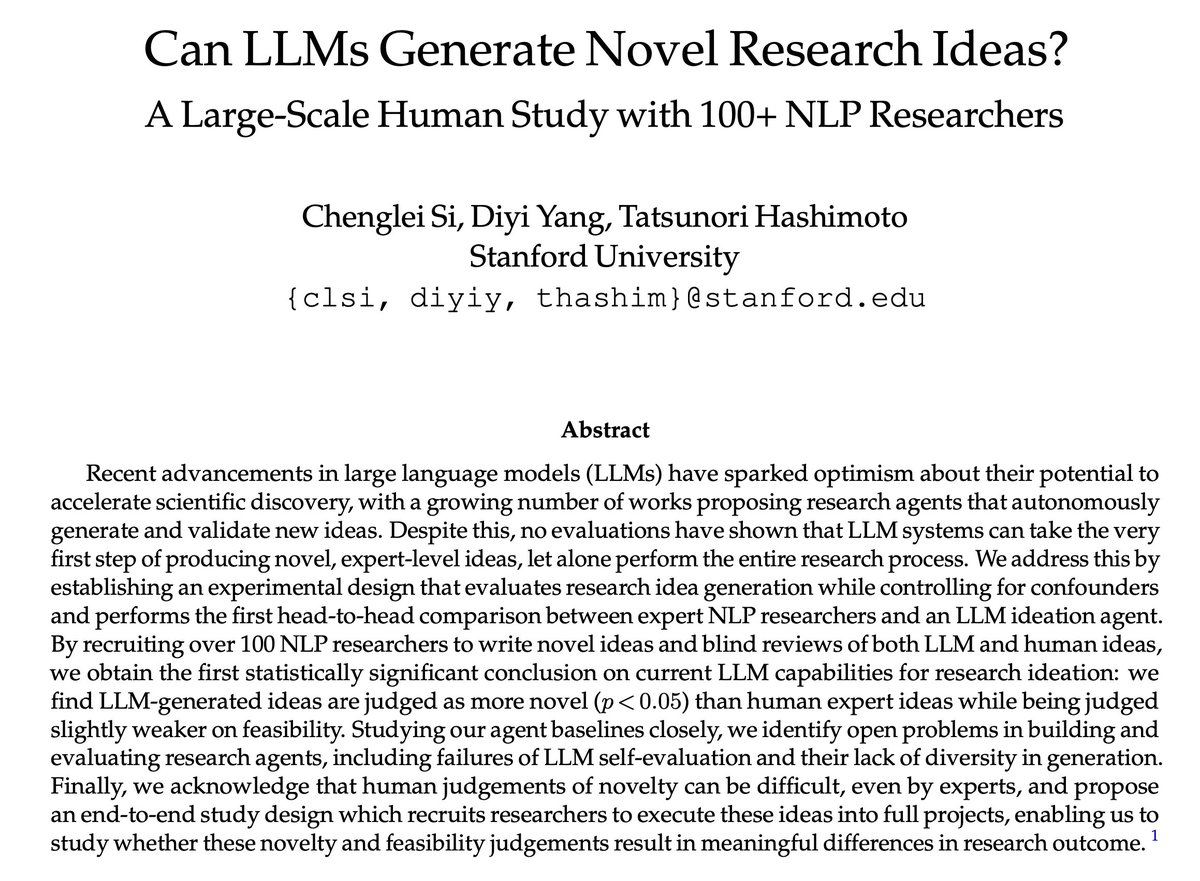

![Nicole Meister (@nicole__meister) on Twitter photo](https://pbs.twimg.com/media/GcNrru3bMAAic66.jpg)

🧵 Prior work has used LLMs to simulate survey responses, yet their ability to match the distribution of views remains uncertain.

Our new paper [arxiv.org/pdf/2411.05403] introduces a benchmark to evaluate how distributionally aligned LLMs are with human opinions.