Tamar Rott Shaham

@tamarrottshaham

Postdoctoral fellow at @MIT_csail

ID: 1028916177584762880

https://tamarott.github.io/

Joined 13-08-2018 08:08:27

151 Tweets

500 Followers

279 Following

How do LMs track what humans believe? In our new work, we show they use a pointer-like mechanism we call lookback. Super proud of this work by Nikhil Prakash and team! This is the most intricate piece of LM reverse engineering I’ve seen!

The new "Lookback" paper from Nikhil Prakash contains a surprising insight... 70b/405b LLMs use double pointers! Akin to C programmers' double (**) pointers. They show up when the LLM is "knowing what Sally knows Ann knows", i.e., Theory of Mind. x.com/nikhil07prakas…

Check out our paper at arxiv.org/abs/2506.22957. Work led by my awesome students Younwoo (Ethan) Choi, Changling Li, and Yongjin Yang at U of T Department of Computer Science, Vector Institute, Schwartz Reisman Institute, and Intelligent Systems ETH Zurich!

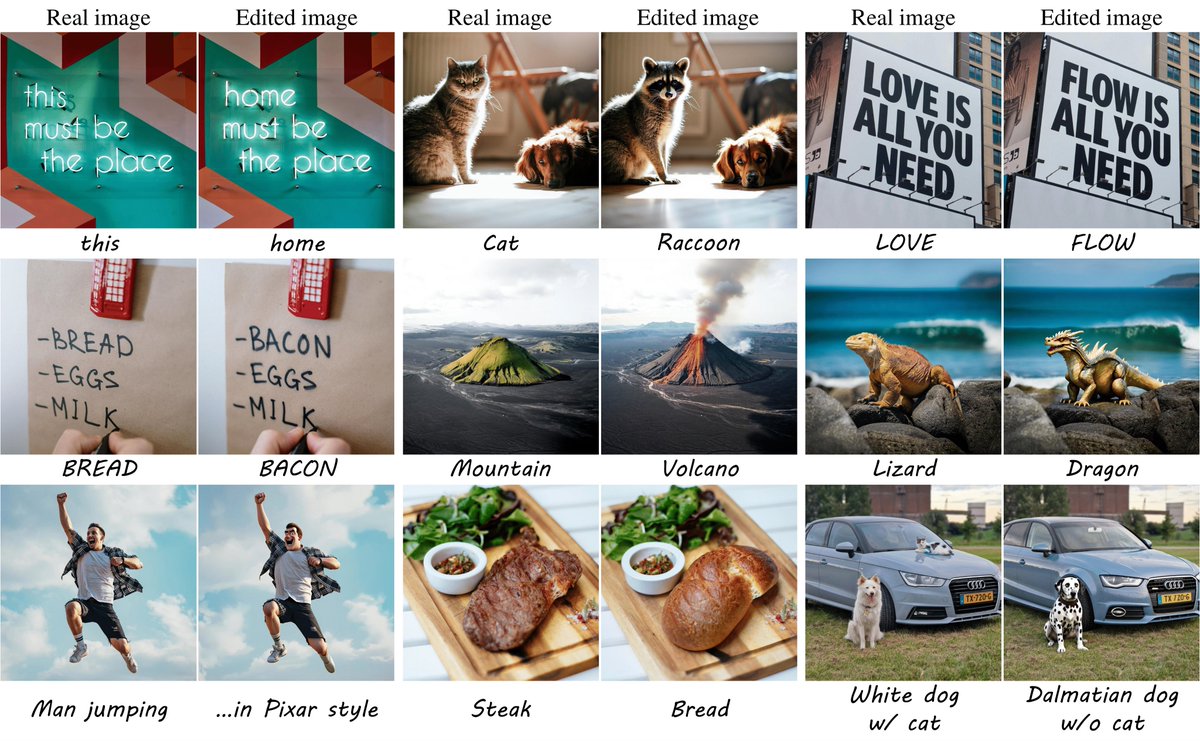

📷 FlowEdit has been accepted to #ICCV2025! Edit real images with text-to-image flow models. Check out the code (github.com/fallenshock/Fl…), the webpage (matankleiner.github.io/flowedit/), a space to edit your images (huggingface.co/spaces/fallens…), and great ComfyUI plugins (logtd) (matankleiner.github.io/flowedit/#comfy).

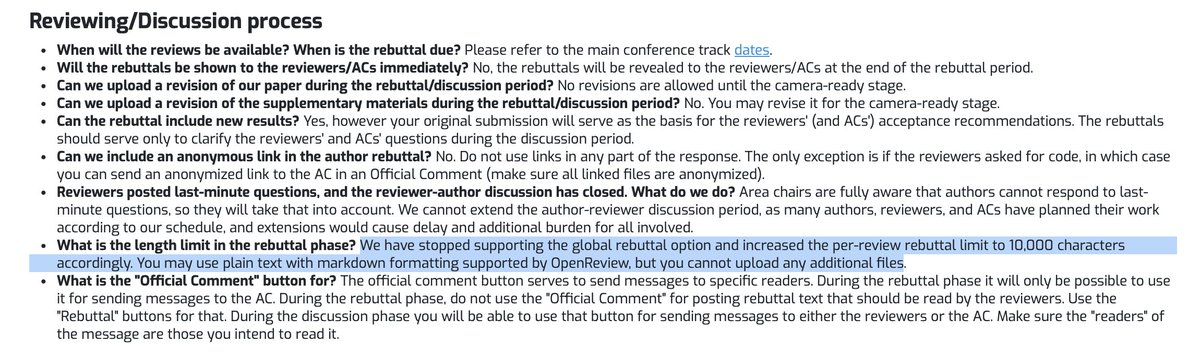

NeurIPS Conference, why take away the authors' option to provide figures in their rebuttals during the rebuttal period? Grounding the discussion in hard evidence (like plots) makes resolving disagreements much easier for both the authors and the reviewers. Left: NeurIPS

![Zhijing Jin✈️ ICLR Singapore (@zhijingjin): [Implicit Personalization of #LLMs] How do we answer the question "What colo(u)r is a football?" Answer 1: "Brown🏈". Answer 2: "Black and white⚽". We propose a #Causal framework to test whether LLMs adjust their answers depending on the cultural background inferred from the question.](https://pbs.twimg.com/media/GwJKk5yWAAAEGoP.jpg)