Nikolay Savinov 🇺🇦

@savinovnikolay

Research Scientist at @GoogleDeepMind

Work on LLM pre-training in Gemini ♊

Lead 10M context length in Gemini 1.5 📈

ID: 973916582530420736

https://www.nsavinov.com

Joined: 14-03-2018 13:39:42

876 Tweets

2.2K Followers

0 Following

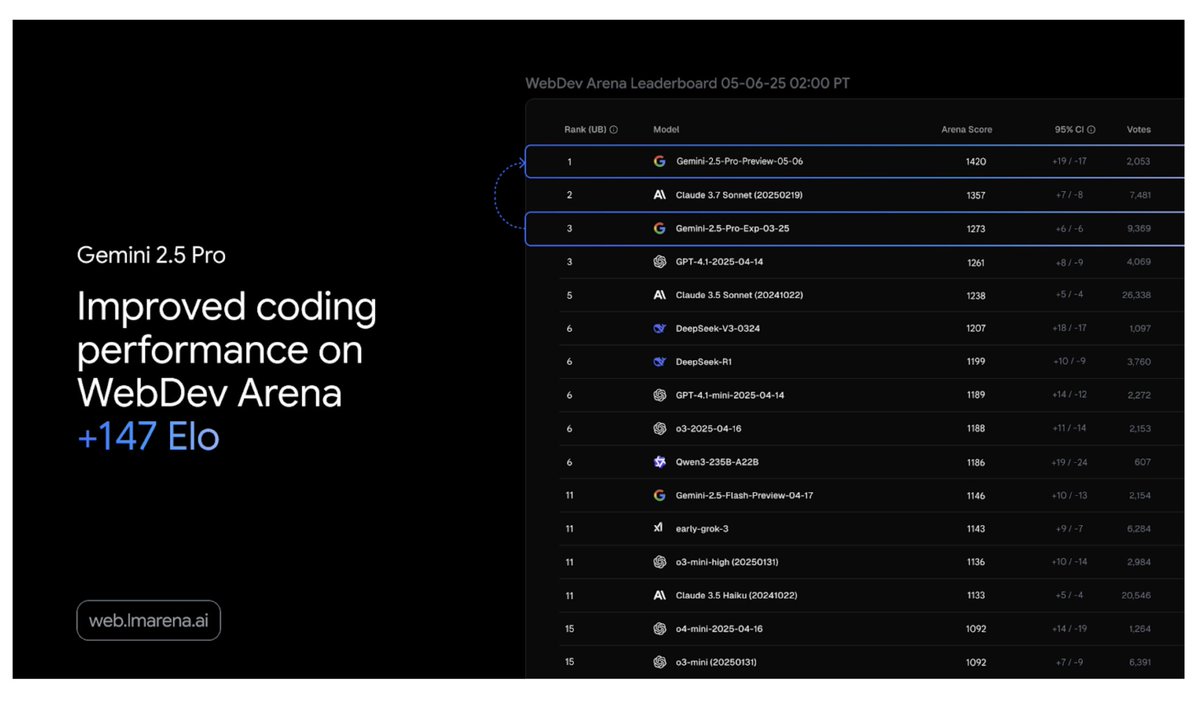

We’re releasing an updated Gemini 2.5 Pro (I/O edition) to make it even better at coding. 🚀 You can build richer web apps, games, simulations and more, all with one prompt. In the Google Gemini App, here's how it transformed images of nature into code representing unique patterns 🌱

🚨Breaking from Arena: Google DeepMind's new Gemini-2.5-Flash climbs to #2 overall in chat, a major jump from its April release (#5 → #2)! Highlights: - Top-2 across major categories (Hard, Coding, Math) - #3 in WebDev Arena, #2 in Vision Arena - New model at the

I'm speaking at Cambridge University next Wednesday, please join me if you are interested in long context! Thanks Petar Veličković for organizing this!