Sam Duffield

@sam_duffield

ID: 478800992

30-01-2012 17:42:30

87 Tweets

471 Followers

601 Following

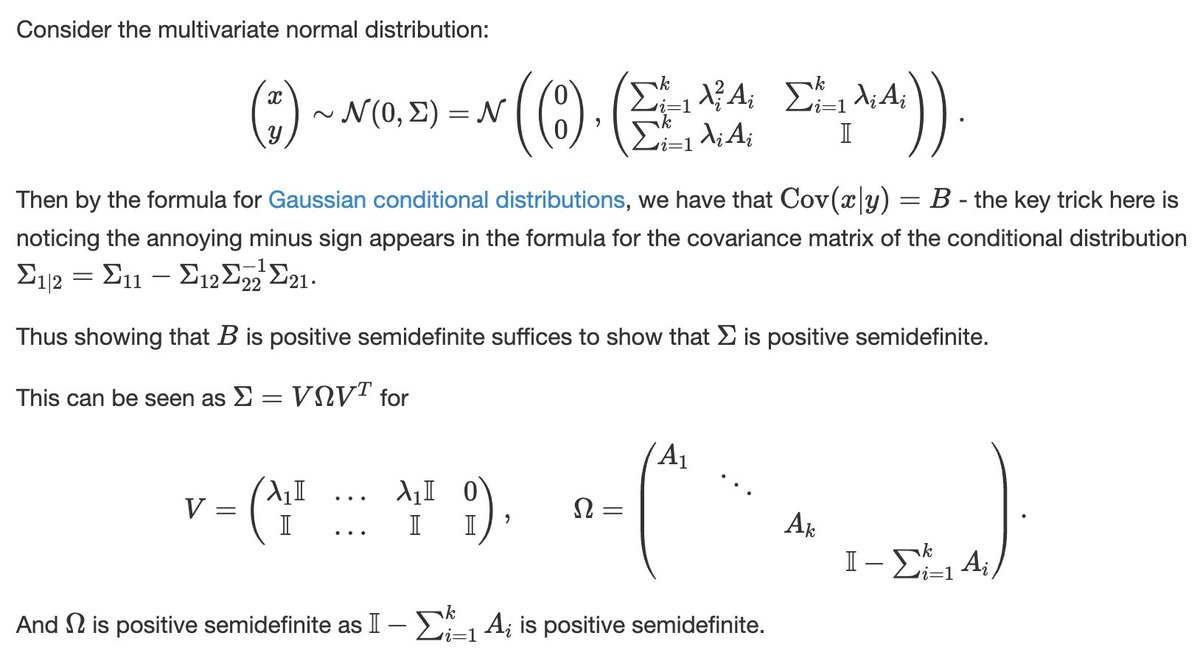

An equally brilliant solution comes to us from Sam Duffield, who deploys a kind of statistical voodoo to reach the goal

during NYC deep tech week, i gave a talk about how Normal Computing 🧠🌡️ is scaling our thermodynamic computing paradigm to tackle large-scale AI and scientific computing workloads. today, we're releasing a blog post based on that talk! ⬇️

Really exciting progress in silicon happening now at Normal Computing 🧠🌡️. Check out Zach's blog post to read more about what we're building. 👇

Come out and join us, so we can talk about physical world AI and thermodynamic chips. Looking forward to hosting with Nathan Benaich.

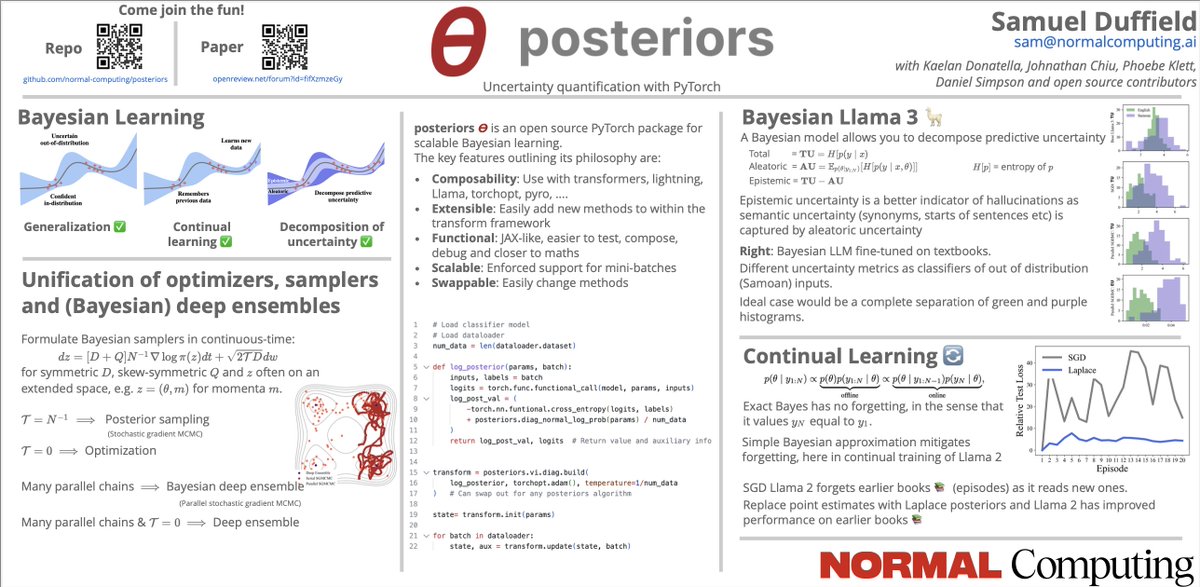

posteriors 𝞡, our open source Python library for Bayesian computation, will be presented at #ICLR2025! posteriors provides essential tools for uncertainty quantification and reliable AI training. Join Sam Duffield at his poster session 'Scalable Bayesian Learning with

Luca Ambrogioni Cohere Labs ML Collective Hmm. To me it makes sense that first-order methods are ones that use first derivatives and an analytical model of second-order structure. Second-order methods should use first *and* second derivatives (and ideally an analytical model of third-order structure)

So simple!

Normally, we order our minibatches like

a, b, c, ...., [shuffle], new_a, new_b, new_c, ....

but instead, if we do

a, b, c, ...., [reverse], ...., c, b, a, [shuffle], new_a, new_b, ....

The RMSE of stochastic gradient descent reduces from O(h) to O(h²)

![Sam Duffield (@sam_duffield) on Twitter photo](https://pbs.twimg.com/media/GoKVnRxWIAA8lR9.png)
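
The minibatch ordering described in the post can be sketched in a few lines of Python. This is only an illustration of the schedule (shuffle, forward pass, the same pass reversed, reshuffle); it does not reproduce or verify the O(h²) error claim. The function name `forward_reverse_batches` and its `num_cycles` and `seed` parameters are hypothetical names chosen for this sketch, not part of any library.

```python
import random
from typing import Iterator, Sequence


def forward_reverse_batches(
    batches: Sequence, num_cycles: int, seed: int = 0
) -> Iterator:
    """Yield minibatches as: shuffled pass, same pass reversed, reshuffle, repeat.

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    order = list(range(len(batches)))
    for _ in range(num_cycles):
        rng.shuffle(order)          # [shuffle]: draw a fresh random order
        for i in order:             # forward pass: a, b, c, ...
            yield batches[i]
        for i in reversed(order):   # [reverse]: ..., c, b, a
            yield batches[i]


batches = ["a", "b", "c", "d"]
schedule = list(forward_reverse_batches(batches, num_cycles=2))
```

In a training loop, each yielded item would be passed to the usual gradient step; the only change from standard epoch-wise shuffling is that every shuffled pass is immediately followed by its mirror image before the next shuffle.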