Ziteng Sun

@sziteng

Responsible and efficient AI.

Topics: LLM efficiency; LLM alignment; Differential Privacy; Information Theory. Research Scientist @Google; PhD @Cornell

ID: 3020905377

http://zitengsun.com

06-02-2015 03:04:03

67 Tweets

428 Followers

388 Following

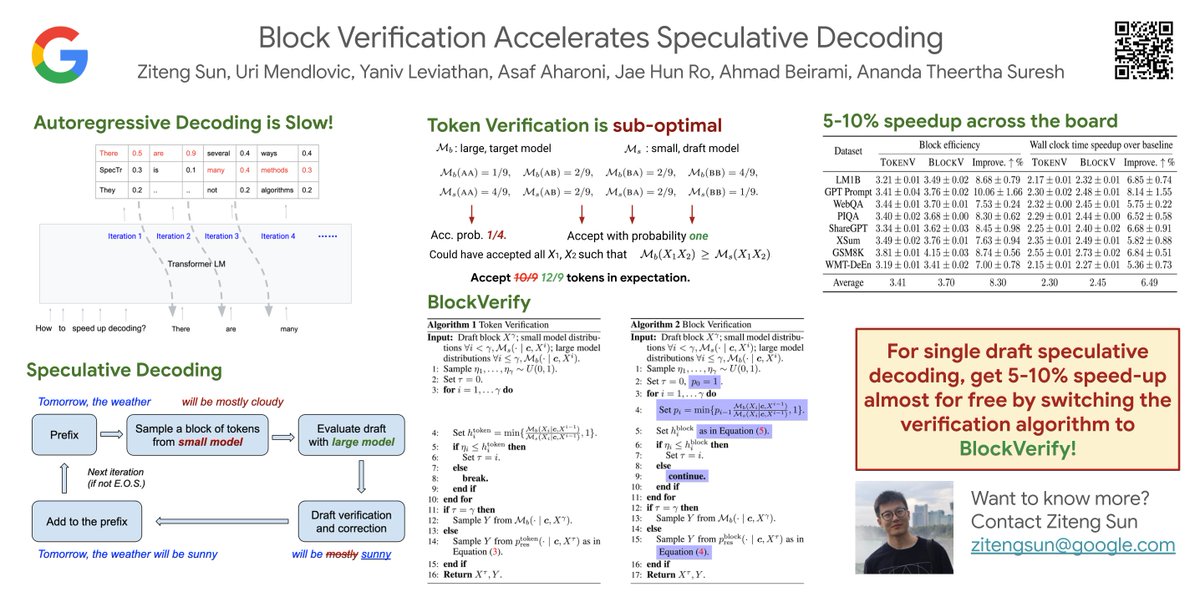

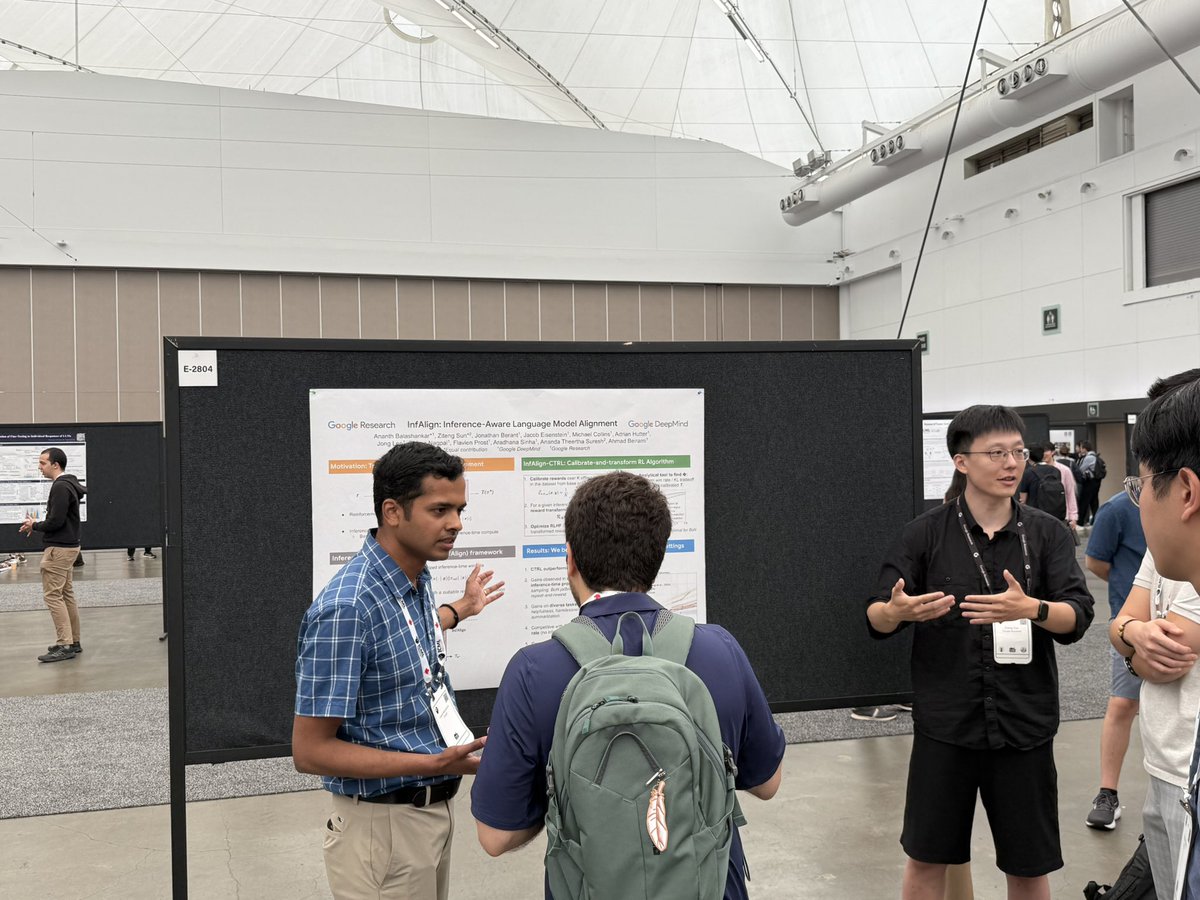

Today at 10am I will present Ziteng Sun's paper "Block Verification Accelerates Speculative Decoding".