Roy Bar Haim

@roybarhaim

Senior Technical Staff Member, NLP at IBM Research AI. Opinions are my own

ID: 1058255708

https://research.ibm.com/people/roy-bar-haim

03-01-2013 16:54:36

24 Tweets

42 Followers

36 Following

NAACL'21 main conference is starting today! Meet our researchers and recruiting team at the IBM Research virtual booth: underline.io/events/122/exp…, and learn more about IBM Research's presence at @NAACLHLT, careers, and the booth schedule at ibm.biz/naacl2021

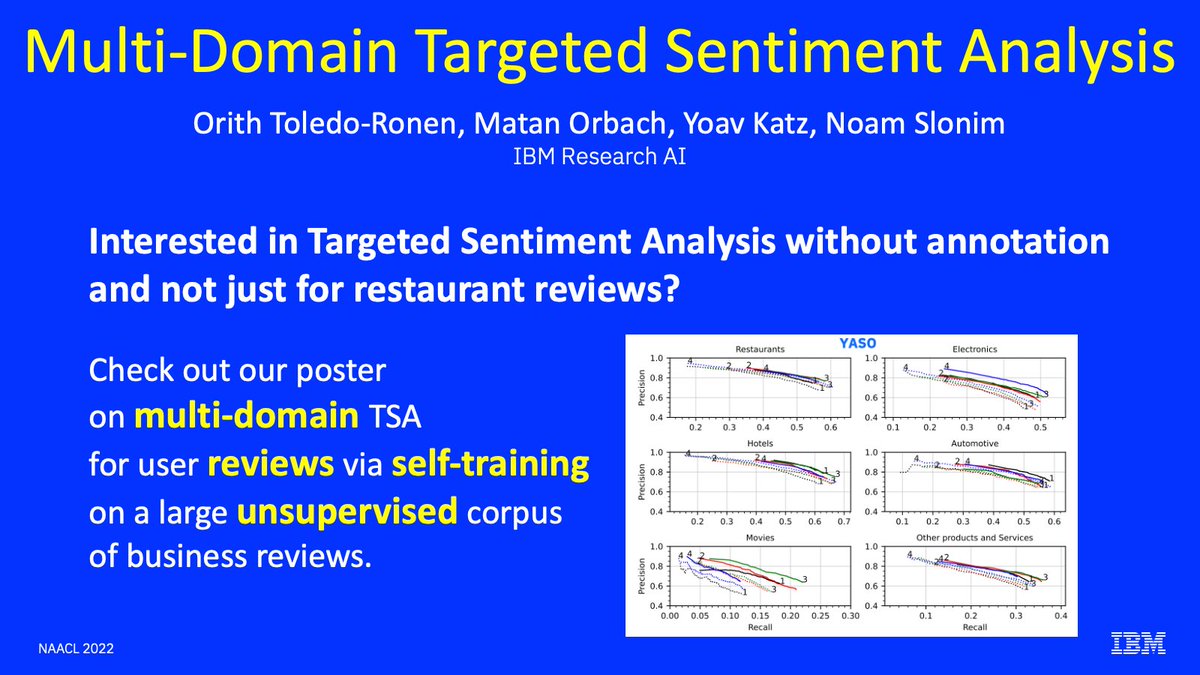

Interested in TARGETED #SentimentAnalysis beyond restaurant reviews? In our #NAACL2022 paper, we propose a robust multi-domain model that relies on self-training and requires no extra annotation: arxiv.org/abs/2205.03804 Orith Toledo-Ronen matan orbach Yoav Katz Noam Slonim 🟢 #NLProc #IBMResearch (1/5)

NAACL 2022 is starting on Sunday! Visit our website ibm.biz/naacl2022 to learn about the exciting NLP work from IBM Research that will be presented at this conference. IBM Research NAACL HLT 2022 #NAACL2022

Welcome PrimeQA at #NAACL2022! Quickly replicate state-of-the-art results on multilingual open QA! Here’s a new open-source repo, built in collaboration with Stanford NLP Group, Hugging Face, Uni Stuttgart, and @NLPIllinois1. Link: github.com/primeqa/primeqa Talk to me or read: research.ibm.com/blog/primeqa-f… 🧵

Want to build a text classifier in a few hours, even if you don’t have any labeled data, #machineLearning knowledge, or programming skills? Try Label Sleuth (ibm.biz/label-sleuth), a new open-source, no-code system for annotation 🧵 IBM Research University of Notre Dame Stanford Human-Computer Interaction Group UT Dallas #NLProc

Curious to see how we can summarize opinions beyond plain-text summaries? Check out our #ACL2023 paper, "From Key Points to Key Point Hierarchy: Structured and Expressive Opinion Summarization", with Lilach Eden, yoav kantor, and Roy Bar Haim from IBM Research IBM BIU NLP >>