Roberto López Castro

@robertol_castro

Costa da Morte - A Coruña - Wien | Postdoc Researcher @ ISTA

ID: 1225736906

27-02-2013 18:12:39

49 Tweets

98 Followers

216 Following

📰Now available on #RUC and #Zenodo: the work of the #GAC group at the Faculty of Computer Science of the UDC, "STuning-DL: Model-Driven Autotuning of Sparse GPU Kernels for Deep Learning" (doi.org/10.1109/ACCESS…). hdl.handle.net/2183/36810 & zenodo.org/uploads/114893…. #cienciaaberta

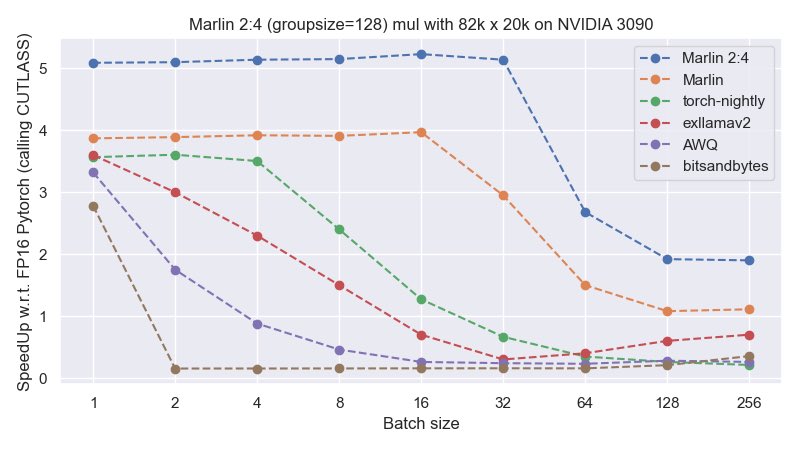

Happy to release the write-up on the MARLIN kernel for fast LLM inference, now supporting 2:4 sparsity! Led by Elias Frantar & Roberto López Castro. Paper: arxiv.org/abs/2408.11743 Code: github.com/IST-DASLab/Spa… MARLIN is integrated with vLLM thanks to @neuralmagic!

Code: github.com/IST-DASLab/Spa… Paper: arxiv.org/abs/2408.11743 Made possible by: Dan Alistarh, Elias Frantar, Roberto López Castro. Shout out to Neural Magic engineers who swiftly integrated Sparse-Marlin into #vLLM for immediate use.

Today, Red Hat completed the acquisition of Red Hat AI (formerly Neural Magic), a pioneer in software and algorithms that accelerate #GenAI inference workloads. Read how we are accelerating our vision for #AI’s future: red.ht/408kJ8K.

Happy to release #HALO, a Hadamard-Assisted Lower-Precision scheme that enables INT8/FP6 full #finetuning (FFT) of LLMs. With @mmnnn76, Rush Tabesh, Roberto López Castro, Torsten Hoefler 🇨🇭, and Dan Alistarh. Paper: arxiv.org/pdf/2501.02625 Code: github.com/IST-DASLab/HALO 1/4

Yesterday, Jiale and Roberto presented the paper MARLIN: Mixed-Precision Auto-Regressive Parallel Inference on Large Language Models at PPoPP 2025 in Las Vegas! Want to know more? Check out the paper here👇dl.acm.org/doi/10.1145/37… #HPC Torsten Hoefler 🇨🇭 ETH CS Department

Our QuEST paper was selected for an Oral Presentation at the Sparsity in LLMs Workshop at ICLR 2025! QuEST is the first algorithm with Pareto-optimal LLM training for 4-bit weights/activations, and can even train accurate 1-bit LLMs. Paper: arxiv.org/abs/2502.05003 Code: github.com/IST-DASLab/QuE…

Introducing MoE-Quant, a fast version of GPTQ for MoEs, with:

* Optimized Triton kernels and expert&data parallelism

* Quantizes the 671B DeepSeekV3/R1 models in 2 hours on 8xH100

* ~99% accuracy recovery for 4bit R1 on *reasoning* tasks, and 100% recovery on leaderboards

[1/3]

![Dan Alistarh (@dalistarh) on Twitter photo](https://pbs.twimg.com/media/GoAg9K2XoAEeYWB.png)

We are introducing Quartet, a fully FP4-native training method for Large Language Models, achieving optimal accuracy-efficiency trade-offs on NVIDIA Blackwell GPUs! Quartet can be used to train billion-scale models in FP4 faster than FP8 or FP16, at matching accuracy.

[1/4]

![Dan Alistarh (@dalistarh) on Twitter photo](https://pbs.twimg.com/media/Gr4-TfhX0AEa_WW.jpg)