Pooyan Rahmanzadehgervi

@pooyanrg

CS PhD student @AuburnU

ID: 1732457691434360832

06-12-2023 17:51:38

14 Tweets

25 Followers

82 Following

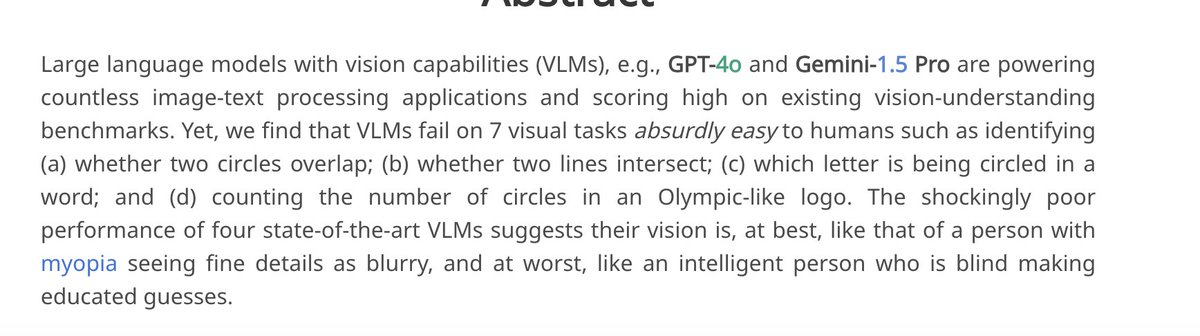

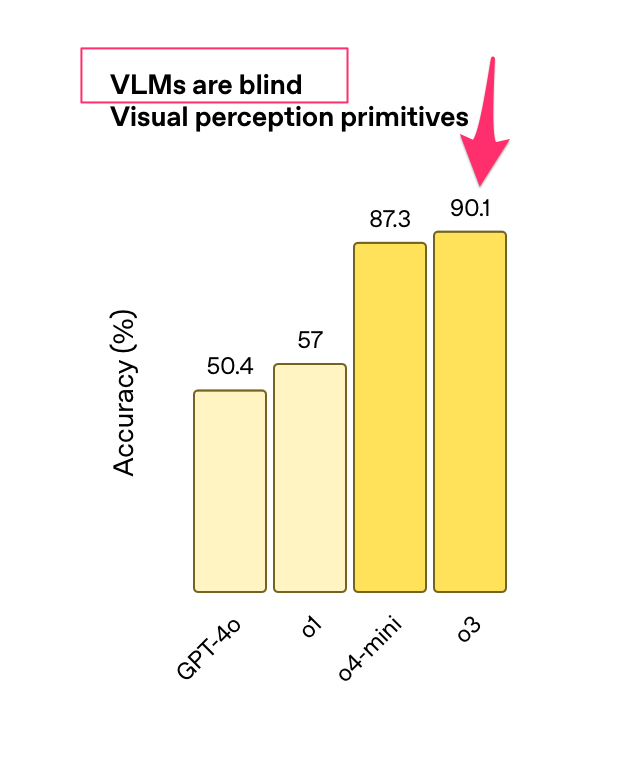

Counting the number of intersections in this simple image turns out to be surprisingly challenging for Vision-Language Models (VLMs). This task was introduced in the 'VLMs are blind' paper, and I discovered it through an insightful blog post by Lucas Beyer (bl16). [1/N]

![Photo from Aritra R G (@arig23498) on Twitter](https://pbs.twimg.com/media/GeVP68jbEAEFK56.png)
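The task asks how many times two line plots cross. The sketch below computes that ground-truth count, assuming each plot is a polyline sampled at the same strictly increasing x-coordinates. The function name `count_intersections` is hypothetical and does not come from the paper's code. Both lines are straight between shared x-coordinates, so their vertical gap is linear on each segment. A strict sign change in the gap therefore means exactly one crossing inside that segment, and a zero gap means the lines touch at a vertex.

```python
from typing import Sequence


def count_intersections(
    xs: Sequence[float], ys_a: Sequence[float], ys_b: Sequence[float]
) -> int:
    """Count points where two polylines sharing the x-coordinates `xs` meet."""
    if not (len(xs) == len(ys_a) == len(ys_b)):
        raise ValueError("all inputs must have the same length")
    if any(x1 >= x2 for x1, x2 in zip(xs, xs[1:])):
        raise ValueError("xs must be strictly increasing")
    # Vertical gap between the two lines at each shared x-coordinate
    diffs = [a - b for a, b in zip(ys_a, ys_b)]
    count = 0
    for i, d in enumerate(diffs):
        has_next = i + 1 < len(diffs)
        if d == 0:
            # The lines touch exactly at this shared vertex
            if has_next and diffs[i + 1] == 0:
                raise ValueError("lines overlap along a whole segment")
            count += 1
        elif has_next and d * diffs[i + 1] < 0:
            # Sign change: exactly one crossing strictly inside segment i
            count += 1
    return count


print(count_intersections([0, 1, 2], [0, 2, 0], [1, 1, 1]))  # 2
```

The overlapping-segment case raises an error rather than returning a count, because two collinear segments share infinitely many points.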

How do the best AI image editors 🤖 GPT-4o, Gemini 2.0, SeedEdit, HF 🤗 fare ⚔️ against human Photoshop wizards 🧙‍♀️ on text-based 🏞️ image editing? Logan Bolton and Brandon Collins shared some answers at our poster today! #CVPR2025 psrdataset.github.io A few insights 👇

Pooyan Rahmanzadehgervi presenting our Transformer Attention Bottleneck paper at #CVPR2026 💡 We **simplify** MHSA (multi-head self-attention, e.g. 12 heads -> 1 head) to create an attention **bottleneck** where users can debug Vision Language Models by editing the bottleneck and observing the expected VLM text outputs.
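The sketch below illustrates the idea of a one-head attention bottleneck, assuming standard scaled dot-product attention. It is not the authors' implementation, and the paper's actual bottleneck design may differ. With a single head there is exactly one attention map per layer. A user-supplied hook can inspect or overwrite that map before it mixes the value vectors. The names `single_head_attention`, `edit`, and `focus_on_token` are hypothetical.

```python
import math
from typing import Callable, List, Optional, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def single_head_attention(
    q: Matrix,
    k: Matrix,
    v: Matrix,
    edit: Optional[Callable[[Matrix], Matrix]] = None,
) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product attention with one head; returns (output, attention_map).

    If `edit` is given (hypothetical hook), its return value replaces the
    attention map before the value rows are mixed.
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    # One row of scores per query token: q_i . k_j / sqrt(d)
    scores = [
        [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        for q_row in q
    ]
    attn = [softmax(row) for row in scores]
    if edit is not None:
        attn = edit(attn)  # the single map is the editable bottleneck
    # Each output row is the attention-weighted sum of the value rows
    out = [
        [sum(w * v_row[j] for w, v_row in zip(a_row, v)) for j in range(len(v[0]))]
        for a_row in attn
    ]
    return out, attn


def focus_on_token(index: int) -> Callable[[Matrix], Matrix]:
    """Hypothetical edit: make every query attend only to token `index`."""
    def edit(attn: Matrix) -> Matrix:
        n = len(attn[0])
        return [[1.0 if j == index else 0.0 for j in range(n)] for _ in attn]
    return edit


q = k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
out, attn = single_head_attention(q, k, v)
edited_out, _ = single_head_attention(q, k, v, edit=focus_on_token(1))
print(edited_out)  # [[3.0, 4.0], [3.0, 4.0]]
```

Forcing all attention onto token 1 makes every output row equal to that token's value row. This is the kind of predictable change a user can check after editing a single-head bottleneck. With 12 heads, the same edit would have to be coordinated across 12 separate maps.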