Pinyuan Feng (Tony)

@pinyuan3

PhD student @ColumbiaPSYC 🦁 @KriegeskorteLab | Prev @BrownCSDept 🐻 @serre_lab | Brain 🧠 Mind 💭 Machine 🤖

ID: 1183016486367039488

12-10-2019 13:48:20

13 Tweets

46 Followers

366 Following

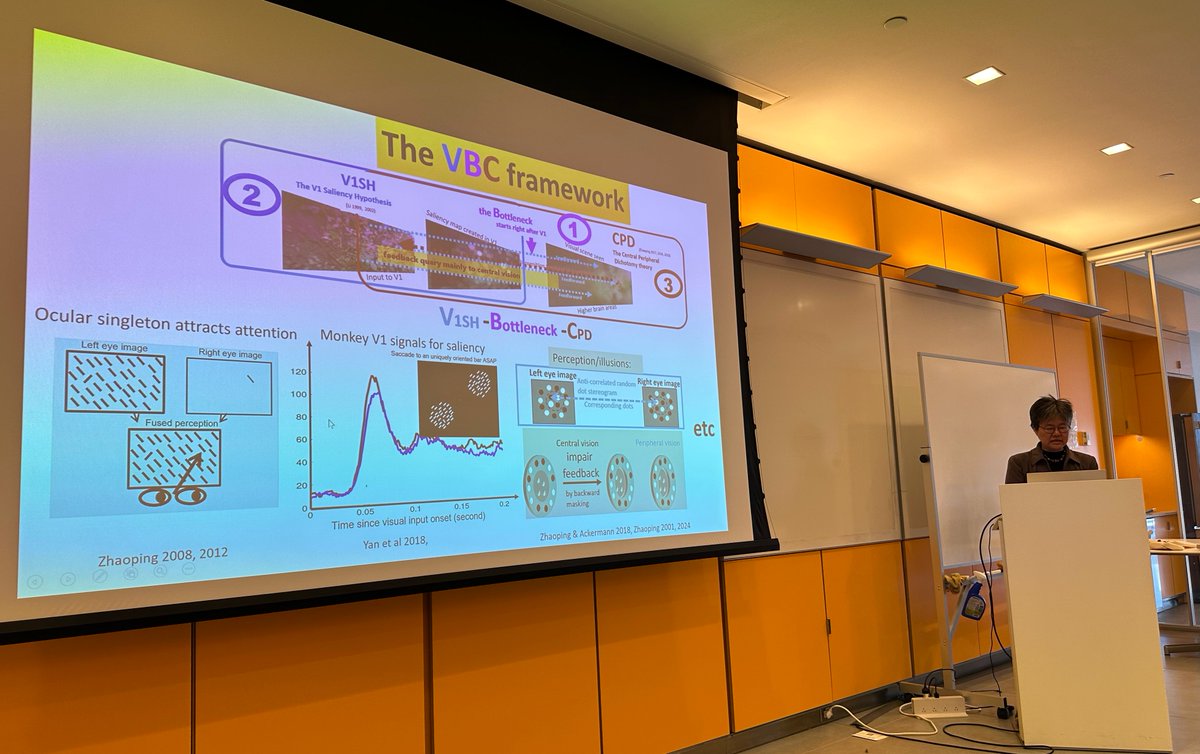

Amazing talk by Li Zhaoping on "Looking and Seeing through a Bottleneck: a VBC Framework for Vision, from the Perspective of Primary Visual Cortex". See her 2024 paper "Peripheral vision is mainly for looking rather than seeing": sciencedirect.com/science/articl…

Finally finished making our website, GrowAIlikeAChild: growing-ai-like-a-child.github.io 🙌 with William Yijiang Li, Dezhi Luo, Martin Ziqiao Ma, Zory Zhang, Pinyuan Feng (Tony), Pooyan Rahmanzadehgervi, and many others 🚶🚶‍♂️🚶‍♀️

Congrats to Columbia University scientist Paul Linton who received the David Marr Medal from the Applied Vision Association for "pioneering research on visual experience." 🏆👁️🧠

Ready to present your latest work? The Call for Papers for #UniReps2025 NeurIPS Conference is open! 👉 Check the CFP: unireps.org/2025/call-for-… 🔗 Submit your Full Paper or Extended Abstract here: openreview.net/group?id=NeurI… Speakers and panelists: Danica Sutherland, David Alvarez Melis, Nikolaus Kriegeskorte

Great to present my work "Five Illusions Challenge Our Understanding of Visual Experience" at the European Conference on Visual Perception (#ECVP2025) Project Website + Preprint in link below 👇 The Italian Academy Nikolaus Kriegeskorte Columbia University's Zuckerman Institute Center for Science and Society Columbia Science

Encoding models predict visual responses to novel images in cortical areas. But can these models offer new insights about categorical representations? If so, we should be able to generate new hypotheses from them to be tested in future experiments. NeurIPS Conference #NeurIPS2025 1/15

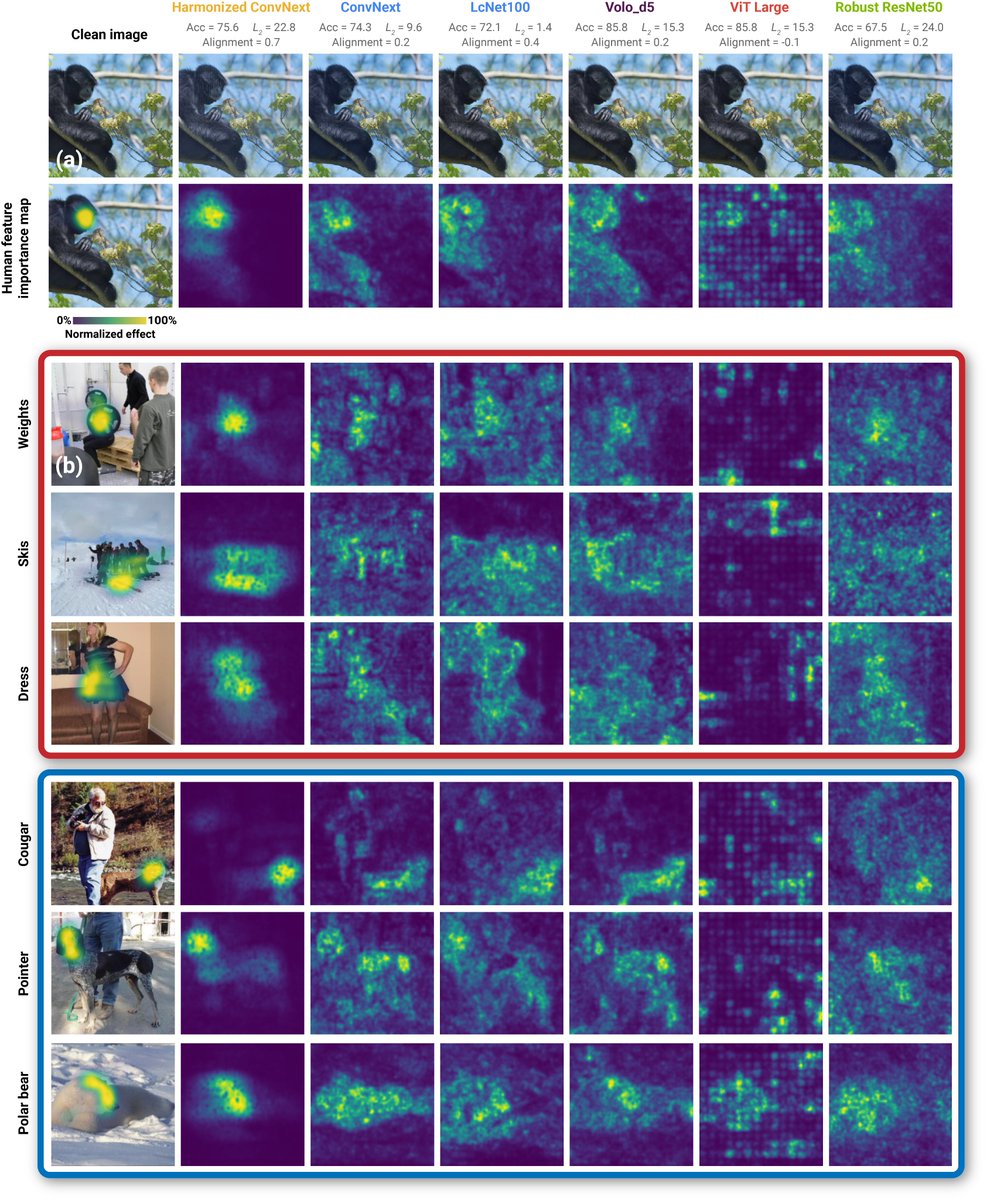

![CBMM (@mit_cbmm) on Twitter, video: "Aligning deep networks with human vision will require novel neural architectures, data diets and training algorithms", Thomas Serre, Brown University, cbmm.mit.edu/video/aligning…](https://pbs.twimg.com/media/GkkpuDwbkAIGfr6.jpg)