OpenPipe

@openpipeai

OpenPipe: Fine-tuning for production apps. Train higher quality, faster models. (YC S23)

ID: 1686408031268200448

https://openpipe.ai/ 01-08-2023 16:06:36

94 Tweets

2.2K Followers

2 Following

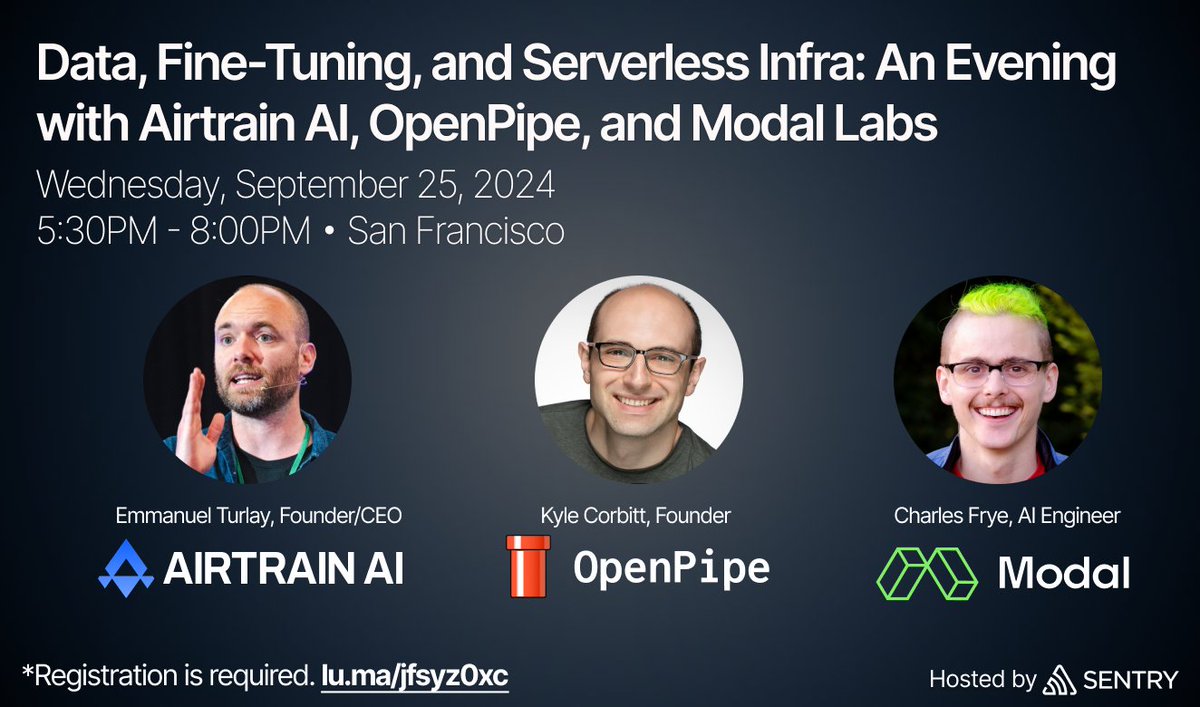

Our CEO Kyle Corbitt is giving a talk next Wed in SF w/ Emmanuel Turlay and Charles Frye 🎉 Kyle's topic is fine-tuning best practices: how to get far higher quality LLM results vs prompting alone, and when to explore this optimization technique. Check it out! lu.ma/jfsyz0xc

I write about this shift and the tools I expect to be part of the permanent core dev stack: Together AI, Fireworks AI, Groq Inc, OpenPipe, Martian, Unstructured, LangChain, LlamaIndex 🦙, Pinecone and Qdrant. Check out the article here:

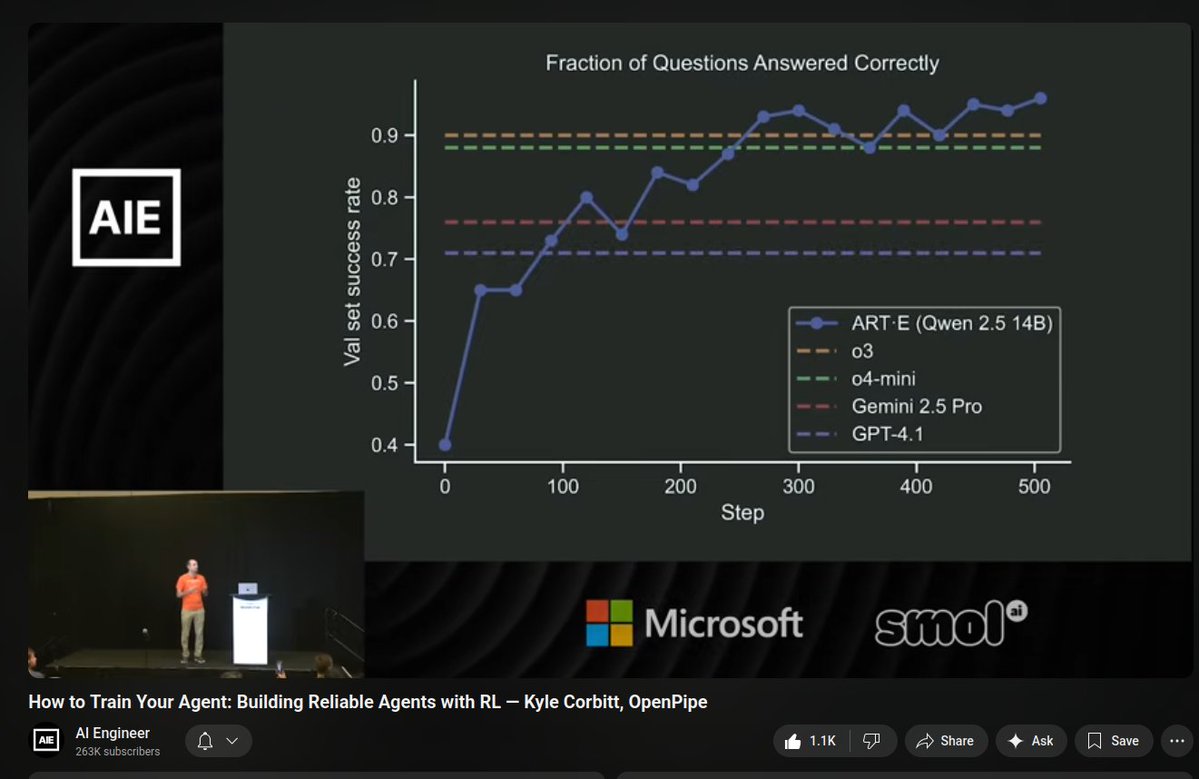

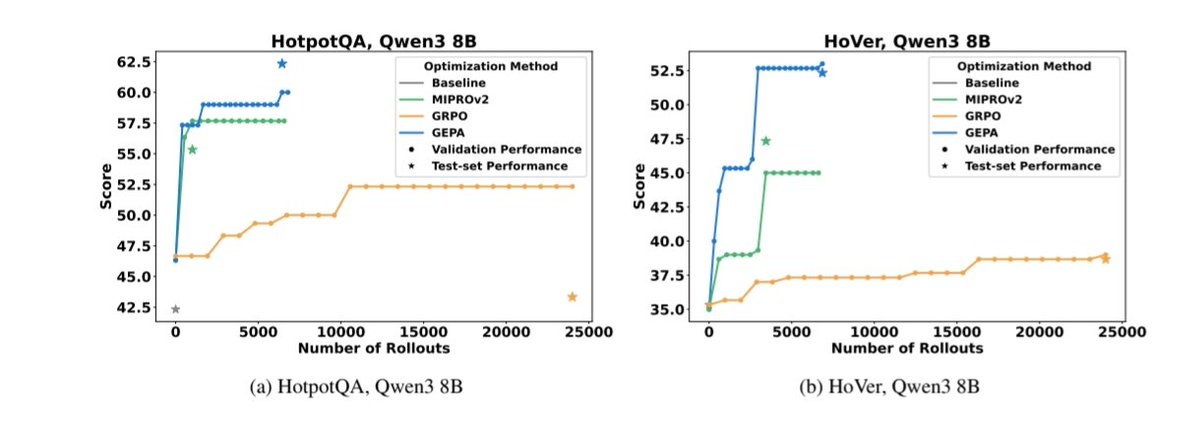

Yacine Mahdid One thing that surprised me quite a bit is how well LLM feedback works for automatic improvement. OpenPipe is one example with RL, but it also works well with prompt optimization (sometimes outperforming RL); see the GEPA paper: arxiv.org/abs/2507.19457

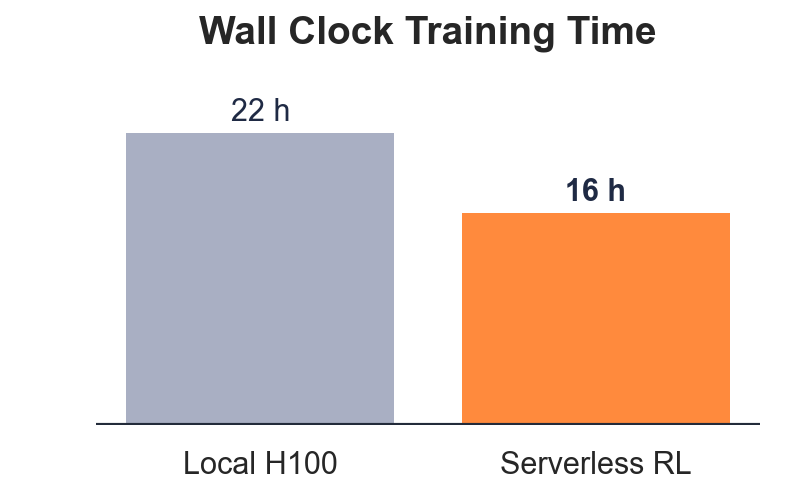

Maziyar PANAHI Trelis Research Weights & Biases Serverless RL from the OpenPipe crew (now also at CoreWeave) with W&B inference is pretty sweet. New models are being added soon too: openpipe.ai/blog/serverles…

Morgan McGuire Trelis Research Weights & Biases OpenPipe I am looking forward to OpenPipe next week on the Alex Volkov (Thursd/AI) podcast, to see what's new and what's ahead. Awesome! Looking forward to it.

The Custom SLMs era is upon us 🙌
- Nanochat by Andrej Karpathy
- Tinker (PEFTaaS) by Thinking Machines
- Tunix (Post-train in JAX) by Google AI
- ART (Agent RL) by OpenPipe
- Environments Hub by Prime Intellect
- NeMo Microservices by NVIDIA