Or Patashnik

@opatashnik

PhD student @ Tel-Aviv University

ID: 1125521043932758017

http://orpatashnik.github.io 06-05-2019 22:01:42

266 Tweets

1.1K Followers

418 Following

Happy to share that FlowEdit received the Best Student Paper Award at #ICCV2025 🎉 Huge congrats to the team: Vova Kulikov, matan kleiner, Inbar Huberman-Spiegelglas

Wow, really impressive. Looking forward to the open sourcing of the video-audio model! Big congrats to the LTX team! ⭐ Yoav HaCohen

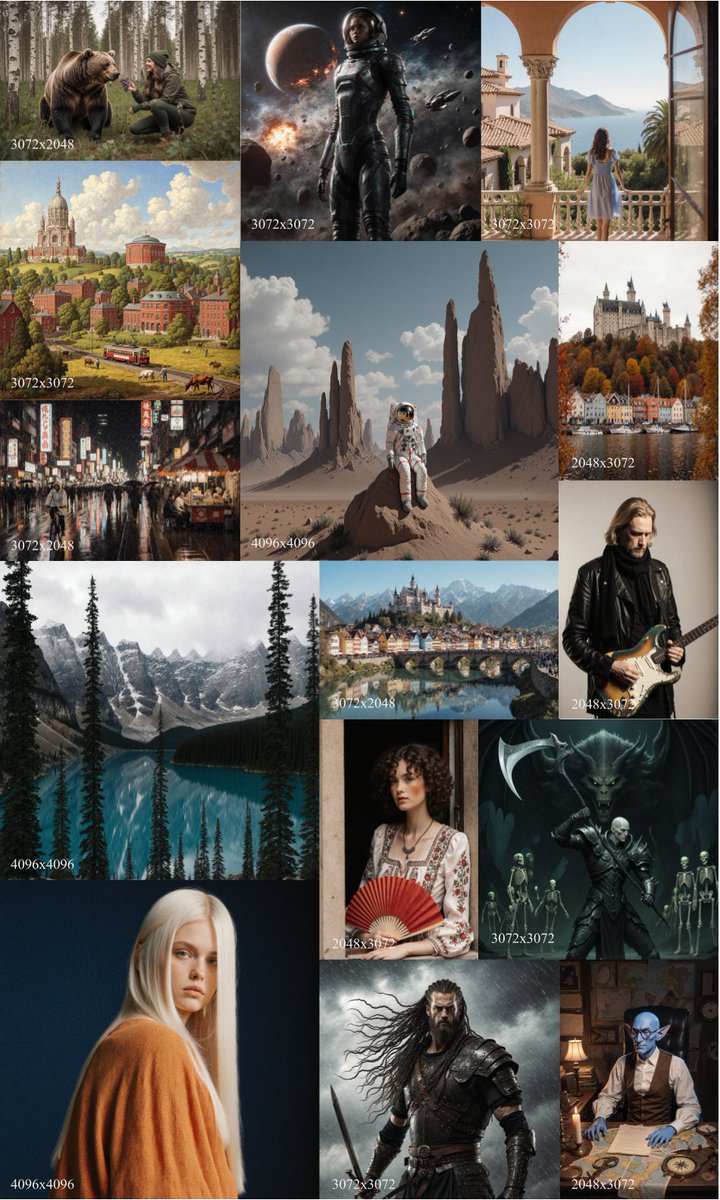

Ron Mokady (@mokadyron):

Generating an image from 1,000 words.

Very excited to release Fibo 😃, the first ever open-source model trained exclusively on long, structured captions.

Fibo sets a new standard for controllability and disentanglement in image generation

[1/6] 🧵

![Ron Mokady (@mokadyron) on Twitter photo](https://pbs.twimg.com/media/G4cM-ovWYAAvL05.jpg)