Michael Poli

@michaelpoli6

AI, numerics and systems @StanfordAILab. Founding Scientist @LiquidAI_

ID: 1027766058390716416

https://zymrael.github.io/

10-08-2018 03:58:18

379 Tweets

2.2K Followers

347 Following

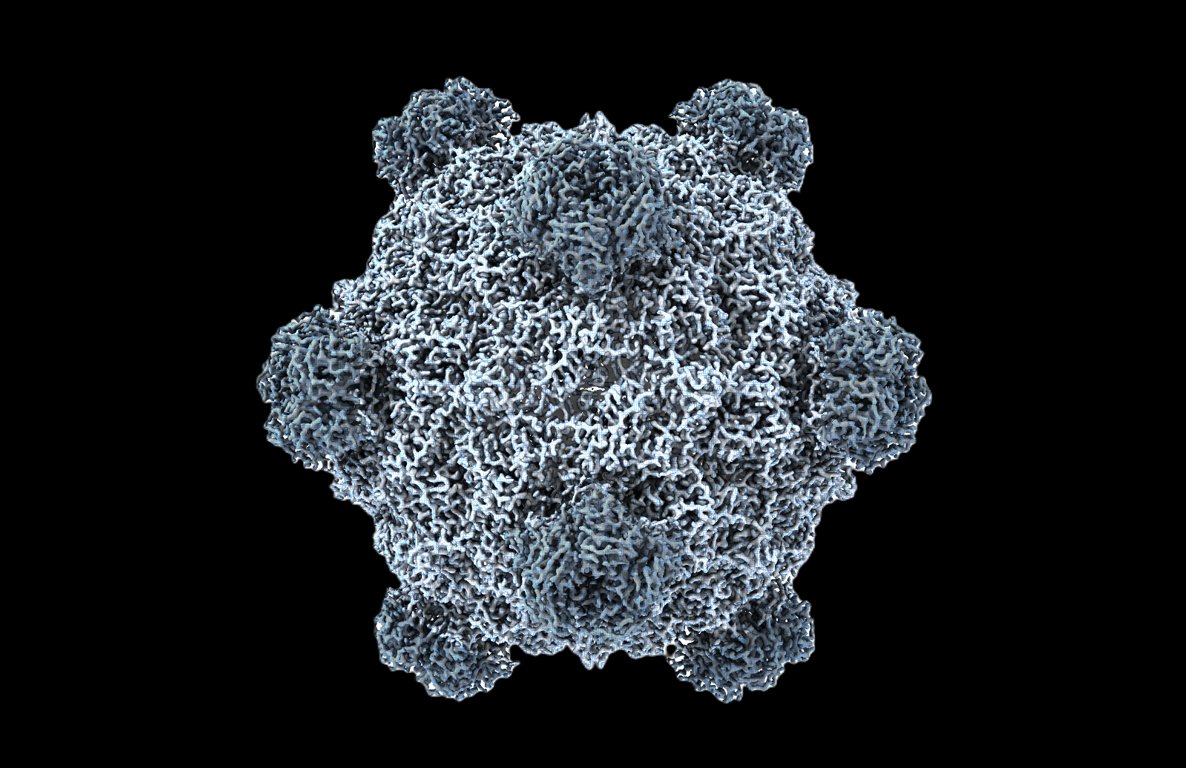

Welcome to the age of generative genome design! In 1977, Sanger et al. sequenced the first genome—of phage ΦX174. Today, led by Samuel King, we report the first AI-generated genomes. Using ΦX174 as a template, we made novel, high-fitness phages with genome language models. 🧵

Thank you for having me, Delta Institute! We touched on a few topics, including the story behind Radical Numerics.