Jonibek Mansurov

@m_jonibek

ID: 1658226525831852035

15-05-2023 21:43:41

2 Tweets

12 Followers

69 Following

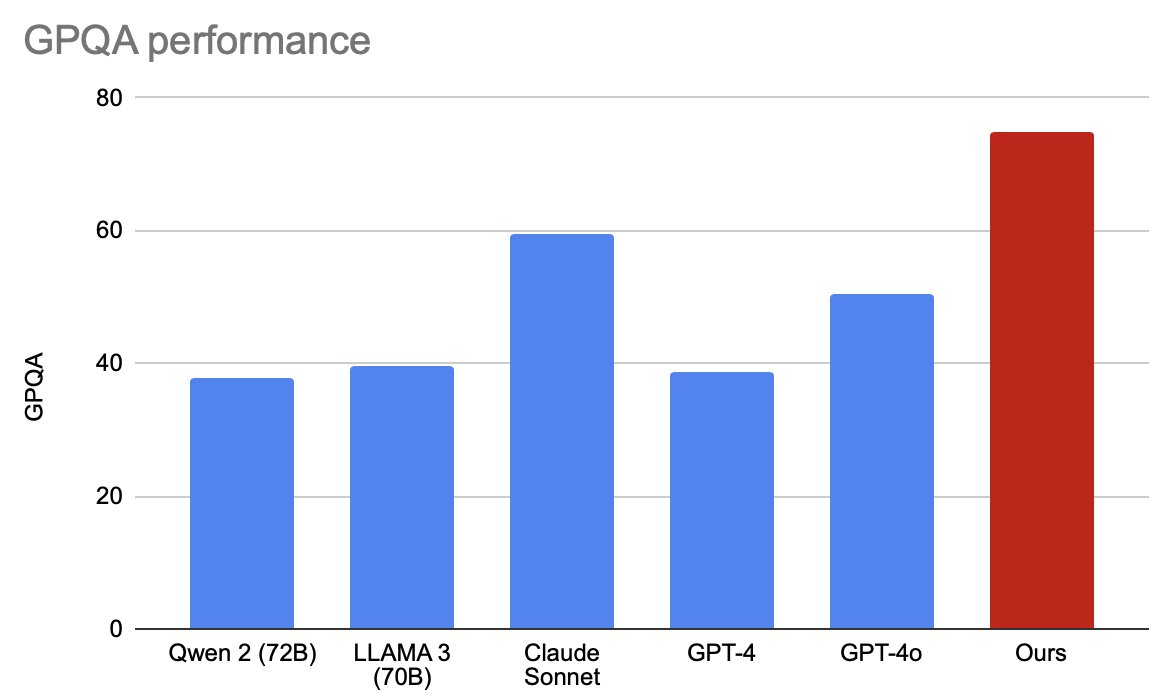

Final work promotion in 2024, by Jonibek Mansurov! We managed to achieve ~75% on the challenging GPQA benchmark with only 2 transformer layers (~40M params) that were trained on different data; in our case, MedMCQA. Introducing...

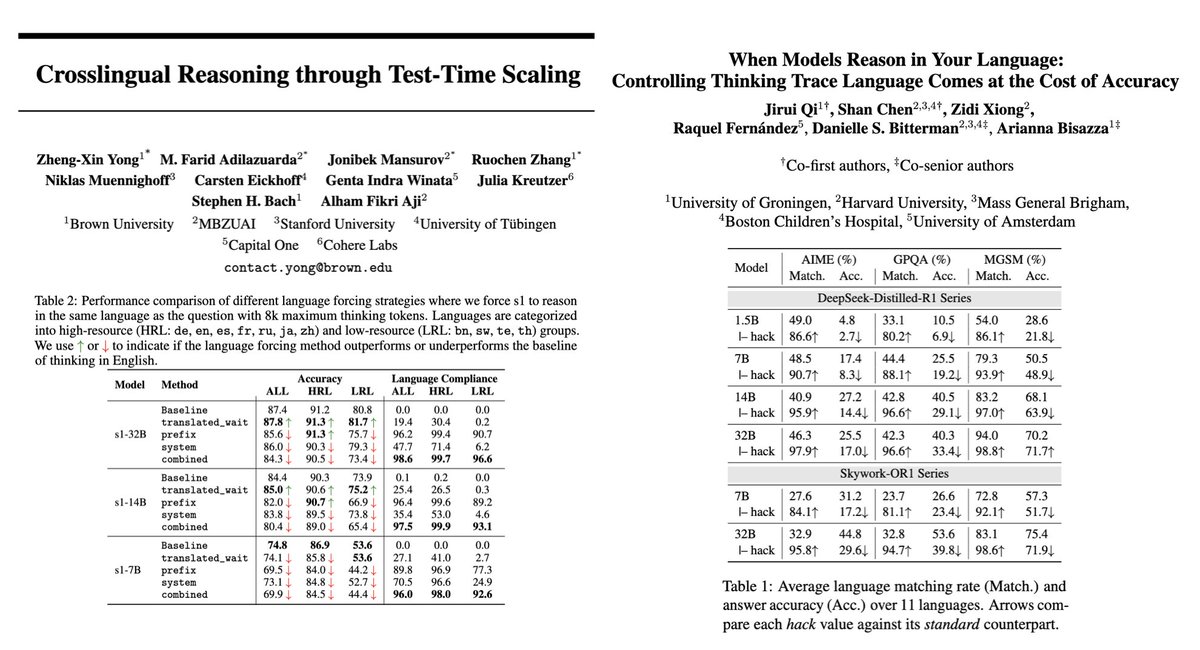

⭐️ Reasoning LLMs trained on English data can think in other languages. Read our paper to learn more! Thank you Yong Zheng-Xin (Yong) for leading the project and team! It was an exciting collab! farid, Jonibek Mansurov, Ruochen Zhang, Niklas Muennighoff, Carsten Eickhoff, Julia Kreutzer

![Yong Zheng-Xin (Yong) (@yong_zhengxin) on Twitter photo 📣 New paper!

We observe that reasoning language models finetuned only on English data are capable of zero-shot cross-lingual reasoning through a "quote-and-think" pattern.

However, this does not mean they reason the same way across all languages or in new domains.

[1/N]](https://pbs.twimg.com/media/GqgyX4mWMAAbdQ9.png)