Michael Zollhoefer

@mzollhoefer

I am a Director, Research Scientist in the Codec Avatar Lab (@RealityLabs @Meta) in Pittsburgh working on fully immersive remote communication and interaction.

ID: 1355287783326310402

http://zollhoefer.com/ 29-01-2021 22:52:53

108 Tweets

3.3K Followers

236 Following

Drivable 3D Gaussian Avatars paper page: huggingface.co/papers/2311.08… We present Drivable 3D Gaussian Avatars (D3GA), the first 3D controllable model for human bodies rendered with Gaussian splats. Current photorealistic drivable avatars require either accurate 3D registrations during

Looking forward to the next seminar by Michael Zollhoefer! Michael and his team at Reality Labs Research have developed insanely cool technology on Codec Telepresence! Students at UMD Department of Computer Science UMD Center for Machine Learning UMIACS, don't miss the talk!

HybridNeRF: Efficient Neural Rendering via Adaptive Volumetric Surfaces paper page: huggingface.co/papers/2312.03… Neural radiance fields provide state-of-the-art view synthesis quality but tend to be slow to render. One reason is that they make use of volume rendering, thus requiring

Haithem Turki is also presenting PyNeRF at NeurIPS Conference 2023 next week. Talk to him to find out more! :) x.com/RadianceFields…

(1/3) 𝐕𝐨𝐱𝐞𝐥 𝐇𝐚𝐬𝐡𝐢𝐧𝐠 received the Test-of-Time Award SIGGRAPH Asia ➡️ Hong Kong! What an honor together with Michael Zollhoefer Shahram Izadi @mcstammi Voxel hashing is a sparse & efficient data structure for 3D scenes/grids! What's the core idea and why is it still relevant today? ⬇️

The "Edge Gradients" paper was accepted to #ECCV2024 🥳! European Conference on Computer Vision #ECCV2026 "Rasterized Edge Gradients: Handling Discontinuities Differentiably" arxiv.org/abs/2405.02508

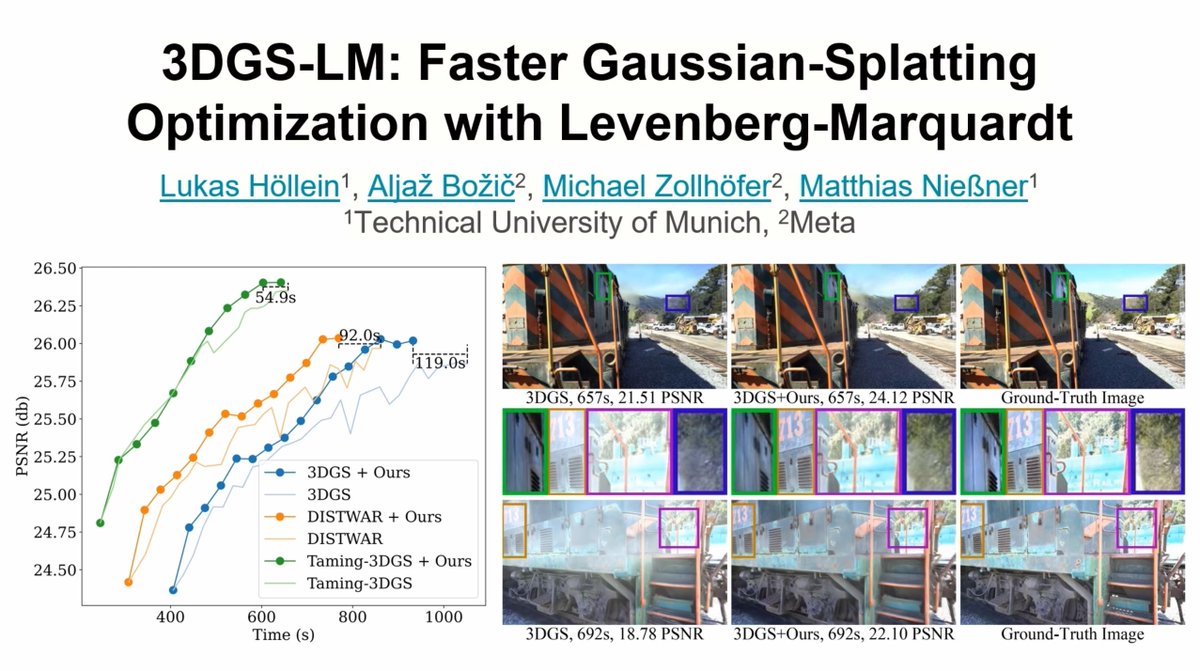

If you'd like to accelerate 3DGS training, check out recent work by Lukas Höllein leveraging the Levenberg-Marquardt algorithm!

I'm excited to present "Fillerbuster: Multi-View Scene Completion for Casual Captures"! This is work with my amazing collaborators Norman Müller, Yash Kant, Vasu Agrawal, Michael Zollhoefer, Angjoo Kanazawa, Christian Richardt during my internship at Meta Reality Labs. ethanweber.me/fillerbuster/