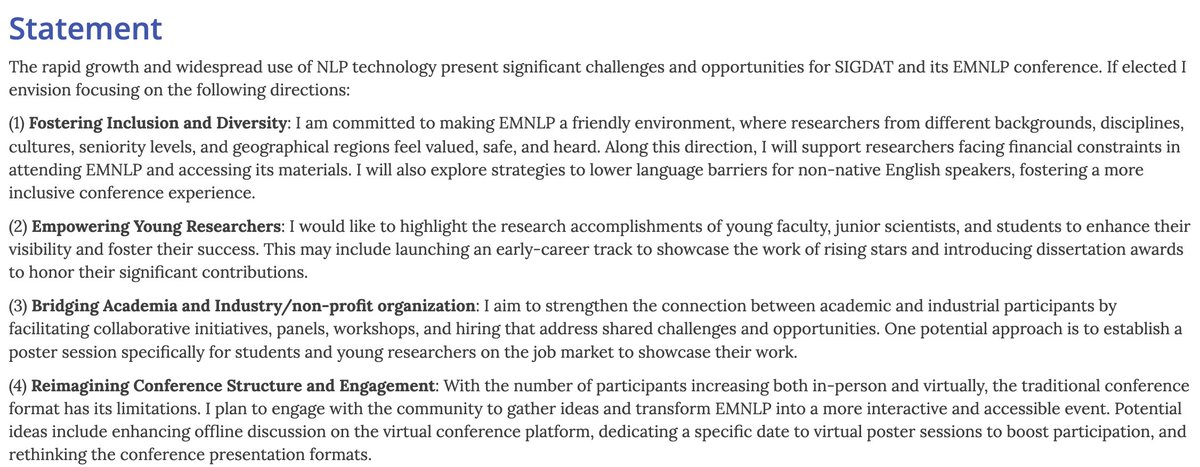

Liunian Harold Li

@liliunian

ID: 1160794781884149760

https://liunian-harold-li.github.io/

12-08-2019 06:06:57

121 Tweets

841 Followers

469 Following

Excited to share LRM (Large Reconstruction Model), which views 2D->3D as a multimodal problem and learns a transformer from large-scale data. It is a cornerstone of our 3D foundation model efforts at Adobe Research. Nice work by our intern (and upcoming full-time hire) Yicong Hong.

📢 📽✍️ We introduce VideoCon, a video-text dataset for training a SOTA alignment model. It resolves a typical issue in video-text alignment models: they struggle with robustness. w/ leah bitton, Idan Szpektor, Kai-Wei Chang, Aditya Grover video-con.github.io 🧵 1/

Check out this awesome work on early pandemic detection by Tanmay Parekh and his team using NLP tools!

I am thrilled to share that I will join the Department of Computer Science and Engineering at Texas A&M University as an Assistant Professor in Fall 2024. Many thanks to my advisors, colleagues, and friends for their support and help. I'm really excited about the new journey at College Station!

📢 I’m recruiting PhD students at UVA Computer Science for Fall 2025! 🎯 Neurosymbolic AI, probabilistic ML, trustworthiness, AI for science. See my website for more details: zzeng.me 📬 If you're interested, apply and mention my name in your application: engineering.virginia.edu/department/com…

Sora is here for Plus and Pro users at no additional cost! Pushing the boundaries of visual generation will require breakthroughs in both ML and HCI. Really proud to have worked on this brand-new product with Bill Peebles, Rohan Sahai, Connor Holmes, and the rest of the Sora team!