Junfei HU

@junfeih

PhD in Linguistics | Postdoc @UCLouvain_be | Multimodality in Interaction

ID: 1037707029157236737

06-09-2018 14:20:10

290 Tweets

295 Followers

746 Following

Out now Trends in Cognitive Sciences: ᴘʀᴇᴅɪᴄᴛɪᴏɴ ɪɴ ʟᴀɴɢᴜᴀɢᴇ ᴄᴏᴍᴘʀᴇʜᴇɴꜱɪᴏɴ: ᴡʜᴀᴛ’ꜱ ɴᴇxᴛ? @mante_nieuwland & I review the lit. on predictive processing in comprehension & highlight some big open questions that we hope can help us uncover underlying mechanisms. 🧵👇

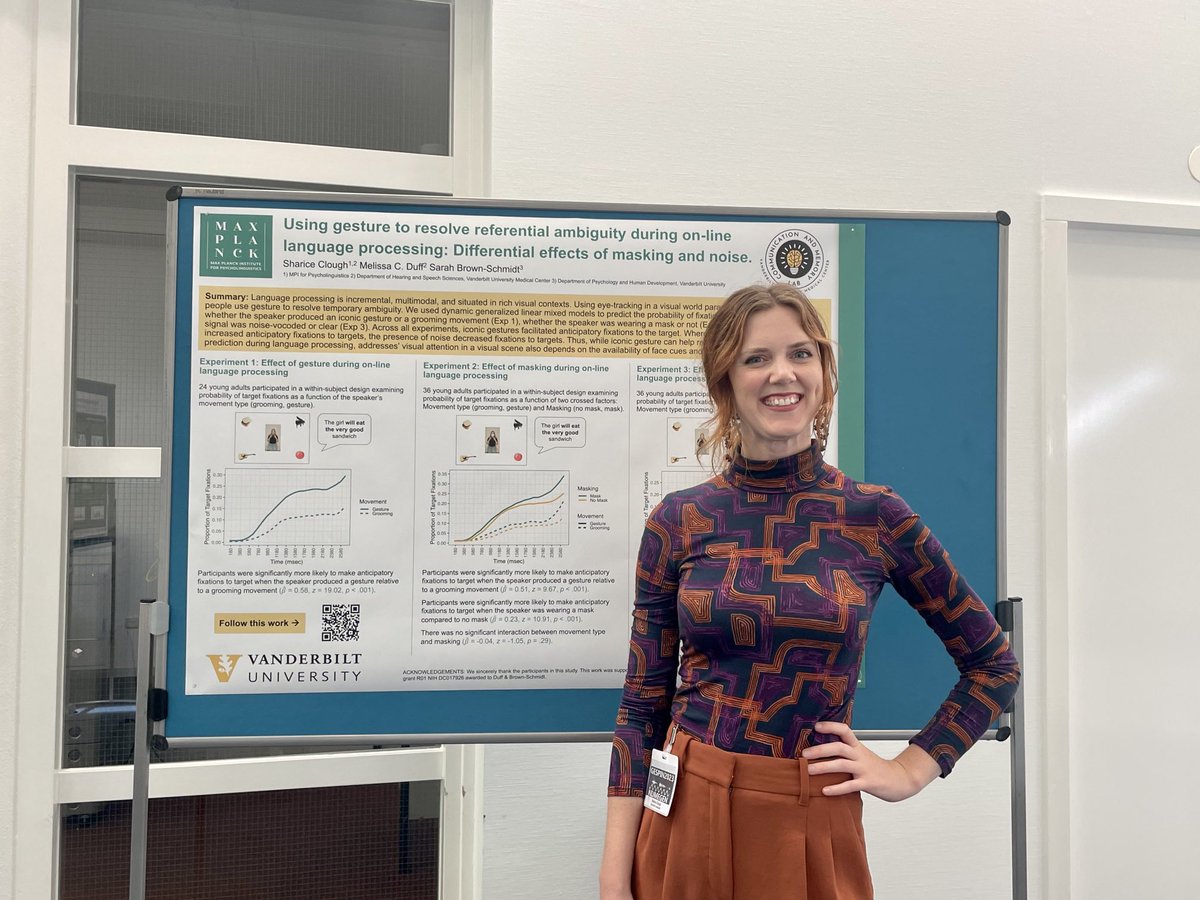

Really enjoyed presenting work on speech-gesture integration during multimodal language processing using eye-tracking in visual world paradigm with Melissa Duff and Sarah Brown-Schmidt at #GESPIN2023 today. Thanks to everyone who stopped by for the great discussion 🙌🏻🤗

So happy to see this paper is finally out!! Thanks to Liesbeth Degand for the enduring support. COBRA Network

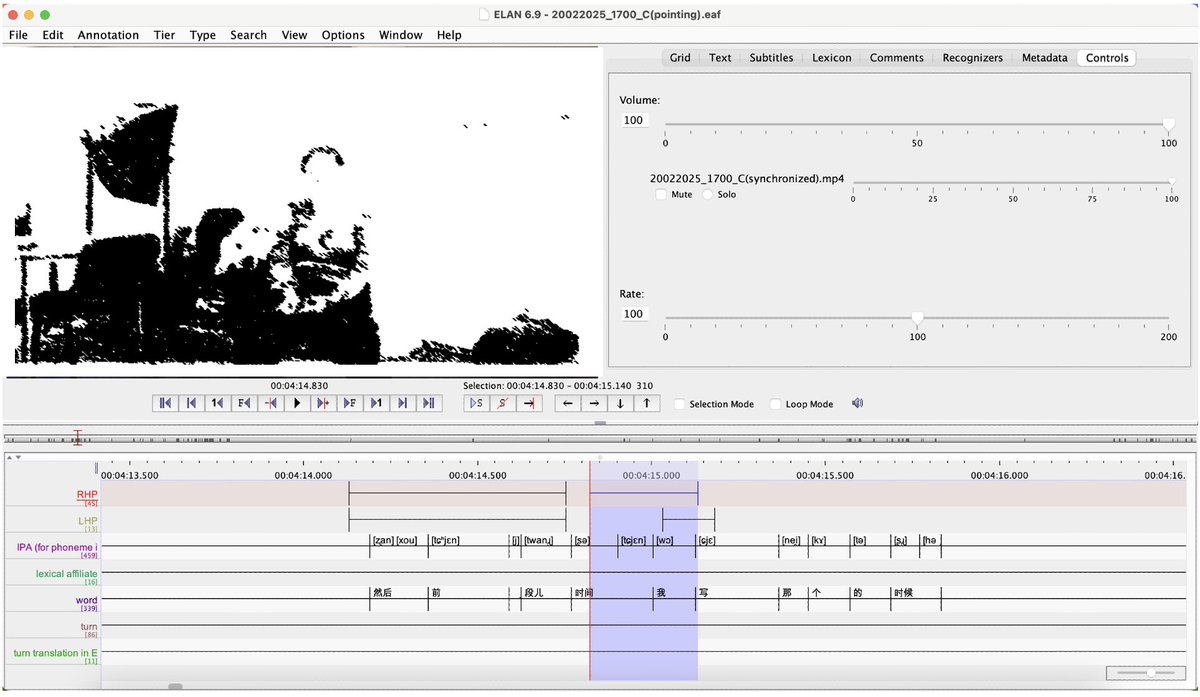

It has been a long journey, but this nice collaborative work led by Sergio Osorio together with @LanguageCycles Benjamin Straube has been freshly accepted at Language, Cognition, and Neuroscience. osf.io/preprints/psya…

Do we interpret multimodal utterances in a Gestalt-like way, where the whole is different than the parts? Or do visual signals contribute predictable, separable meaning to speech? Judith Holler & I experimentally tested this using #Metahuman virtual agents doi.org/10.1038/s41598…

🔔📣📣 Paper alert ‼️ Our take on extending Jackendoff’s Parallel Architecture to multimodality. Many thanks to Giosuè Baggio and @visual_linguist as main editors of the paper. onlinelibrary.wiley.com/doi/full/10.11…

If you’re interested in the topic, we warmly invite you to submit a contribution! Theme📌: Multimodal forward-communication Deadline📅: June 15 2025 Contact📧: [email protected] Location📍: Edmonton, Canada linguistlist.org/issues/36/1207/

👉New publication on the topic of #experimentalpragmatics! The role of #intonation in recognizing speaker's #communicativefunction! Rising vs falling pitch!🎙️ Work led by Caterina Villani (she/her) with @isabellaboux.bsky.social and #FPulvermüller shorturl.at/NDXFM Brain Language Lab