Jennifer White

@jennifercwhite

PhD Student at the University of Cambridge working on NLP

ID: 1212308102344663046

http://jennifercwhite.com

01-01-2020 09:42:38

52 Tweets

368 Followers

156 Following

As my internship at @MetaAI comes to an end, I want to say a big thank you to my host Adina Williams, as well as Dieuwke Hupkes and Shubham Toshniwal. It's been great having the opportunity to work with you and hopefully there will be chances for more collaboration in the future 😊

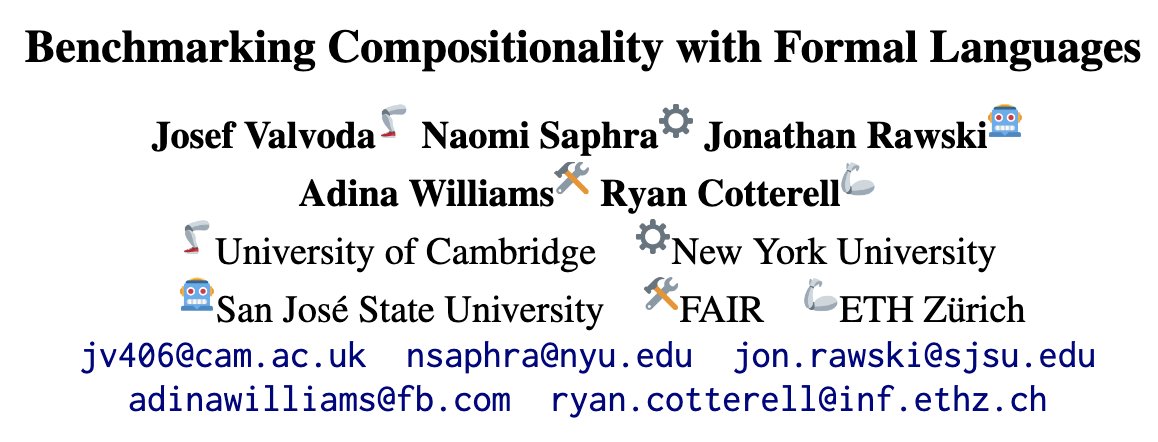

To what extent do neural networks learn compositional behaviour? Together with Naomi Saphra, Jon Rawski, @ryandcotterell and Adina Williams, we take a lesson from formal language theory to answer this question. arxiv.org/abs/2208.08195

A recent paper by Iddo Drori claimed GPT-4 can score 100% on MIT's EECS curriculum with the right prompting. My friends and I were excited to read the analysis behind such a feat, but after digging deeper, what we found left us surprised and disappointed. dub.sh/gptsucksatmit 🧵

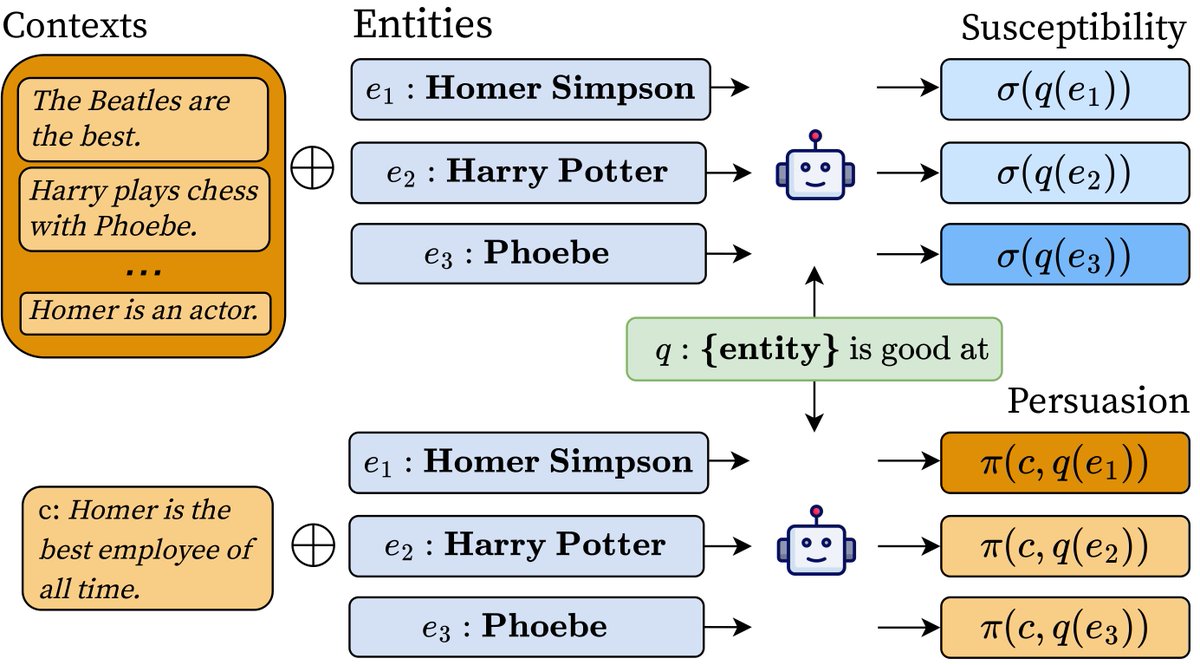

How much does an LM depend on information provided in context vs. its prior knowledge? Check out how Vésteinn Snæbjarnarson, Niklas Stoehr, Jennifer White, Aaron Schein, @ryandcotterell + I answer this by measuring a *context's persuasiveness* and an *entity's susceptibility* 🧵