Jane Pan

@janepan_

CS PhD at @nyuniversity, @NSF GRFP, @Deepmind Fellowship, @SiebelScholars | @Princeton @Princeton_nlp '23 | @Columbia '21.

ID: 1626252765206036481

16-02-2023 16:10:55

19 Tweets

349 Followers

131 Following

Replying to Tal Linzen, Ekin Akyürek, Yoav Artzi, and Neel Nanda: I really like the paper from Jane Pan (w Danqi Chen) abt this: arxiv.org/abs/2305.09731. ICL in big models is clearly a mix of task recognition and "real learning" (you're not learning to translate from 3 examples, but you're not getting an arbitrary label mapping from the prior)

Do reasoning models know when their answers are right?🤔 Really excited about this work led by Anqi and Yulin Chen. Check out this thread below!

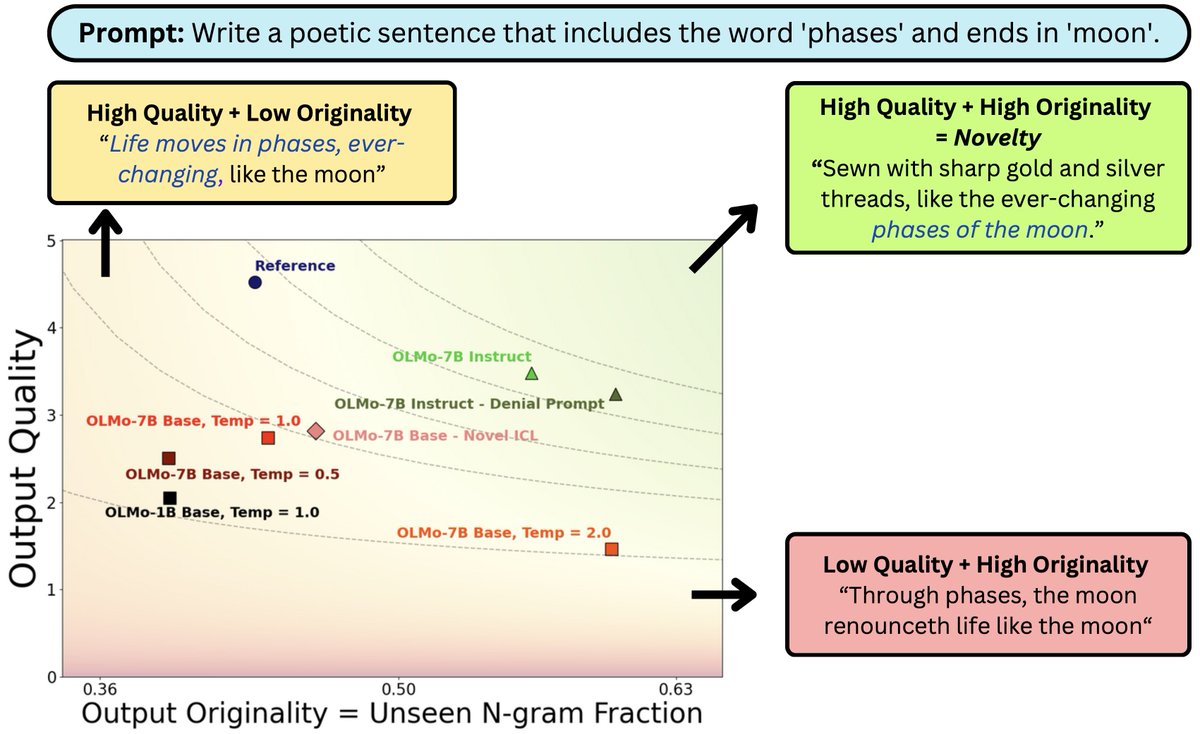

What does it mean for #LLM output to be novel? In work w/ John (Yueh-Han) Chen, Jane Pan, Valerie Chen, He He we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵