InveniaLabs

@invenialabs

Our team uses machine learning to address real-world problems. Right now, we’re putting ML into practice by optimising electricity grids to lower pollution.

ID: 882011565196816384

https://www.invenia.ca/labs/ 03-07-2017 23:01:58

65 Tweets

555 Followers

110 Following

The talks at #JuliaCon 2021 have been great so far! Today, keep an eye out for Frames Catherine White's lightning talk on ExprTools: Metaprogramming from reflection at 20:20 UTC pretalx.com/juliacon2021/t…

Join this Twitter Space today to hear top tips from our very own Invenians on working with the Julia Language.

Thanks to Logan Kilpatrick for facilitating a great conversation on getting a job programming in the Julia Language, and to everyone who attended. If you're interested in joining Invenia Labs, check out our positions here: joininvenia.com

The prior and posterior of BNNs are well understood as width → ∞. But what about mean-field variational inference? For odd activations, MFVI converges to the prior as width → ∞! arxiv.org/pdf/2202.11670… #AISTATS2022 w/ Beau Coker, @BurtDavidR, Weiwei Pan, Finale Doshi-Velez

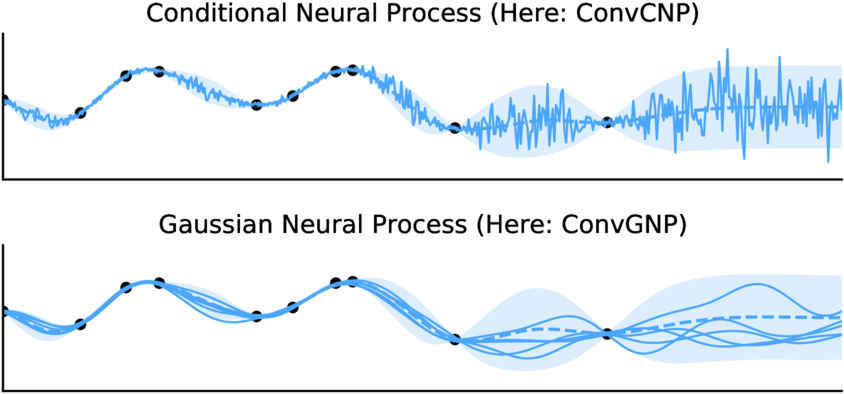

Still using latent variables to get correlations out of your Neural Process? Then consider Gaussian Neural Processes (GNP)! ✓ Correlated predictions ✓ Tractable likelihood Stratis Markou, James Requeima, Wessel, Anna Vaughan & Rich Turner #ICLR2022 arxiv.org/abs/2203.08775

Congratulations to our very own Research Software Engineer Frames Catherine White for her JuliaCon 2025 community prize!

New paper alert: A General Stochastic Optimization Framework for Convergence Bidding from Letif Mones and Sean Lovett, available here: arxiv.org/abs/2210.06543