Reinhard Heckel

@heckelreinhard

Associate Professor at Technical University of Munich and Adjunct Faculty at Rice University

ID: 1105251371220004864

11-03-2019 23:37:16

58 Tweets

469 Followers

303 Following

Yes, impressive artificial intelligence requires vast amounts of data and enormous computing power. But that alone is not enough. What everyone needs to know about this technology now. A guest article by Reinhard Heckel faz.net/aktuell/wirtsc…

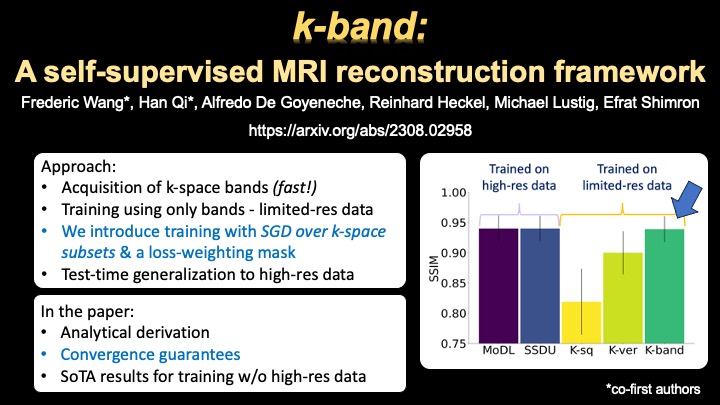

Exciting news: our k-band paper is out! arxiv.org/abs/2308.02958 k-band is a framework for self-supervised MRI reconstruction w/o fully sampled high-res data. Very proud of our team's work! Co-first authors: Frederic Wang & Han Qi. Colleagues: Alfredo De Goyeneche, Reinhard Heckel, Miki Lustig

Join us at the Graph ML Meetup in Madrid of the Learning on Graphs Conference 2023 from November 27th to 29th, 2023! Keynote talks by Reinhard Heckel, Ivan Dokmanić, and Xiaowen Dong, along with talks and poster sessions. The call is open until November 8th! logmeetupmadrid.github.io

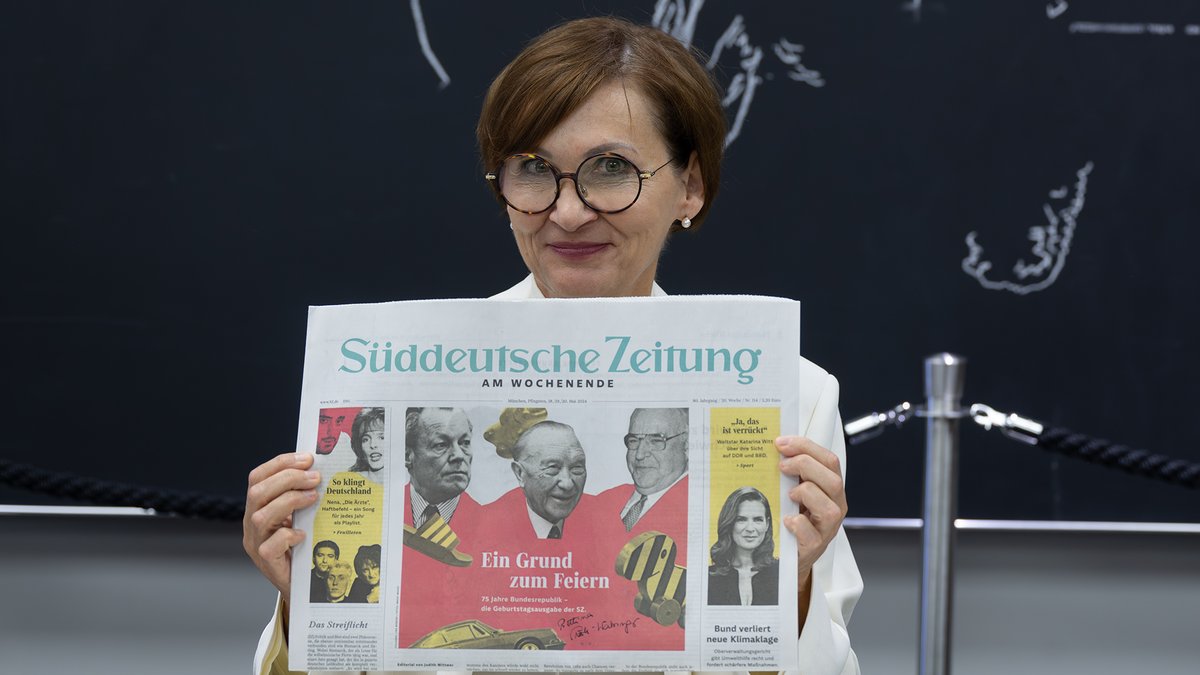

This fountain pen has a lot inside it: the #Grundgesetz (Basic Law). The ink contains #DNA in which our #Verfassung (constitution) is encoded. Federal Research Minister Bettina Stark-Watzinger enthusiastically accepted the gift from the art project "DNA unserer Verfassung" ("DNA of our Constitution"). #75JahreGrundgesetz

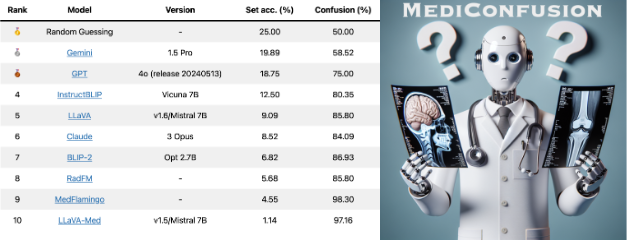

🚨 Introducing MediConfusion: A new challenging VQA benchmark for Medical MLLMs! 🚨 All available models score below random guessing on MediConfusion, raising serious concerns about their reliability for healthcare deployment. With Shahab, Zalan Fabian, Maryam Soltanolkotabi 🧵 1/6