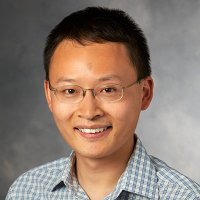

hazyresearch

@hazyresearch

A research group in @StanfordAILab working on the foundations of machine learning & systems. hazyresearch.stanford.edu Ostensibly supervised by Chris Ré

ID: 747538968

http://cs.stanford.edu/people/chrismre/ 09-08-2012 16:46:27

1.1K Tweets

8.8K Followers

1.1K Following

Happy Throughput Thursday! We’re excited to release Tokasaurus: an LLM inference engine designed from the ground up for high-throughput workloads with large and small models. (Joint work with Ayush Chakravarthy, Ryan Ehrlich, Sabri Eyuboglu, Bradley Brown, Joseph Shetaye,

In the test-time scaling era, we would all love a higher-throughput serving engine! Introducing Tokasaurus, an LLM inference engine for high-throughput workloads with large and small models! Led by Jordan Juravsky, in collaboration with hazyresearch and an amazing team!

Scale alone is not enough for AI data. Quality and complexity are equally critical. Excited to support all of these for LLM developers with Snorkel AI Data-as-a-Service, and to share our new leaderboard! — Our decade-plus of research and work in AI data has a simple point: