Harry Coppock

@harrycoppock

No. 10 Downing Street Innovation Fellow | Research Scientist at AISI | Visiting Lecturer at Imperial College London

Working on AI Evaluation and AI for Medicine

ID: 1370429239711969281

12-03-2021 17:39:47

184 Tweets

190 Followers

319 Following

We're at #icml2024. If you want to chat about our work or roles, message Herbie Bradley (predictive evals), Tomek Korbak (safety cases), Jelena Luketina (agents), Cozmin Ududec (testing), Harry Coppock (cyber evals + AI for med) or Olivia Jimenez @ ICML (recruiting).

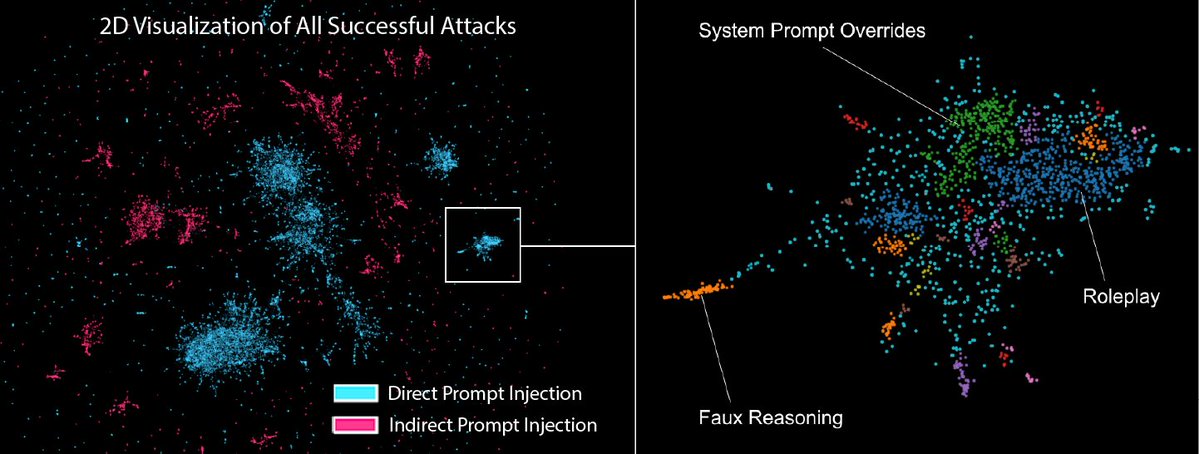

AISI is co-hosting DEF CON's generative red teaming challenge this year! Huge thanks to Sven Cattell, AI Village @ DEF CON and DEF CON for making this happen. (1/6)

Jailbreaking evals ~always focus on simple chatbots. Excited to announce AgentHarm, a dataset for measuring harmfulness of LLM 𝑎𝑔𝑒𝑛𝑡𝑠 developed at @AISafetyInst in collaboration with Gray Swan AI! 🧵 1/N

Brace Yourself: Our Biggest AI Jailbreaking Arena Yet. We're launching a next-level Agent Red-Teaming Challenge: not just chatbots anymore. Think direct & indirect attacks on anonymous frontier models. $100K+ in prizes and raffle giveaways, supported by the UK AI Security Institute.

My team at the AI Security Institute is hiring! I think this is one of the most important times in history to have strong technical expertise in government. Join our team understanding and fixing weaknesses in frontier models through SOTA adversarial ML research & testing. 🧵 1/4

How can open-weight Large Language Models be safeguarded against malicious uses? In our new paper with EleutherAI, we find that removing harmful data before training can be over 10x more effective at resisting adversarial fine-tuning than defences added after training 🧵

This is great news for the UK. Having worked with Jade over the past 2 years setting up the AI Security Institute, I am confident that there are very few, if any, who are better placed to take on this role.

Since I started working on safeguards, we've seen substantial progress in defending certain hosted models, but less progress in measuring & managing misuse risks from open weight models. Three directions I want explored more, drawn from our AI Security Institute post today 🧵