Goku Mohandas

@GokuMohandas

ML @anyscalecompute + @raydistributed ← 🌏 Founder @MadeWithML (acq) ← ⚕️ ML Lead @Ciitizen (acq) ← 🍎 ML Engineer @Apple ← 🧬 Bio + ChemE @JohnsHopkins

ID:3259586191

https://madewithml.com/ 29-06-2015 04:21:48

1.0K Tweets

14.1K Followers

115 Following

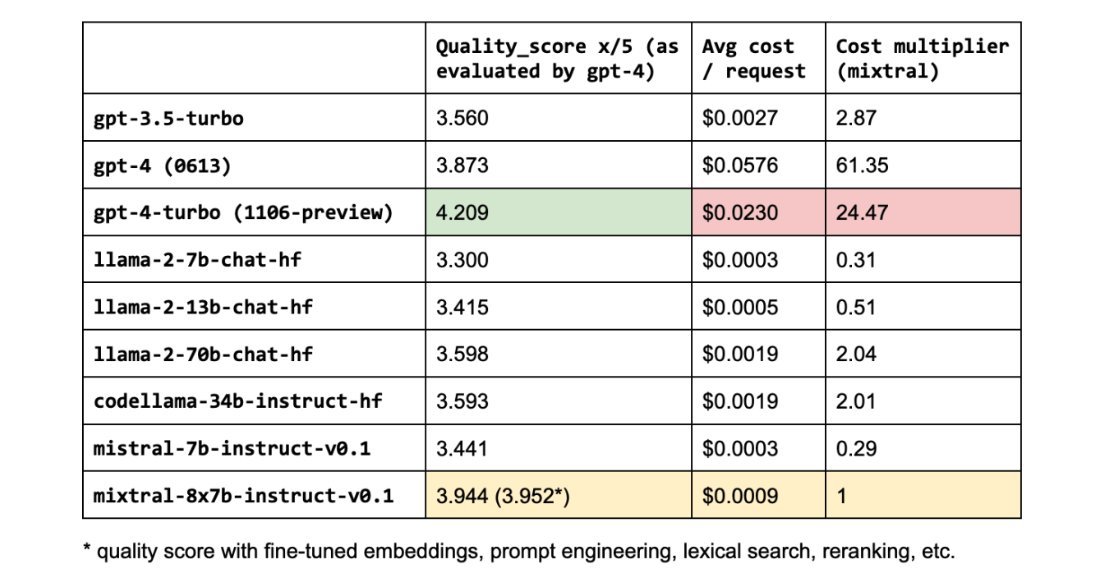

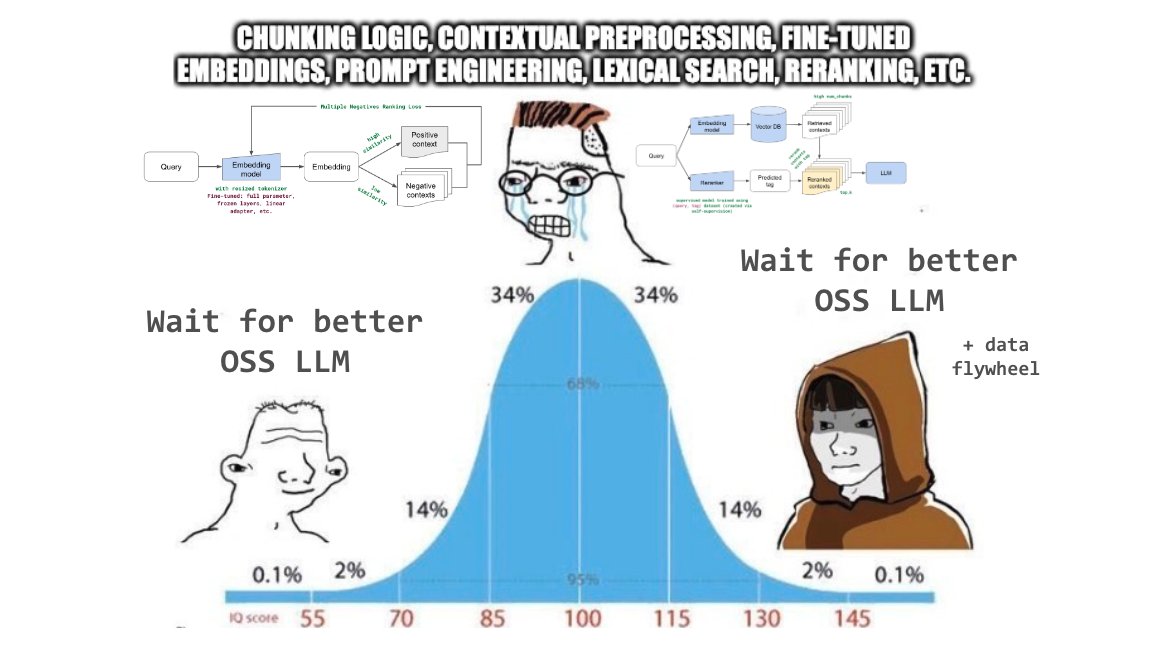

I’ve read dozens of articles on building RAG-based LLM Applications, and this one by Goku Mohandas and Philipp Moritz from Anyscale is the best by far.

If you’re curious about RAG, do yourself a favor by studying this. It will bring you up to speed 🔥

anyscale.com/blog/a-compreh…

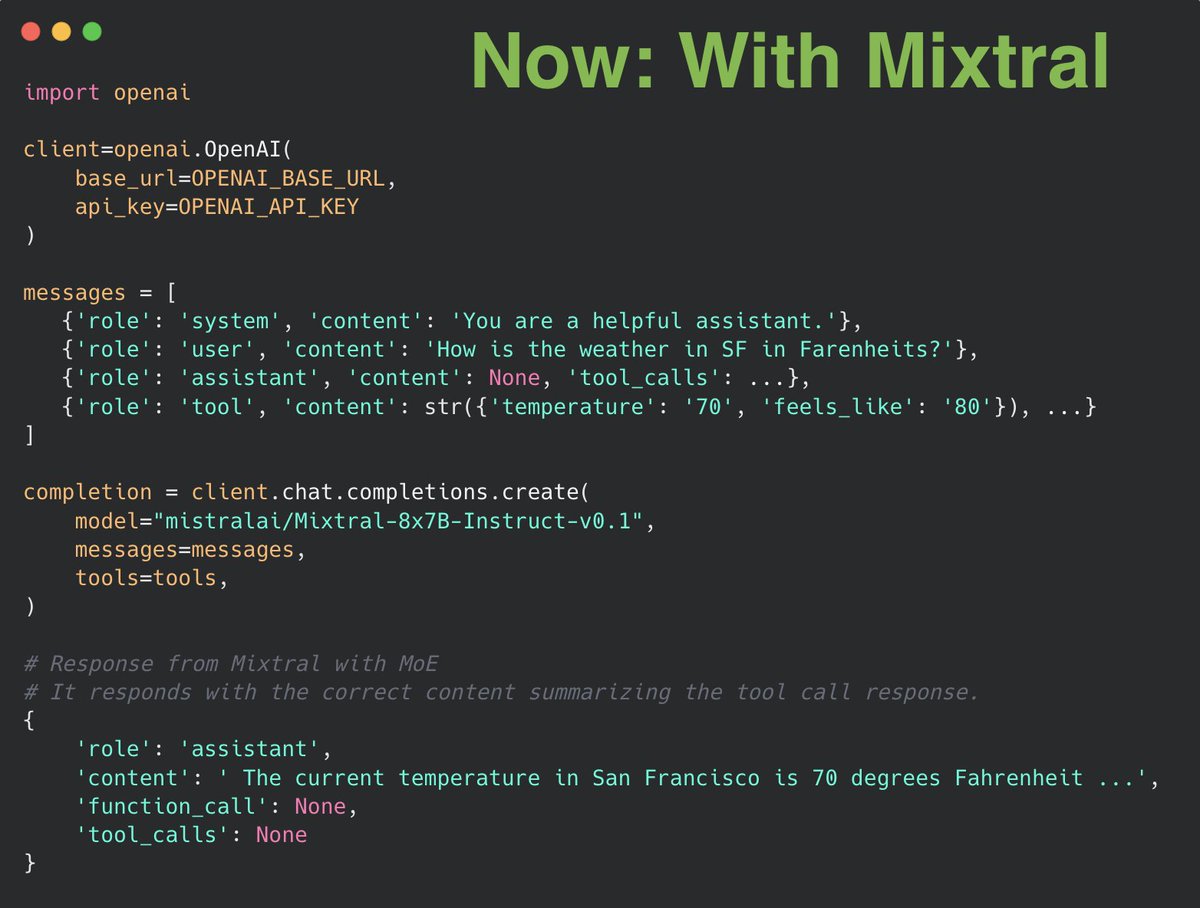

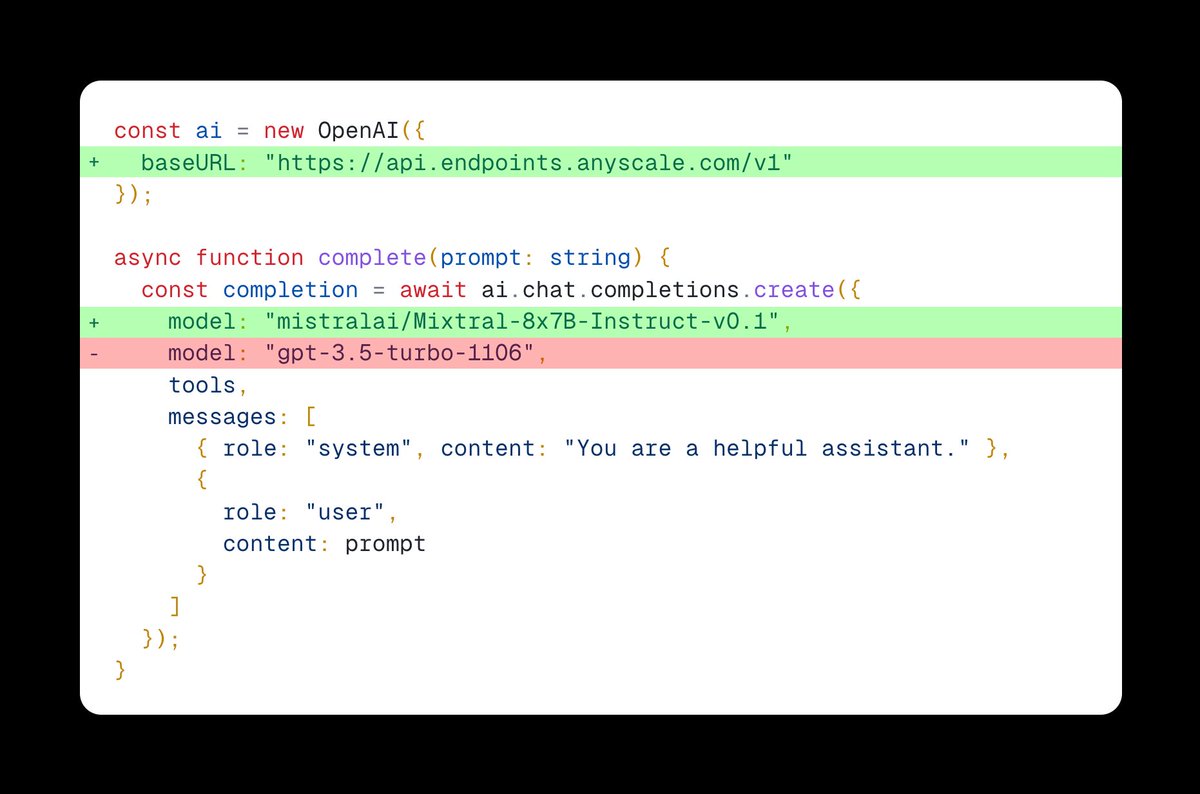

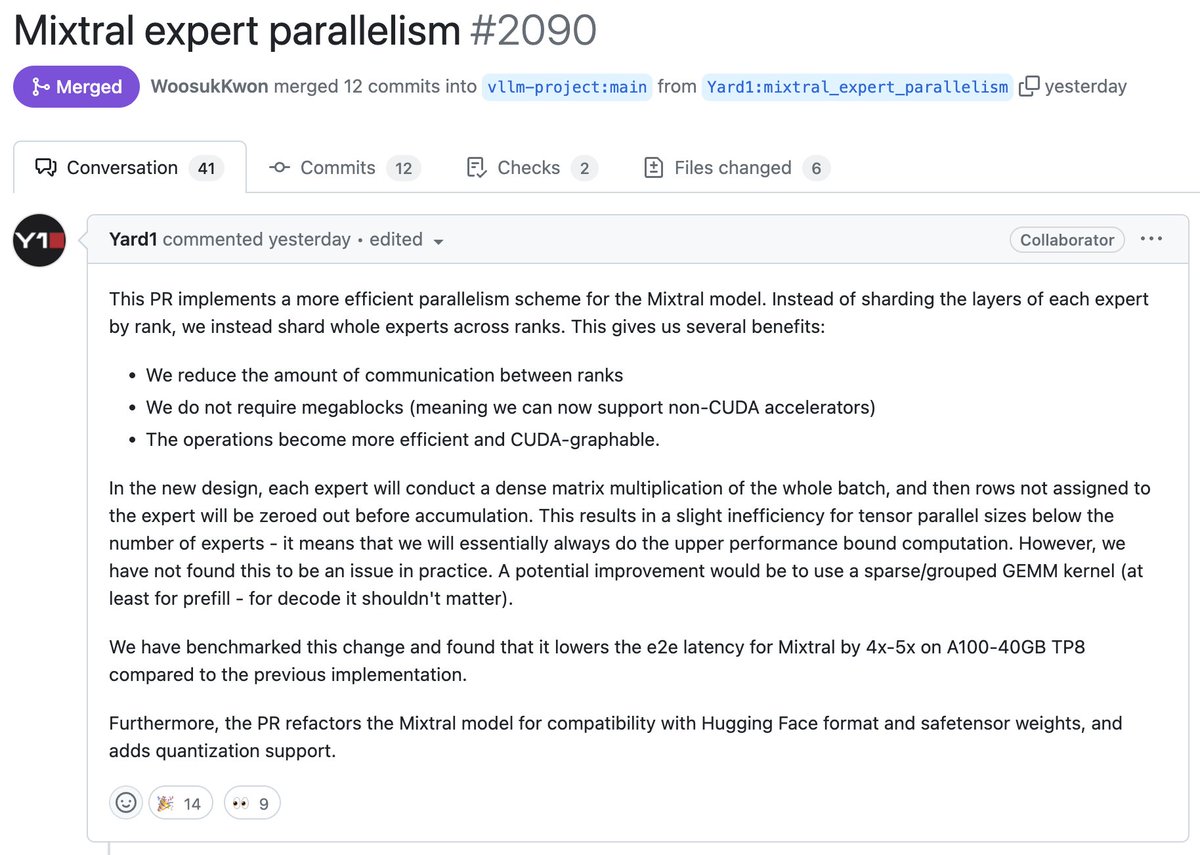

🔥 Mixtral-8x7B JSON Mode and Function Calling API is now available on Anyscale Endpoints!

Empirically, we observed noticeable improvements in Mixtral MoE's responses to tool messages compared to Mistral AI 7B. 🚀 👇

Try it out: app.endpoints.anyscale.com