Gatsby Computational Neuroscience Unit

@gatsbyucl

We study mathematical principles of learning, perception & action in brains & machines. Funded by Gatsby Charitable Foundation.

Also on Bluesky & Mastodon.

ID: 1177566963180167168

https://www.ucl.ac.uk/gatsby/ 27-09-2019 12:55:34

570 Tweets

5.5K Followers

176 Following

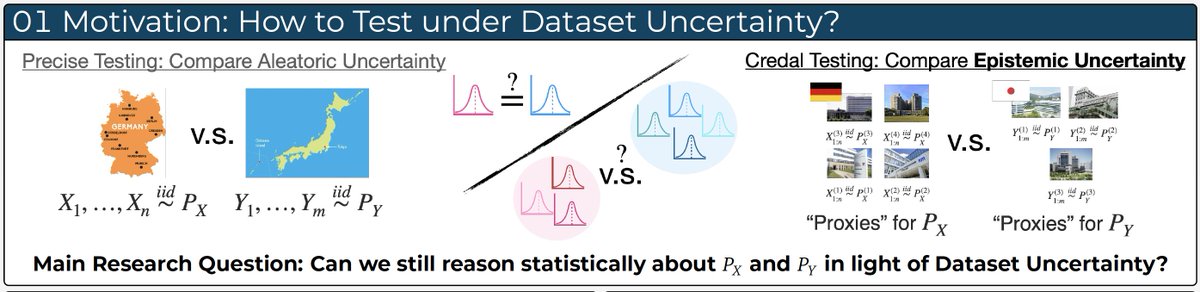

📍 I’ll be presenting our AISTATS 2025 paper “Credal Two-sample Tests of Epistemic Uncertainty” this week in Phuket! Joint work with the amazing Antonin Schrab, Arthur Gretton, Dino Sejdinovic, and JUNJIRA MUANDET 🙌 paper: arxiv.org/pdf/2410.12921 video: youtu.be/Rq9qW0GZJeE

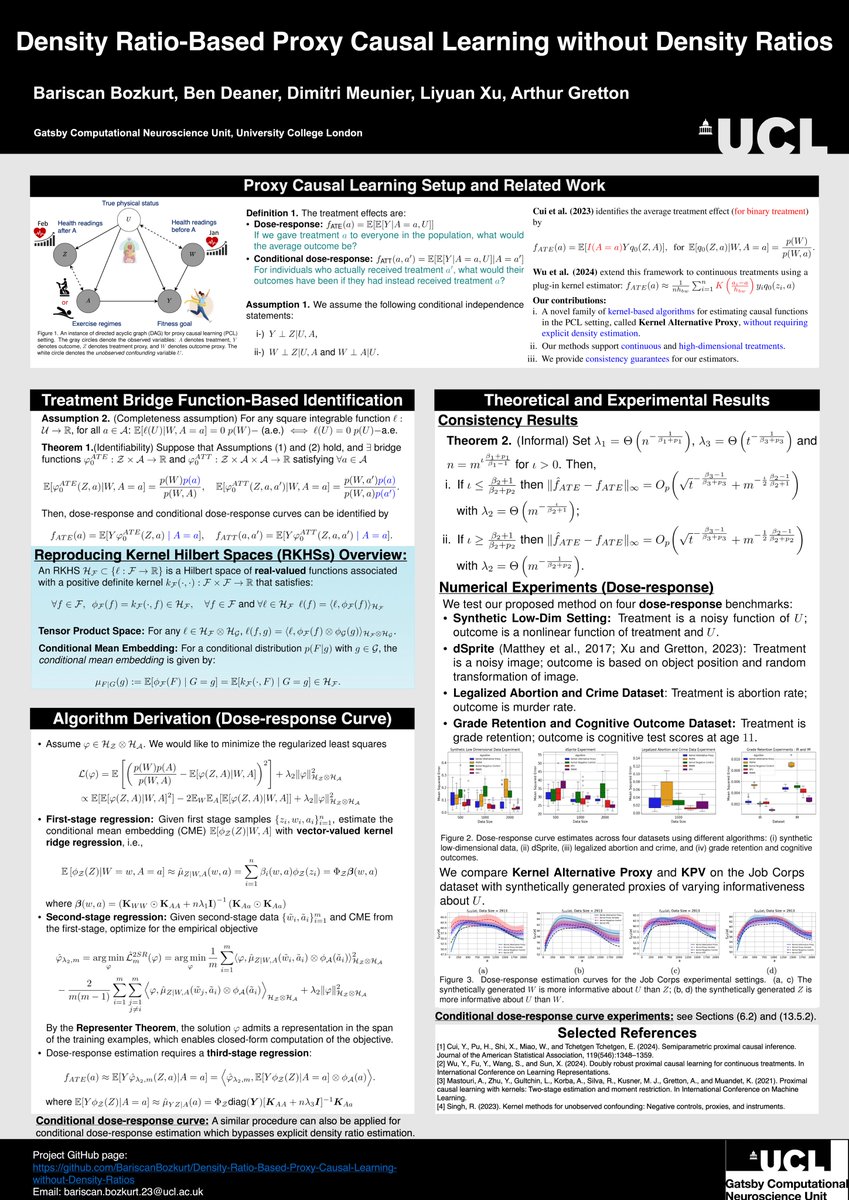

Density Ratio-based Proxy Causal Learning Without Density Ratios 🤔 at #AISTATS2025. An alternative bridge function for proxy causal learning with hidden confounders. arxiv.org/abs/2503.08371 Bariscan Bozkurt, Ben Deaner, Dimitri Meunier, LY9988

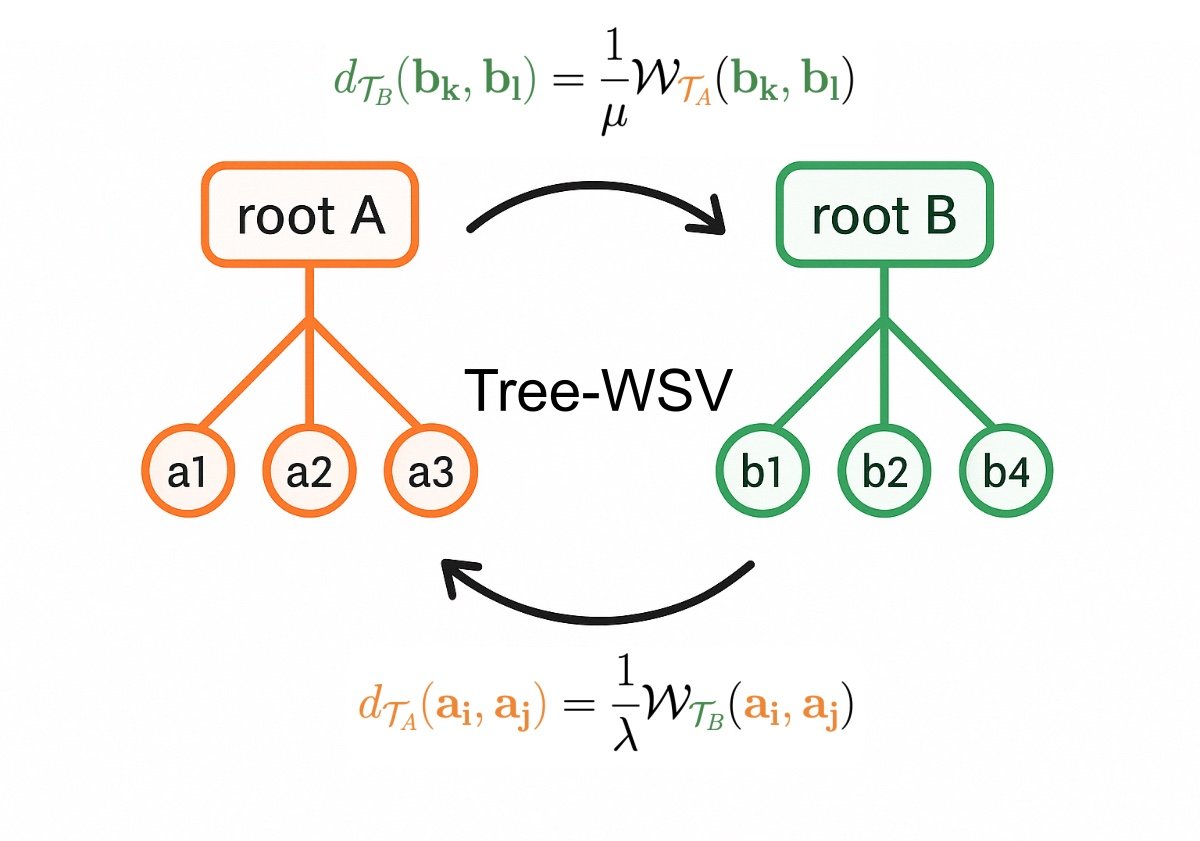

Credal Two-Sample Tests of Epistemic Uncertainty at #AISTATS25. Compare credal sets: convex sets of probability measures whose elements capture aleatoric uncertainty, while the set itself represents epistemic uncertainty. arxiv.org/abs/2410.12921 Siu Lun Chau, Antonin Schrab, Dino Sejdinovic, Krikamol (Hiring Postdoc)

Our paper just came out in PRX! Congrats to Nishil Patel and the rest of the team. TL;DR: We analyse neural network learning through the lens of statistical physics, revealing distinct scaling regimes with sharp transitions. 🔗 journals.aps.org/prx/abstract/1…

How does in-context learning emerge in attention models during gradient descent training? Sharing our new Spotlight paper at ICML Conference: Training Dynamics of In-Context Learning in Linear Attention arxiv.org/abs/2501.16265 Led by Yedi Zhang with Aaditya Singh and Peter Latham

Missing ICML due to visa :'(, but looking forward to sharing our ICML paper (arxiv.org/abs/2502.05318) as a poster at #BayesComp, Singapore! Work on symmetrising neural nets for the Schrödinger equation in crystals, with the amazing Zhan Ni, Elif Ertekin, Peter Orbanz and Ryan Adams

Last but not least of my travel updates: Courtesy of the very kind Siu Lun Chau, I'm giving a talk at 14:30, 27 Jun, at NTU College of Computing and Data Science (CCDS) in SG on data augmentation & Gaussian universality, which strings together several works from my PhD. If you're in SG/Lyon in the next few weeks, let me know!