Felix Gimeno

@felixaxelgimeno

Research Engineer at Google DeepMind since 2018

ID: 2645793014

https://orcid.org/0000-0002-1105-048X

14-07-2014 15:52:26

23 Tweets

77 Followers

187 Following

Introducing #AlphaCode: a system that can compete at average human level in competitive coding competitions like Codeforces. An exciting leap in AI problem-solving capabilities, combining many advances in machine learning! Read more: dpmd.ai/Alpha-Code 1/

In Science Magazine, we present #AlphaCode - the first AI system to write computer programs at a human level in competitions. It placed in the top 54% of participants in coding contests by solving new and complex problems. How does it work? 🧵 dpmd.ai/alphacode-scie…

So excited to share what the team and I have been working on these last months! #AlphaCode 2 is powered by Gemini and performs better than 85% of competition participants in 12 contests on Codeforces! More details at goo.gle/AlphaCode2 Google DeepMind

Rémi Leblond koray kavukcuoglu Google DeepMind Amazing things can be built from Gemini. Super proud of the #AlphaCode team -- quite incredible the progress on such a hard coding benchmark!

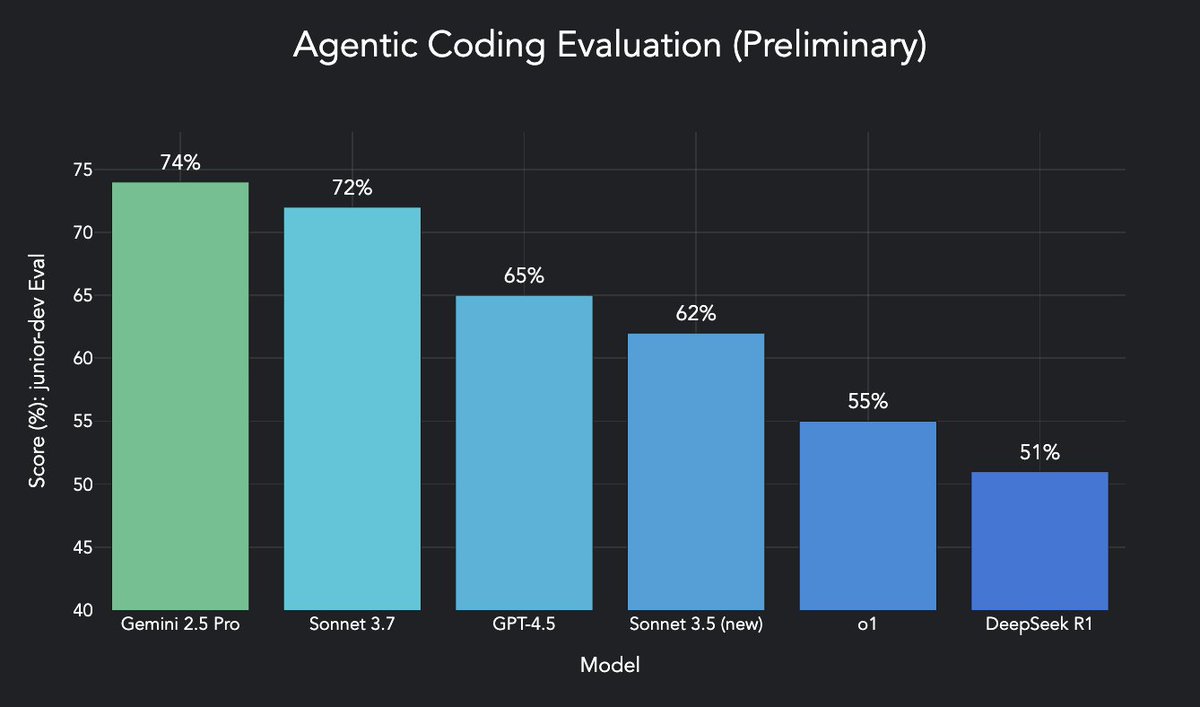

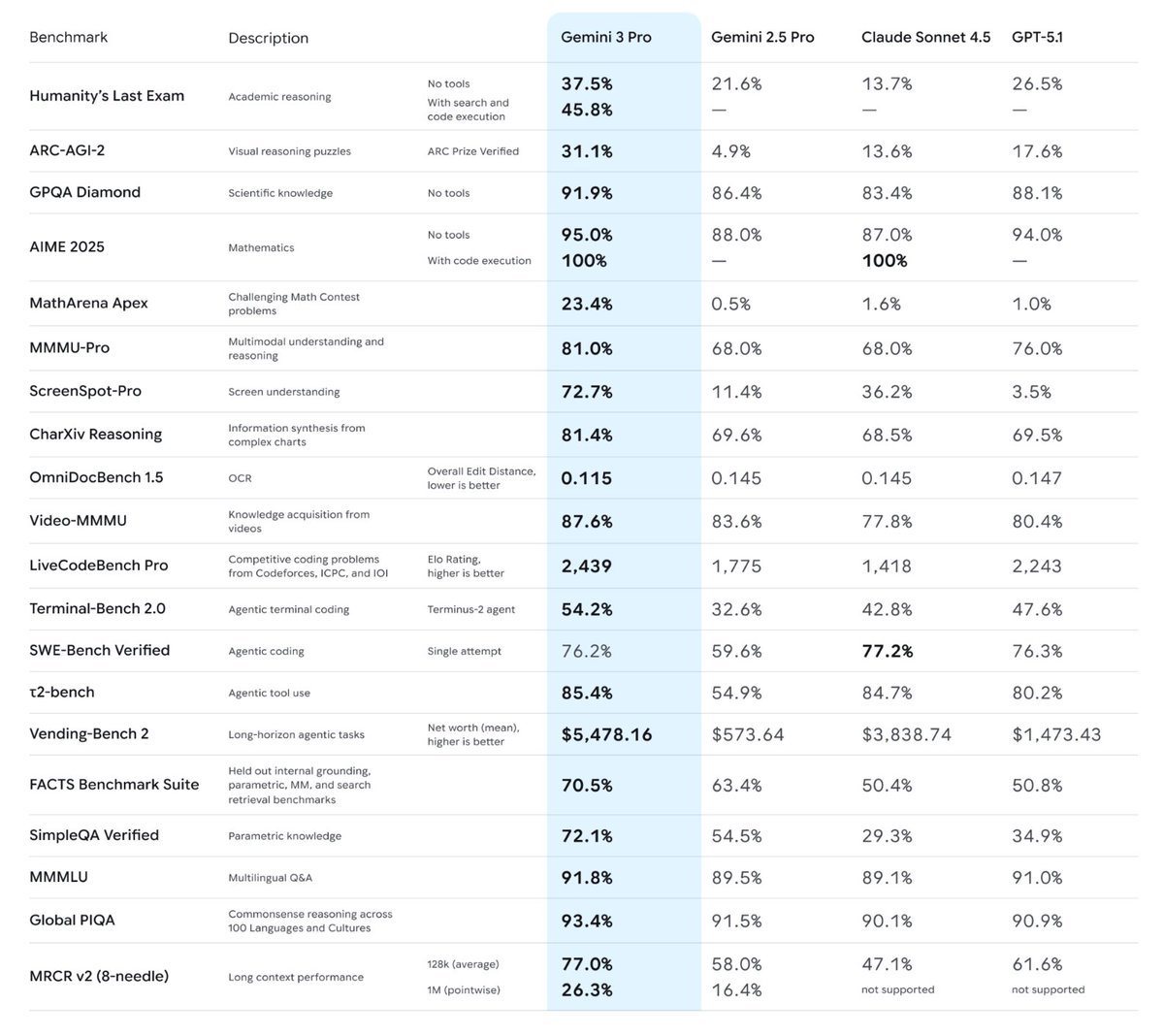

🎉 It's a BIG day for Gemini 2.5: 2.5 Flash and 2.5 Pro are now stable and generally available in AI Studio, Vertex AI, and the Google Gemini App, and we're launching a preview of the new 2.5 Flash-Lite, our most cost-efficient and fastest 2.5 model yet. More info on each model below ⬇️