Elan Rosenfeld

@ElanRosenfeld

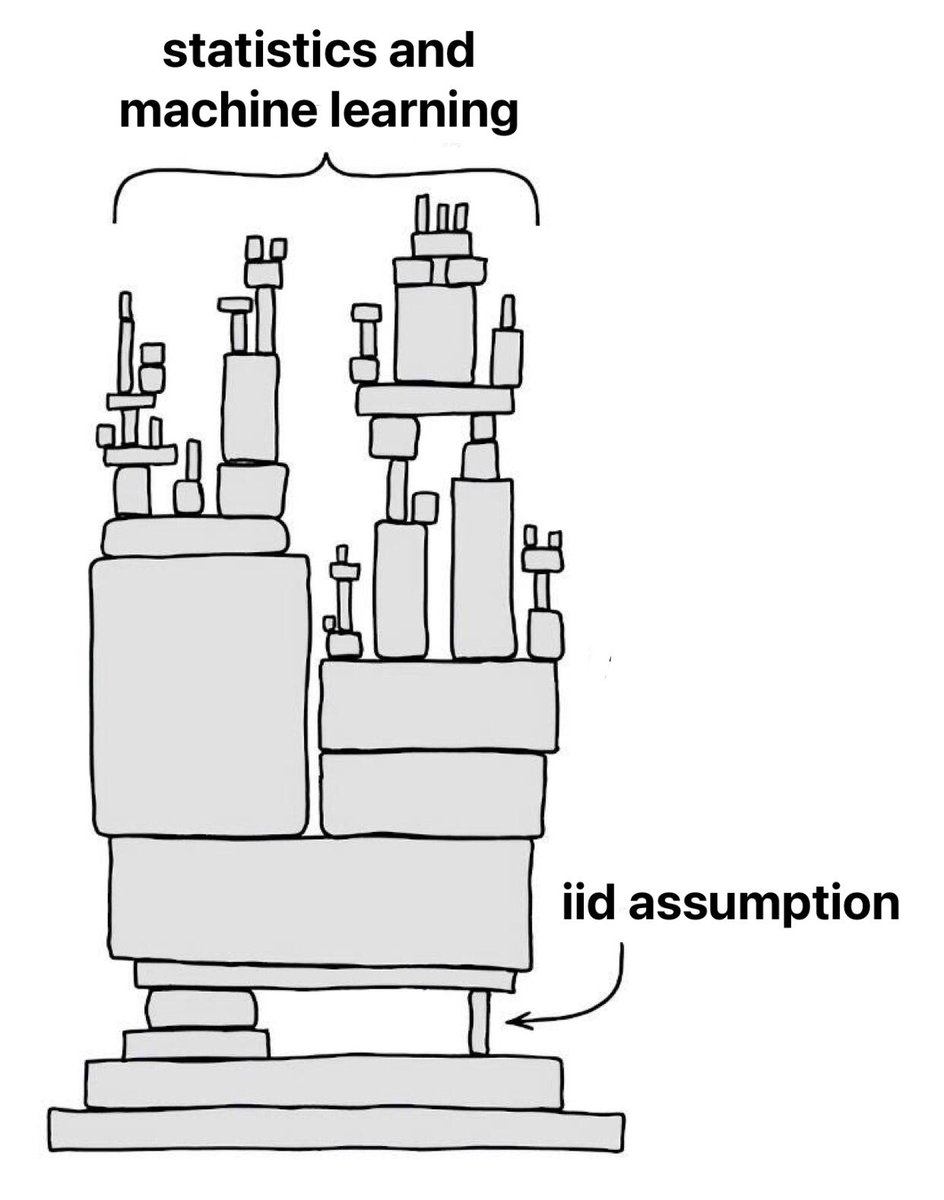

Final-year ML PhD student at CMU working on principled approaches to robustness, security, generalization, and representation learning.

ID:1045447599090794497

http://cs.cmu.edu/~elan

27-09-2018 22:58:25

323 Tweets

1.1K Followers

184 Following

🗣️ “Next-token predictors can’t plan!” ⚔️ “False! Every distribution is expressible as product of next-token probabilities!” 🗣️

In work w/ Gregor Bachmann, we carefully flesh out this emerging, fragmented debate & articulate a key new failure. 🔴 arxiv.org/abs/2403.06963

CARE talks kick off again this week, with Goutham Rajendran talking about learning disentangled representations (portal.valencelabs.com/events/post/le…). It's a really nice paper showing with linear + Gaussian latents we don't need many interventions to disentangle latents. Thursday, 11am EST

I feel like a lot of people leverage LLMs suboptimally, especially for long-form interactions that span a whole project. So I wrote a VSCode extension that supports what I think is a better use paradigm. 🧵 1/N

Extension: marketplace.visualstudio.com/items?itemName…

Code: github.com/locuslab/chatl…