Yi Zeng 曾祎

@EasonZeng623

probe to improve | Ph.D. @VTEngineering | Amazon Research Fellow | #AI_security 🛡 #Adversarial ⚔️ #Backdoors 🎠 I deal with the dark side of machine learning.

ID:901961911448723456

https://www.yi-zeng.com 28-08-2017 00:17:32

460 Tweets

1.0K Followers

1.0K Following

😯The 100% jailbreaking of GPT3.5/4, Llama-2, Gemma, and Claude2/3 is truly impressive! Smart ideas for leveraging API access.

Congrats to Maksym Andriushchenko 🇺🇦, francesco croce, and TML Lab (EPFL)!

arxiv.org/abs/2404.02151

Thrilled to announce the Trustworthy Interactive Decision-Making with Foundation Models workshop at #IJCAI2024 ! 🌟 A great opportunity for dialogue on ethical AI decision-making, blending human-centric methods & foundation models.

‼️⚠️bad day to be an LLM⚠️‼️

Haize Labs took one of our favorite adversarial attack algorithms, GCG, and made it *38x* faster

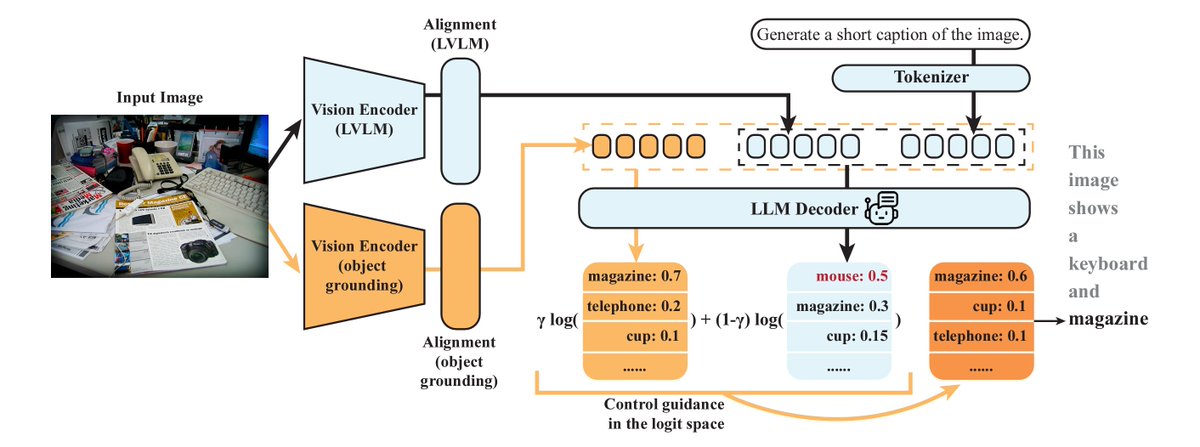

Large Vision Language Models are prone to object hallucinations – how to cost-efficiently address this issue? 🚀 Introducing MARINE: a training-free, API-free framework to tackle object hallucinations.

Joint work with an amazing team: Linxi Zhao, Weitong ZHANG, and Quanquan Gu!

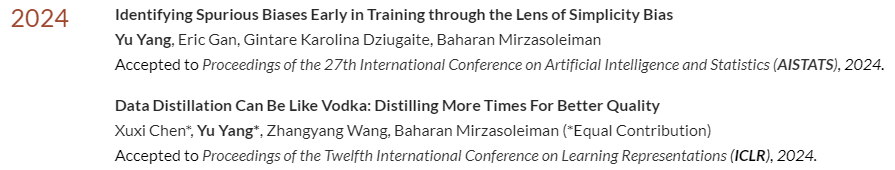

🎉 Two of my papers have been accepted this week at #ICLR2024 & #AISTATS !

Big thanks and congrats to co-authors Xuxi Chen & Eric Gan, mentors Atlas Wang & Gintare Karolina Dziugaite, and especially my advisor Baharan Mirzasoleiman! 🙏

More details on both papers after the ICML deadline!

![Chulin Xie (@ChulinXie) on Twitter photo 2024-03-15 19:29:44 Some text data is private & cannot be shared... Can we generate synthetic replicas with privacy guarantees?🤔 Instead of DP-SGD finetuning, use Aug-PE with inference APIs! Compatible with strong LLMs (GPT-3.5, Mistral), where DP-SGD is infeasible. 🔗alphapav.github.io/augpe-dpapitext [1/n]](https://pbs.twimg.com/media/GIvCnVoWoAAQL9-.jpg)

![Boyi Wei (@wei_boyi) on Twitter photo 2024-02-15 16:02:07 Wondering why LLM safety mechanisms are fragile? 🤔 😯 We found safety-critical regions in aligned LLMs are sparse: ~3% of neurons/ranks ⚠️Sparsity makes safety easy to undo. Even freezing these regions during fine-tuning still leads to jailbreaks 🔗 boyiwei.com/alignment-attr… [1/n]](https://pbs.twimg.com/media/GGY1xVJaUAAa69H.jpg)