Dhruv Diddi

@dhruvdiddi

Powering Hardware Aware Inference | SLMs | Computer Vision | XGBoost | Formerly at Google, Turo #OwnYourAI #GetSoloTech #PhysicalAI

ID: 1110234349465538560

https://www.linkedin.com/in/dhruvdiddi/

25-03-2019 17:37:50

335 Tweets

77 Followers

220 Following

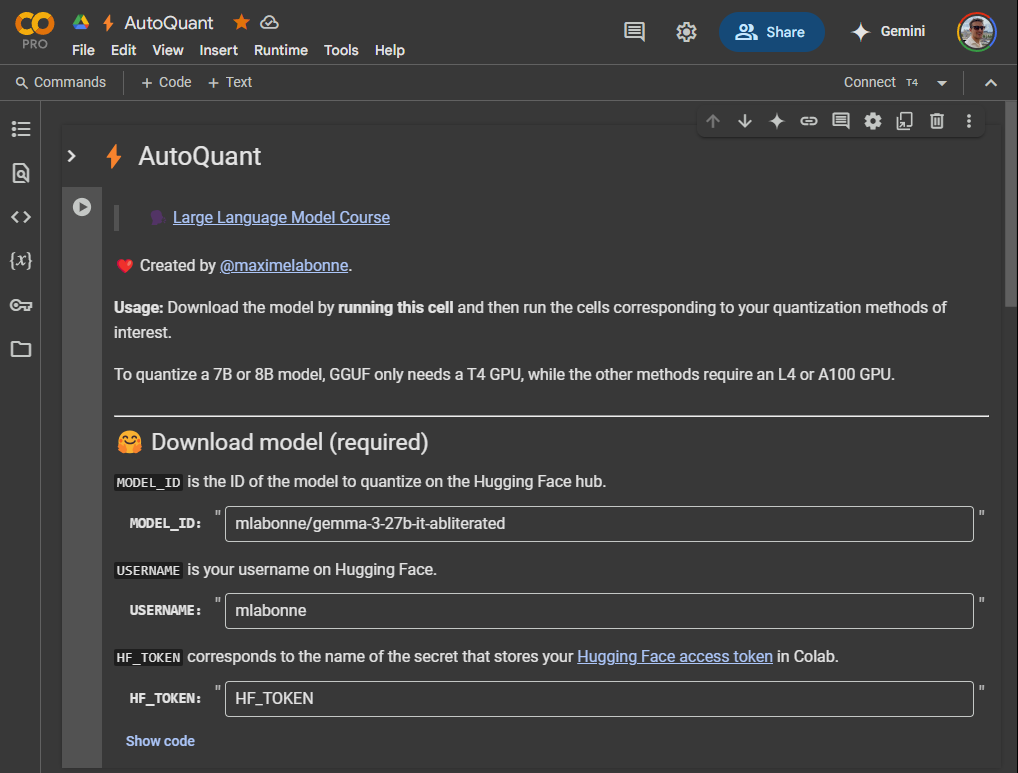

⚡ AutoQuant: I updated AutoQuant to make GGUF versions of the abliterated Gemma 3 models. It implements imatrix (importance matrix) quantization and can split the model into multiple files. The GGUF code is based on gguf-my-repo, maintained by Xuan-Son Nguyen and Vaibhav (VB) Srivastav. It also supports GPTQ, ExLlamaV2, AWQ, and HQQ!
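For readers unfamiliar with the GGUF path, here is a rough sketch of the llama.cpp steps a gguf-my-repo-style pipeline chains together: convert to a 16-bit GGUF, optionally compute an importance matrix from calibration text, quantize, and optionally shard the result. This is not AutoQuant's or gguf-my-repo's actual code. It assumes llama.cpp's `convert_hf_to_gguf.py` script and the `llama-imatrix`, `llama-quantize` and `llama-gguf-split` tools are available, and the helper `build_gguf_commands`, the output file names and the example model directory are hypothetical. The commands are only built, not executed.

```python
import shlex
from pathlib import Path
from typing import List, Optional


def build_gguf_commands(
    model_dir: str,
    quant_type: str = "Q4_K_M",
    calibration_file: Optional[str] = None,
    split_max_size: Optional[str] = None,
) -> List[List[str]]:
    """Hypothetical helper: return llama.cpp command lines for one GGUF run.

    Assumes llama.cpp's tools are installed; nothing is executed here.
    """
    name = Path(model_dir).name
    f16 = f"{name}.f16.gguf"
    quantized = f"{name}.{quant_type}.gguf"

    # 1. Convert the Hugging Face checkpoint to a 16-bit GGUF file.
    commands = [
        ["python", "convert_hf_to_gguf.py", model_dir,
         "--outtype", "f16", "--outfile", f16],
    ]

    quantize = ["llama-quantize"]
    if calibration_file is not None:
        # 2. Optional: compute an importance matrix from calibration text.
        commands.append(["llama-imatrix", "-m", f16,
                         "-f", calibration_file, "-o", "imatrix.dat"])
        quantize += ["--imatrix", "imatrix.dat"]

    # 3. Quantize, guided by the importance matrix when one was computed.
    commands.append(quantize + [f16, quantized, quant_type])

    if split_max_size is not None:
        # 4. Optional: shard the quantized model; the last argument is the
        #    output prefix for the split files.
        commands.append(["llama-gguf-split", "--split",
                         "--split-max-size", split_max_size,
                         quantized, f"{name}.{quant_type}"])
    return commands


if __name__ == "__main__":
    # Hypothetical model directory, used only to print the commands.
    for cmd in build_gguf_commands("gemma-3-4b-it-abliterated",
                                   calibration_file="calibration.txt",
                                   split_max_size="2G"):
        print(shlex.join(cmd))
```

Keeping command construction separate from execution makes it easy to inspect or log the exact pipeline before handing each list to `subprocess.run`.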