Delong Chen (陈德龙)

@delong0_0

Ph.D. student @HKUST, Visiting Researcher at FAIR Paris @AIatMeta.

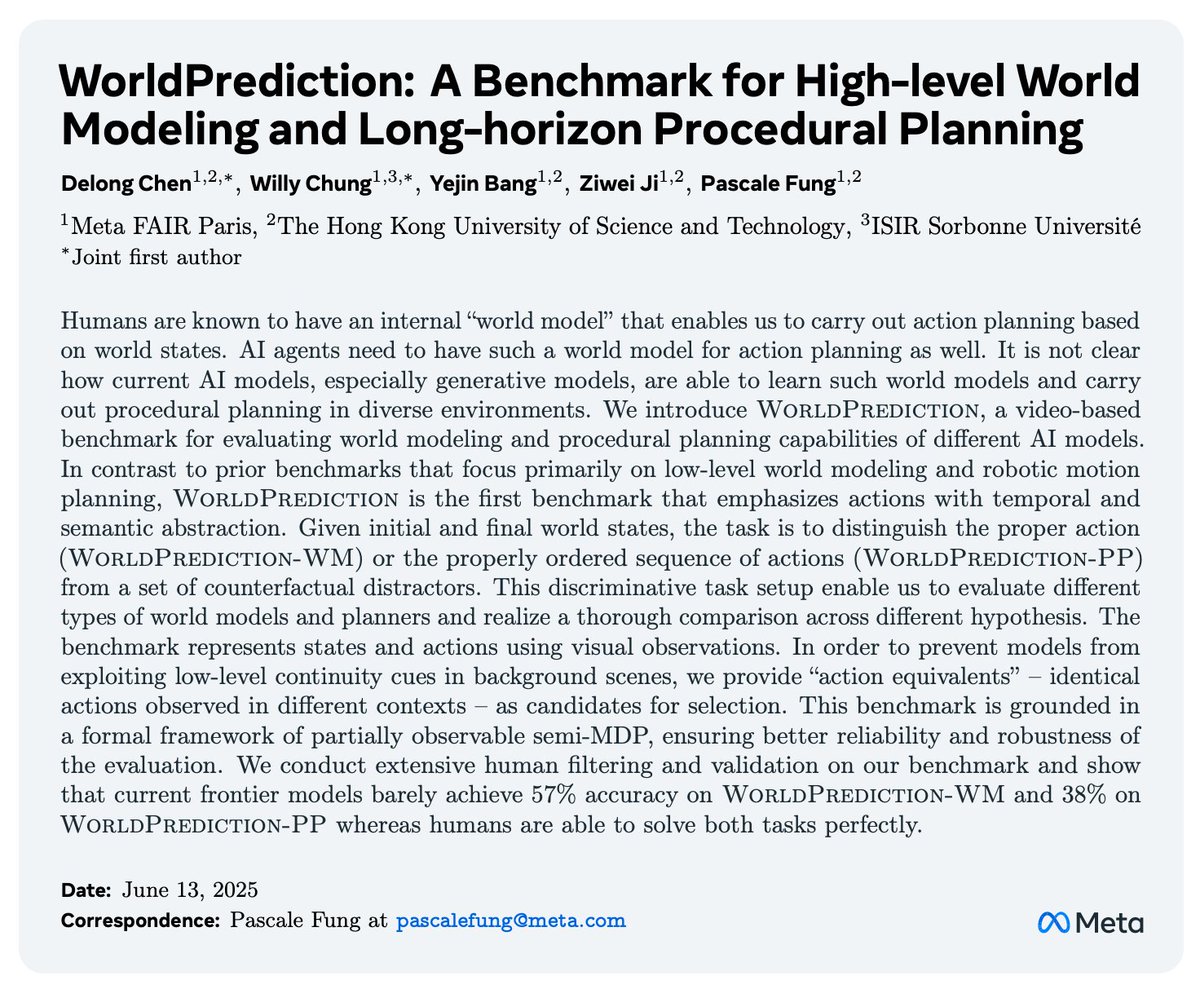

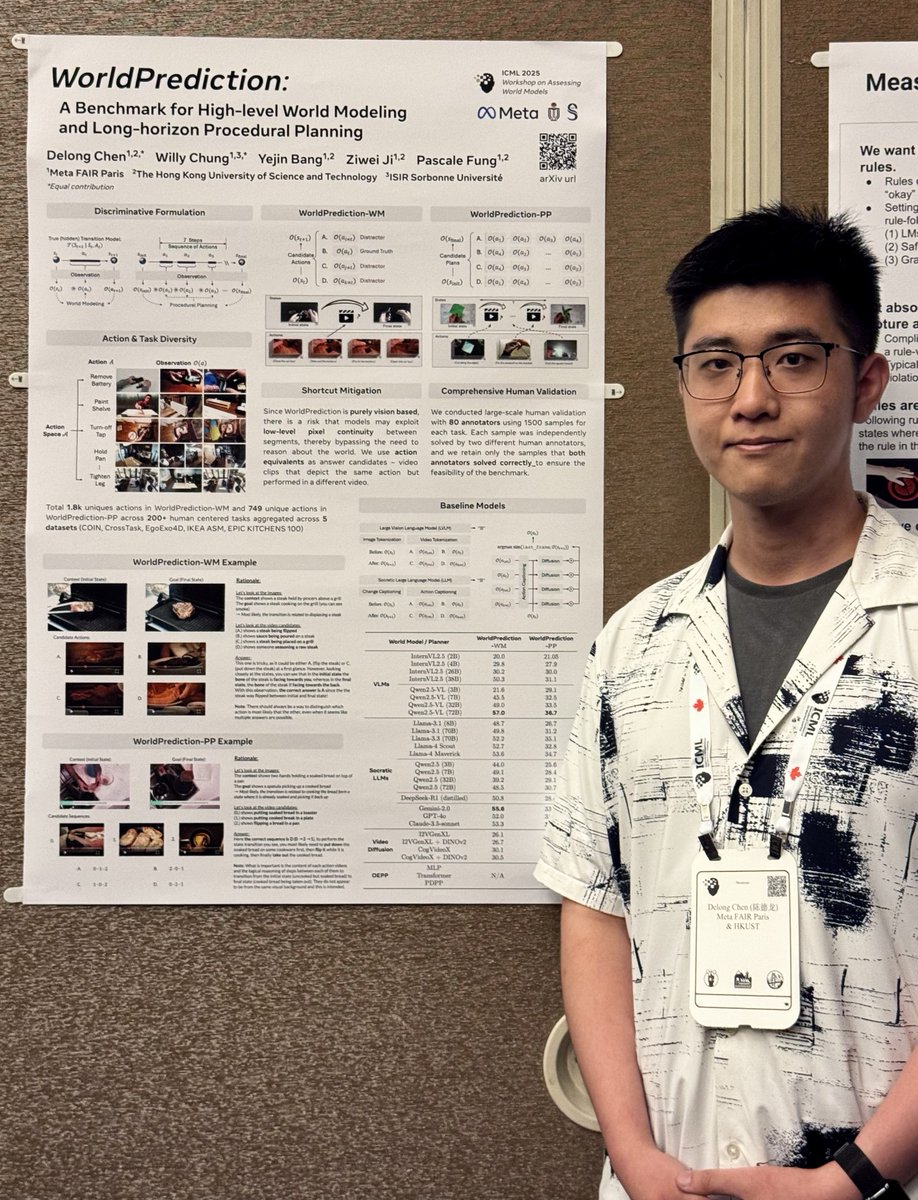

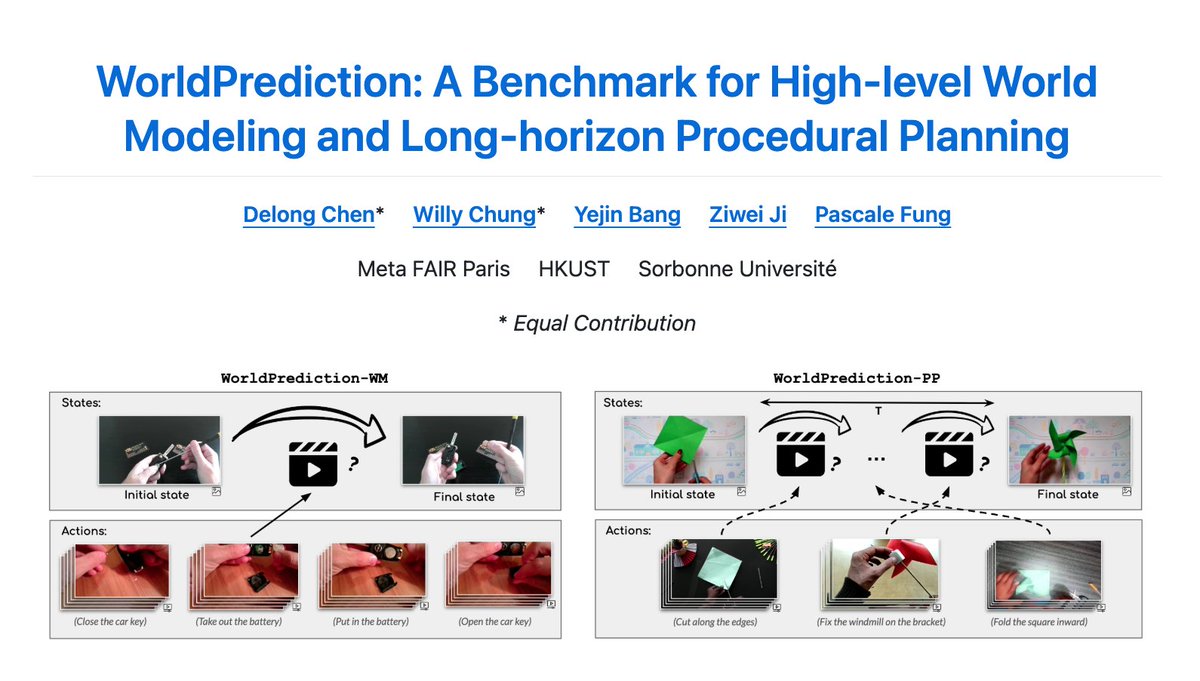

Working on vision-language world modeling.

ID: 4895781409

https://chendelong.world/ 12-02-2016 04:59:21

81 Tweets

217 Followers

424 Following

A systematic empirical study of what makes for good JEPA planners 👍🏻 Nice work and congrats to Basile Terver! 2025 has been a great year for JEPA, and there is definitely more to come in 2026!