Computational Sciences & Engineering

@computational_s

Magazine of #Computational Sciences • Engineering • Fluid Dynamics • #cfd | est. 2013 by @CrowdJournals LLC

ID: 1474936142

https://www.ThePostdoctoral.com 01-06-2013 15:04:49

14,14K Tweets

7,7K Followers

4,4K Following

Universe MDPI MDPI Physical Sciences Aerospace Astronomy Geosciences MDPI Mathematics MDPI Remote Sensing MDPI Computational Sciences & Engineering

Physics is not a belief system. Comets💫 are not Asteroids☄️. The 💫SL9 supercomputer simulation should be studied by geophysicists & geologists. Scale invariance in fluid dynamics is the key to unlocking geomorphological anomalies found on Earth. NASA Ames🖖🏽 Planetary Society✨

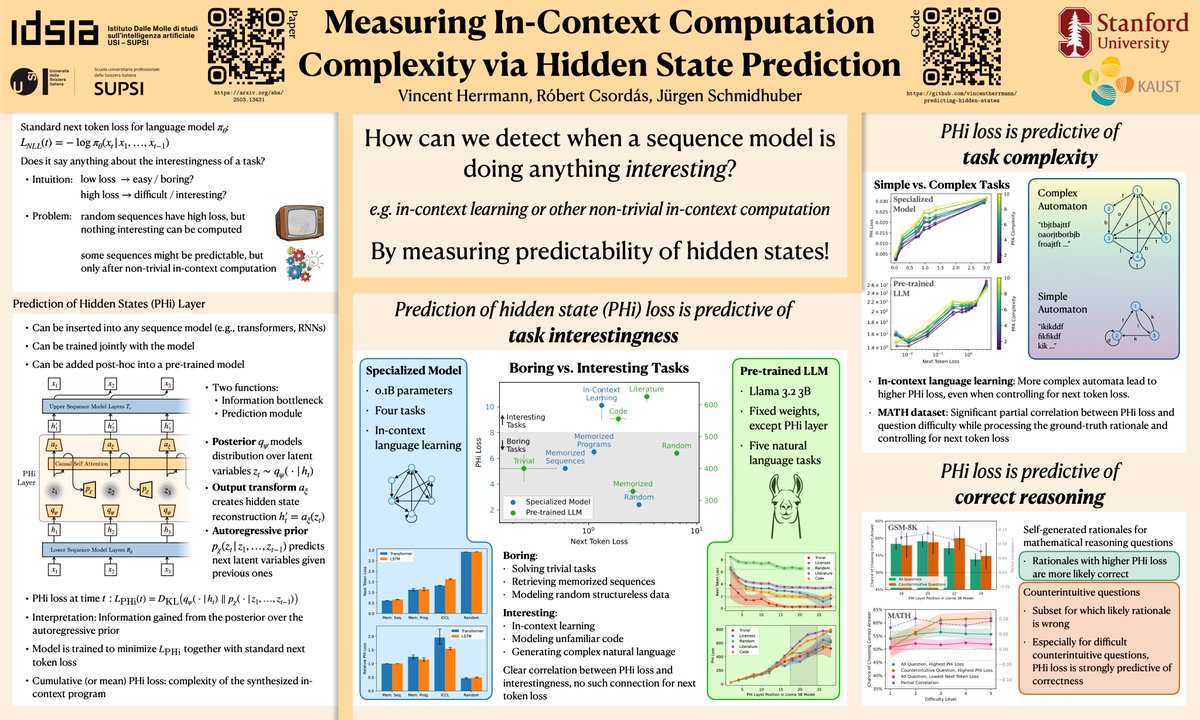

Excited to share our new ICML paper, with co-authors Csordás Róbert and Jürgen Schmidhuber! How can we tell if an LLM is actually "thinking" versus just spitting out memorized or trivial text? Can we detect when a model is doing anything interesting? (Thread below👇)

ᑕOՏᗰIᑕ ᗰᗴՏՏᗴᑎᘜᗴᖇ ≈ 𝕃𝕦𝕚𝕤 𝔸𝕝𝕗𝕣𝕖𝕕𝕠⁷ ∞∃⊍

Imagine if a comet💫 chunk slammed Earth! Full plasma physics smashing 🌎’s crust at Mach >30. I think the hypervelocity of 💫 plasma isn’t factored in by academia. Are they stuck thinking asteroids☄️ & 💫 are the same? Do ☄️ have a coma saturated with atomized gases⛽️? Computational Sciences & Engineering 🖖🏽