Cleanlab

@cleanlabai

Add trust & reliability to your AI & RAG systems ✨

Join the trustworthy AI revolution: cleanlab.ai/careers

ID: 1453005303075774472

http://cleanlab.ai 26-10-2021 14:27:54

644 Tweets

2.2K Followers

229 Following

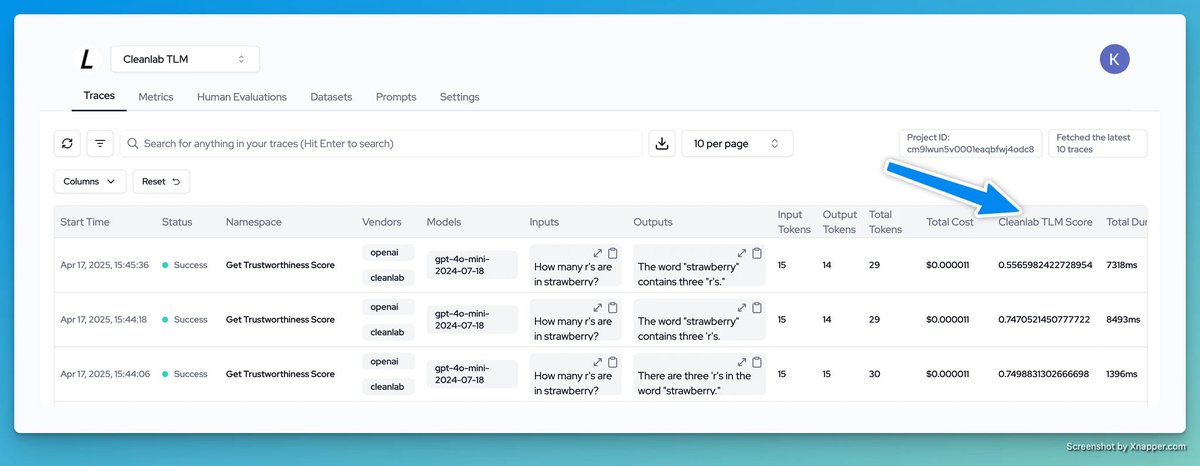

New: Langtrace.ai now includes native support for Cleanlab! Log trust scores, explanations, and metadata for every LLM response, automatically. Instantly surface risky or low-quality outputs.
📝 Blog: langtrace.ai/blog/langtrace…
💻 Docs: docs.langtrace.ai/supported-inte…

New integration: Cleanlab + Weaviate • vector database! Build and scale Agents & RAG with Weaviate. Then add Cleanlab to:
- Score trust for every LLM response
- Flag hallucinations in real time
- Deploy safely with any LLM
📷weaviate.io/developers/int…

Introducing the fastest path to AI Agents that don't produce incorrect responses:
- Power them with your data using LlamaIndex 🦙
- Make them trustworthy using Cleanlab