Chen Shani

@chenshani2

NLP Postdoc @ Stanford

ID: 1200741300645052416

30-11-2019 11:40:26

261 Tweets

226 Followers

302 Following

Yann LeCun and I have been pondering the concept of optimal representation in self-supervised learning, and we're excited to share our findings in a recently published paper! 📝🔍 arxiv.org/abs/2304.09355

2/2 papers submitted to EMNLP'23 have been accepted, should be the highlight of my PhD! But, I can't be happy when there's a war... EMNLP 2025 #IsraelUnderAttack

🎉Excited to announce our paper's acceptance at #EMNLP2023! We explore a fascinating question: When faced with (un)answerable queries, do LLMs actually grasp the concept of (un)answerability?🧐 This work is a collaborative effort with Avi Caciularu Shauli Ravfogel omer goldman and Ido Dagan 1/n

1. I'm officially a Ph.D! Thank you for everything Hyadata Lab (Dafna Shahaf)!
2. I'll present 2 papers at EMNLP:
Towards Concept-Aware LLMs (lnkd.in/dji73KXj)
FAME: Flexible, Scalable Analogy Mappings Engine (lnkd.in/dxX8YsUP)
Jilles Vreeken Hyadata Lab (Dafna Shahaf) Hebrew University האוניברסיטה העברית בירושלים

The language people use when they interact with each other changes over the course of the conversation. 🔍 Will we see a systematic language change along the interaction of human users with a text-to-image model? #EMNLP23 arxiv.org/abs/2311.12131 With Leshem (Legend) Choshen 🤖🤗 @NeurIPS Omri Abend 🧵👇

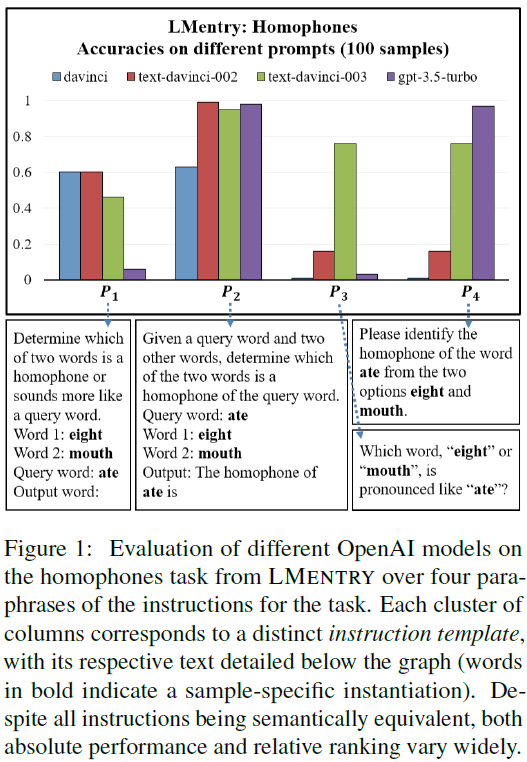

🚀 Excited to share our latest paper about the sensitivity of LLMs to prompts! arxiv.org/abs/2401.00595 Our work may partly explain why some models seem less accurate than their formal evaluation may suggest. 🧐 Guy Kaplan, Dan H.M 🎗, Rotem Dror, Hyadata Lab (Dafna Shahaf), Gabriel Stanovsky

Stanford NLP Retreat 2024! Ryan Louie and I organized a PowerPoint Karaoke 🎤 My favorite part is Chris' answer: Q: What is the first principal component for both babies and undergrads? Chris Manning: HUNGER! Chris Manning Stanford NLP Group

#ELSCspecialseminar with Dr. Chen Shani on the topic of “Designing Language Models to Think Like Humans” will take place on Tuesday, June 25, at 14:00 IST. Come hear the lecture at ELSC: Room 2004, Goodman bldg. Chen Shani

You know all those arguments that LLMs think like humans? Turns out it's not true. 🧠 In our paper "From Tokens to Thoughts: How LLMs and Humans Trade Compression for Meaning" we test it by checking if LLMs form concepts the same way humans do Yann LeCun Chen Shani Dan Jurafsky

New research shows LLMs favor compression over nuance — a key reason they lack human-like understanding. By Stanford postdoc Chen Shani, CDS Research Scientist Ravid Shwartz Ziv, CDS Founding Director Yann LeCun, & Stanford professor Dan Jurafsky. nyudatascience.medium.com/the-efficiency…

How can we help LLMs move beyond the obvious toward generating more creative and diverse ideas? In our new TACL paper, we propose a novel approach to enhance LLM creative generation! arxiv.org/abs/2504.20643 Chen Shani Gabriel Stanovsky Dan Jurafsky Hyadata Lab (Dafna Shahaf) Stanford NLP Group HUJI NLP