CambridgeLTL

@cambridgeltl

Language Technology Lab (LTL) at the University of Cambridge. Computational Linguistics / Machine Learning / Deep Learning. Focus: Multilingual NLP and Bio NLP.

ID: 964208941977792512

Website: http://ltl.mml.cam.ac.uk/

Joined: 15-02-2018 18:45:00

245 Tweets

2.2K Followers

85 Following

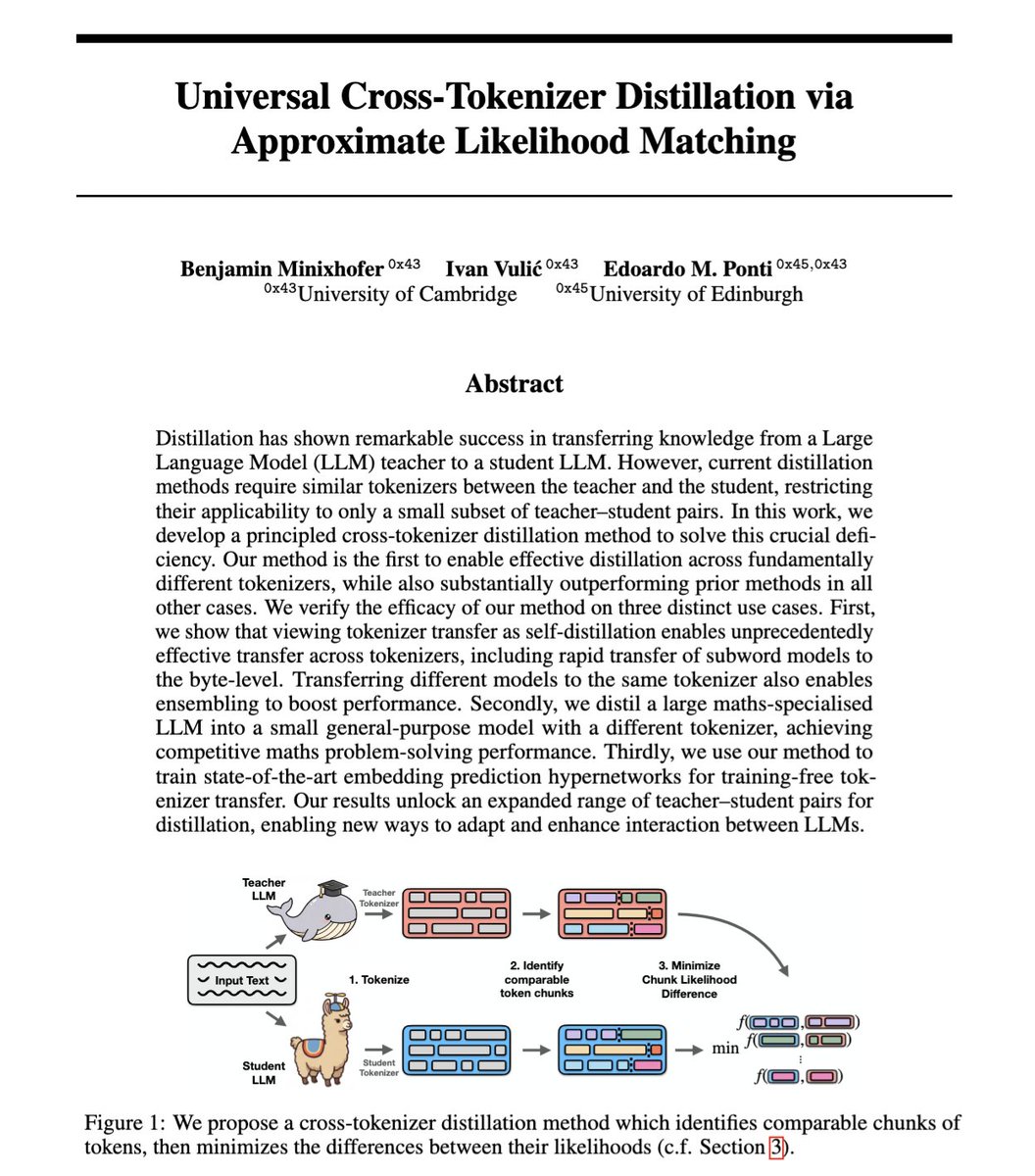

Our LTL team had an amazing time at M2L school in Croatia 🇭🇷! 🏆 Hannah won Best Poster Award! → arxiv.org/html/2509.1481… 🎤 Ivan Vulić delivered an amazing lecture → drive.google.com/file/d/1feqnLe…