Stella Biderman

@blancheminerva

Open source LLMs and interpretability research at @BoozAllen and @AiEleuther. My employers disown my tweets. She/her

ID: 1125849026308575239

http://www.stellabiderman.com

Joined 07-05-2019 19:44:59

11,11K Tweets

15,15K Followers

743 Following

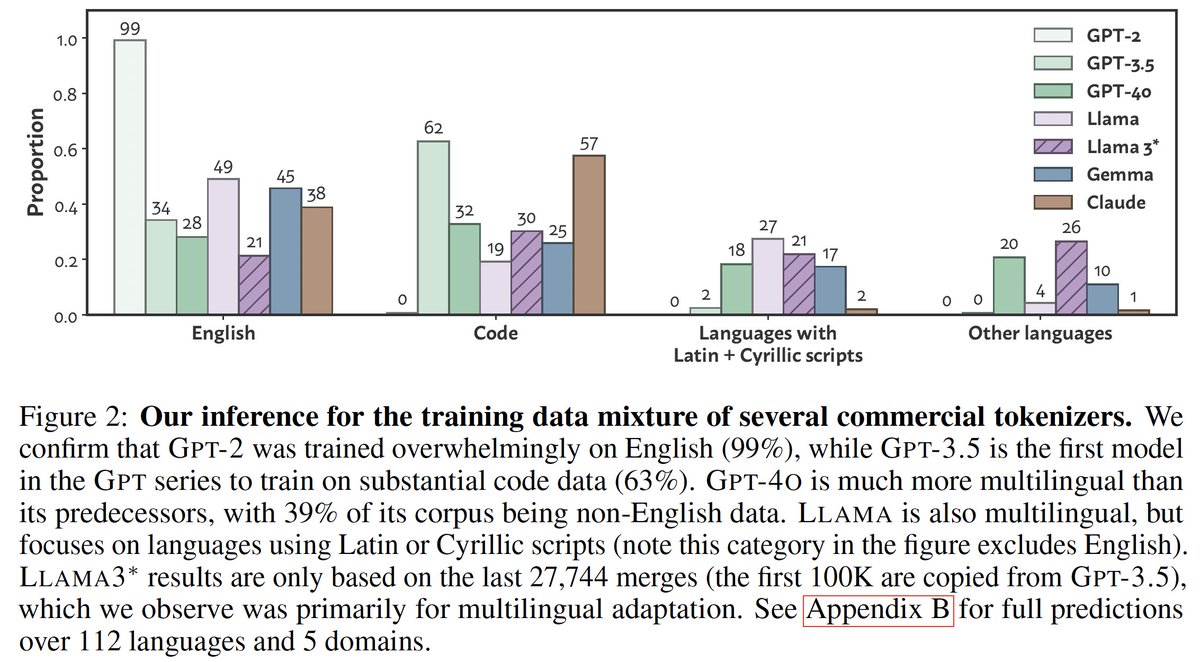

What do BPE tokenizers reveal about their training data?🧐 We develop an attack🗡️ that uncovers the training data mixtures📊 of commercial LLM tokenizers (incl. GPT-4o), using their ordered merge lists! Co-1⃣st Jonathan Hayase arxiv.org/abs/2407.16607 🧵⬇️

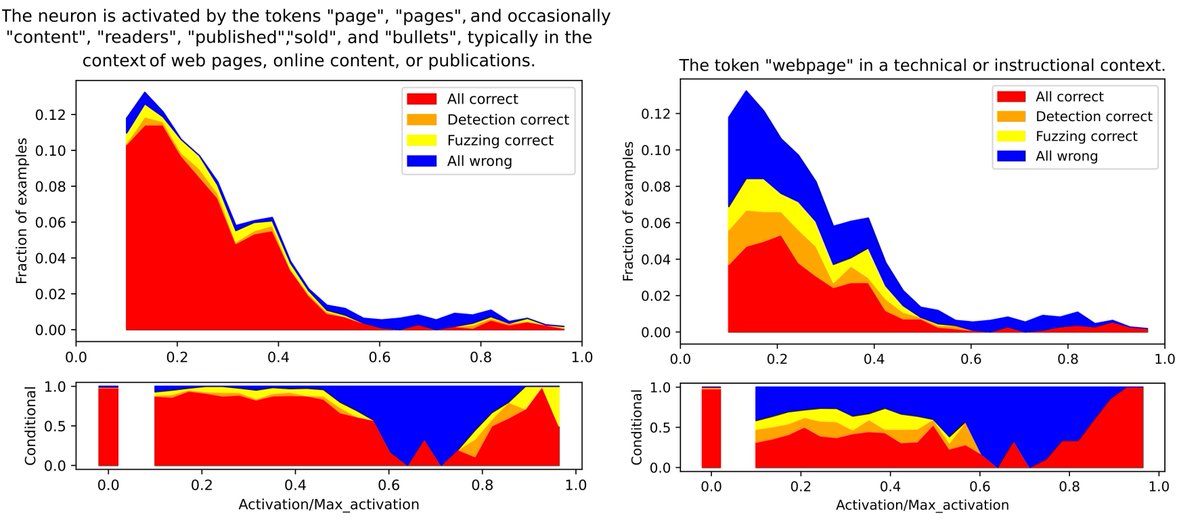

Sparse autoencoders recover a diverse set of interpretable features, but the sheer number of features makes labeling them by hand intractable. We build new automated pipelines to close that gap, scaling our understanding to the features of GPT-2 and Llama-3 8B. @goncaloSpaulo Jacob Drori Nora Belrose

Very cool paper that shows impressive performance with ternary LLMs. Discovering new papers that use EleutherAI's GPT-NeoX library in the wild is always a treat as well :D

If you're looking to learn about training large language models, this cookbook led by Quentin Anthony details essential information that papers and learning resources often gloss over.

The RWKV v6 Finch line of models is here! Scaling from 1.6B all the way to 14B, it pushes the boundary for attention-free transformers and multilingual models. Cleanly licensed under Apache 2.0, under the Linux Foundation. Find out more in the writeup here: blog.rwkv.com/p/rwkv-v6-finc…