Sagie Benaim

@benaimsagie

Assistant Professor @CseHuji | Computer Vision and Machine Learning.

ID: 852149937823506432

https://sagiebenaim.github.io/ 12-04-2017 13:22:32

94 Tweets

470 Followers

938 Following

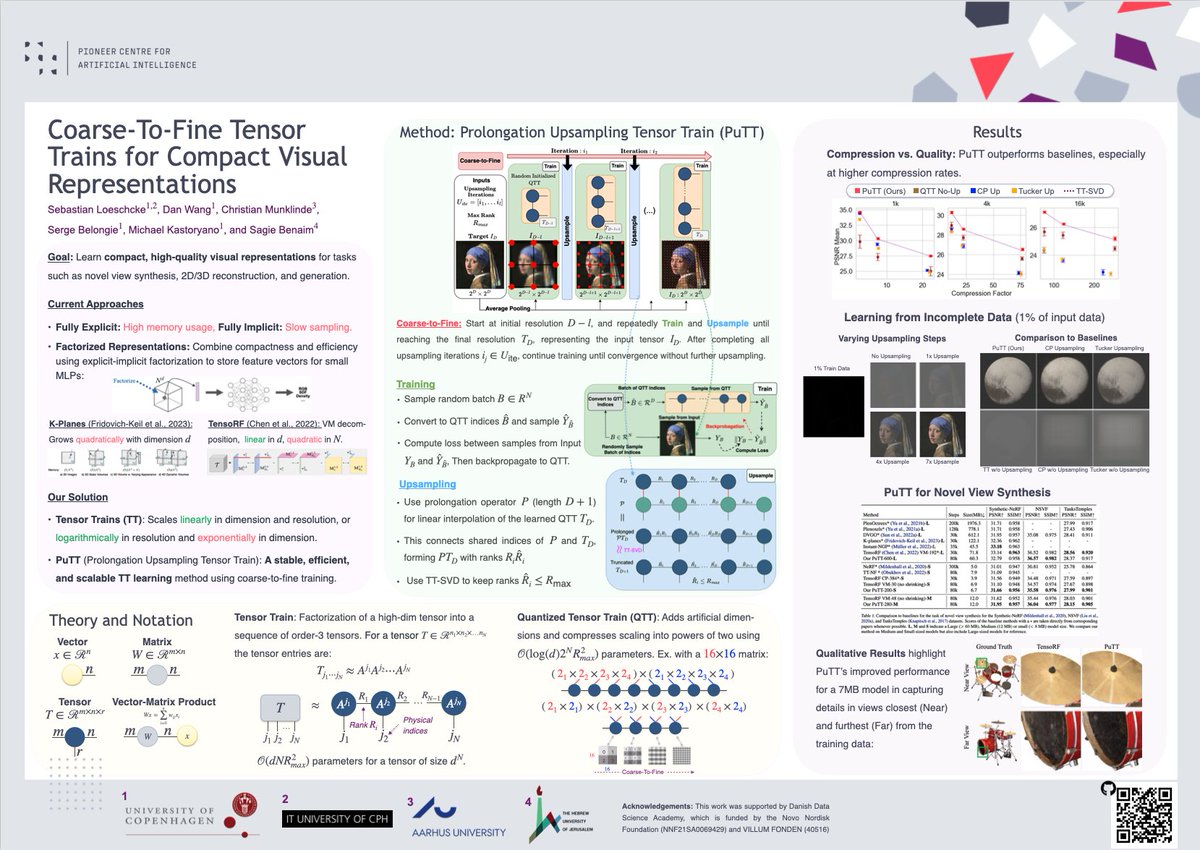

Excited to share PuTT, accepted to #ICML2024 🎉. PuTT efficiently learns high-quality visual representations with tensor trains, achieving SOTA performance in various 2D/3D compression tasks. Great collab with D. Wang, C. Leth-Espensen, Serge Belongie, Michael Kastoryano, Sagie Benaim

Excited for the ICML Conference in Vienna! DM me if you want to chat. I’ll be presenting:
- LoQT: Low Rank Adapters for Quantized Pre-Training (𝐎𝐑𝐀𝐋) - 27 Jul, 10:10-10:30, Hall A1
- Coarse-To-Fine Tensor Trains for Compact Visual Representations - 25 Jul, 11:30-13:00, Hall C 4-9 #203

Visit poster #203 today at 11:30 in Hall C at the ICML Conference: “Coarse-To-Fine Tensor Trains for Compact Visual Representations.” We present a novel method for training Quantized Tensor Trains, achieving highly compressed and high-quality results for view synthesis and 2D/3D compression.

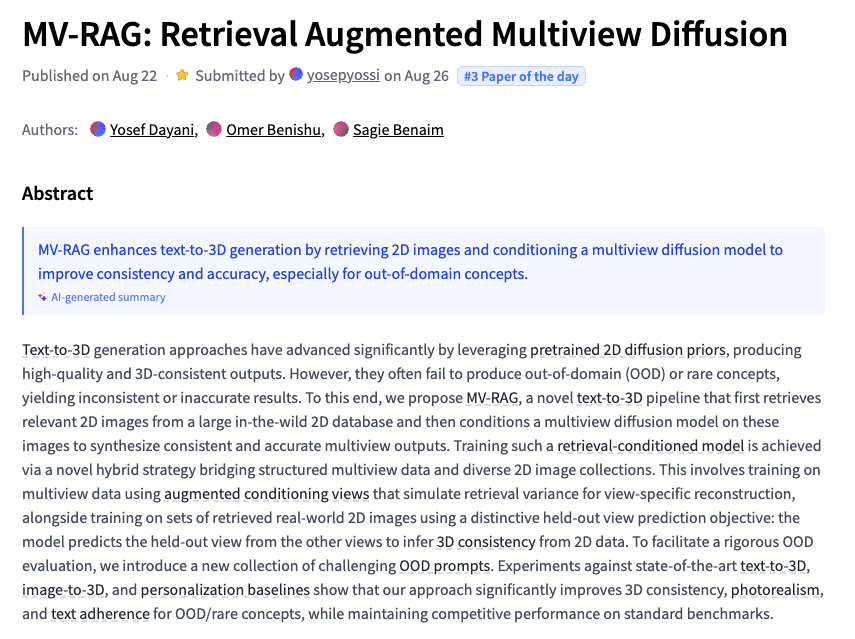

Yosef Dayani (@yosefday): [1/10] 🤔 What if you wanted to generate a 3D model of a “Bolognese dog” 🐕 or a “Labubu doll” 🧸?

Try it with existing text-to-3D models → they collapse.

Why? These concepts are rare or new, and the model has never seen them.

🚀 Our solution: MV-RAG

See details below ⬇️

![Yosef Dayani (@yosefday) on Twitter photo](https://pbs.twimg.com/media/GzQrO_TXQAEIOb7.jpg)