Xiaodong Liu

@allenlao

Deep Learning and NLP Researcher: interested in machine learning, NLP, dogs and cats. Opinions are my own.

ID: 535040353

https://github.com/namisan

24-03-2012 05:43:31

150 Tweets

470 Followers

254 Following

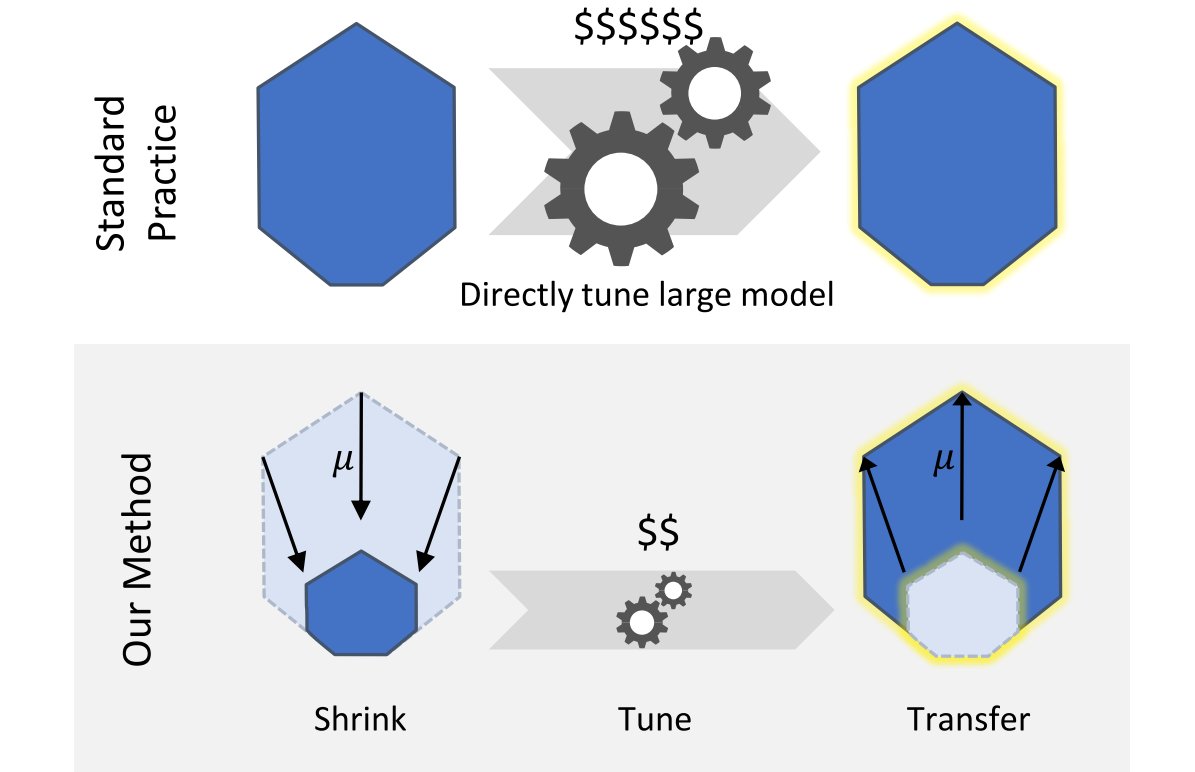

[🥳new video🧠] "Tensor Programs V: Tuning Large Neural Networks via Zero-Shot Hyperparameter Transfer" (μTransfer) paper explained! YT: youtu.be/MNOJQINH-qw Greg Yang Edward Hu @ibab_ml Szymon Sidor Xiaodong Liu Jakub Pachocki Weizhu Chen Jianfeng Gao Microsoft Research OpenAI

![Aleksa Gordić (水平问题) (@gordic_aleksa) on Twitter photo](https://pbs.twimg.com/media/FOC9SjRX0AYPzoo.jpg)

Today, an exciting paper from Microsoft Research: Tensor Programs V: Tuning Large Neural Networks via Zero-Shot Hyperparameter Transfer arxiv.org/abs/2203.03466 While it's too early to say, this may be remembered as the single biggest efficiency advancement in hyperparameter tuning.

🤓In 2017, Google researchers introduced the Transformer in "Attention is all you need", which took AI by storm. 5 startups were born: Adept (🏦 Air Street Capital), Inceptive, NEAR Protocol, @CohereAI, CharacterAI. Only 1 of the 8 authors remains at Google AI; another is at OpenAI. 😉

🚨[New Paper] Check out our recent work on parameter-efficient fine-tuning. We introduce a new method to boost the performance of Adapter to outperform full model fine-tuning. Great collaboration with Subhabrata Mukherjee, Xiaodong Liu, Jing Gao, Ahmed Awadallah and Jianfeng Gao.

Today we're releasing all Switch Transformer models in T5X/JAX, including the 1.6T param Switch-C and the 395B param Switch-XXL models. Pleased to have these open-sourced! github.com/google-researc… All thanks to the efforts of James Lee-Thorp, Adam Roberts, and Hyung Won Chung

Q: ACM FAccT (main AI Ethics conf) was $10,000 short. They also turned down Google sponsorship due to G's continued refusal to address structural discrimination & trauma to me & @timnitGebru (@dair-community.social/bsky.social) specifically. Is there any issue w/ me starting a GoFundMe to make up the diff?

Kudos to co-first authors Yu Gu, Robert Tinn, Hao Cheng for driving the project to a big success, and big congrats to all the co-authors Michael R. Lucas, Naoto Usuyama, Xiaodong Liu, Tristan Naumann, Jianfeng Gao.