Adrien Bardes

@AdrienBardes

PhD Student at @MetaAI & @Inria with Yann LeCun and Jean Ponce, interested in self-supervised learning and computer vision.

ID: 787639668

http://adrien987k.github.io

28-08-2012 19:01:18

42 Tweets

538 Followers

231 Following

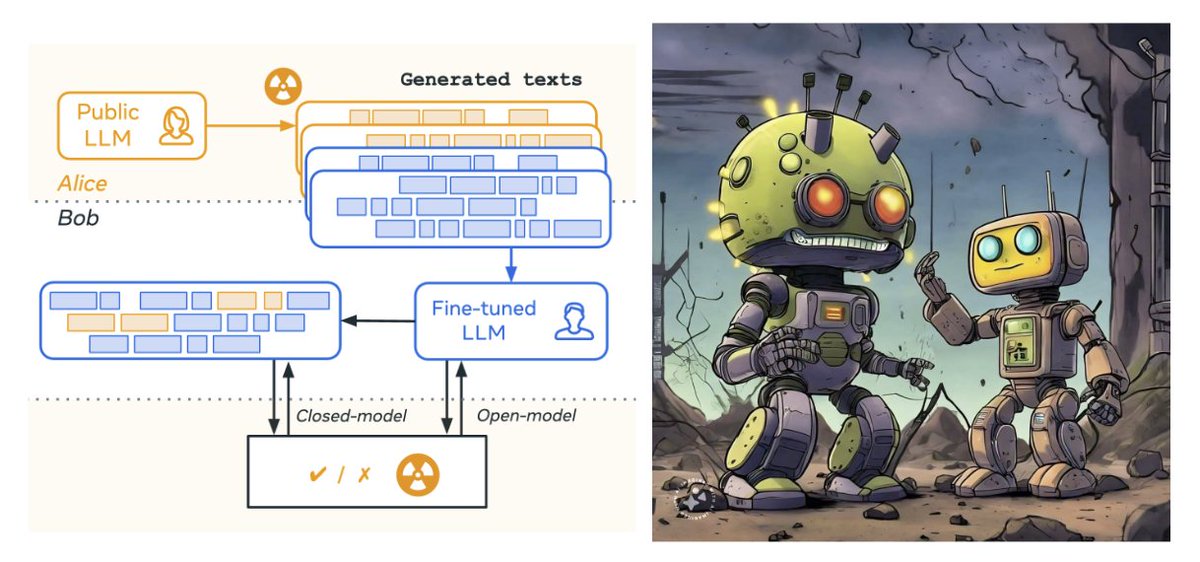

OpenAI may secretly know that you trained on GPT outputs!

In our work 'Watermarking Makes Language Models Radioactive', we show that training on watermarked text can be easily spotted ☢️

Paper: arxiv.org/abs/2402.14904

Pierre Fernandez, AI at Meta, École polytechnique, Inria

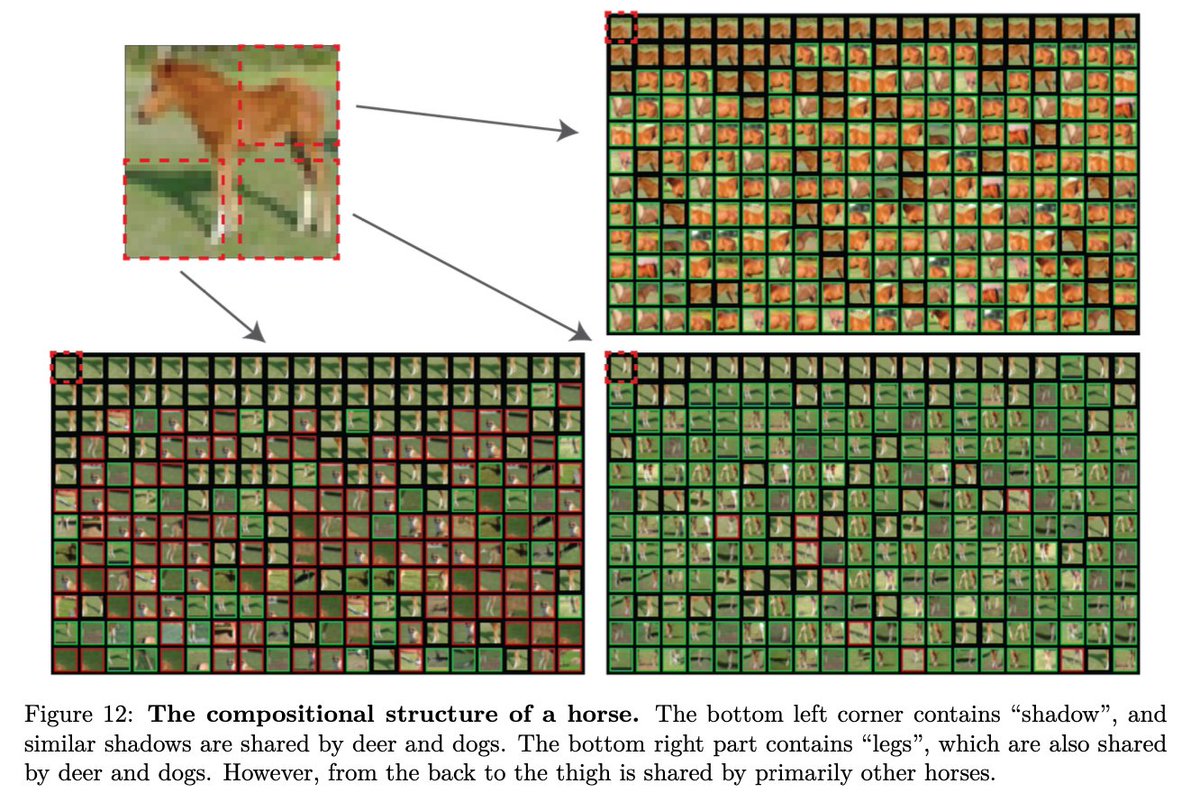

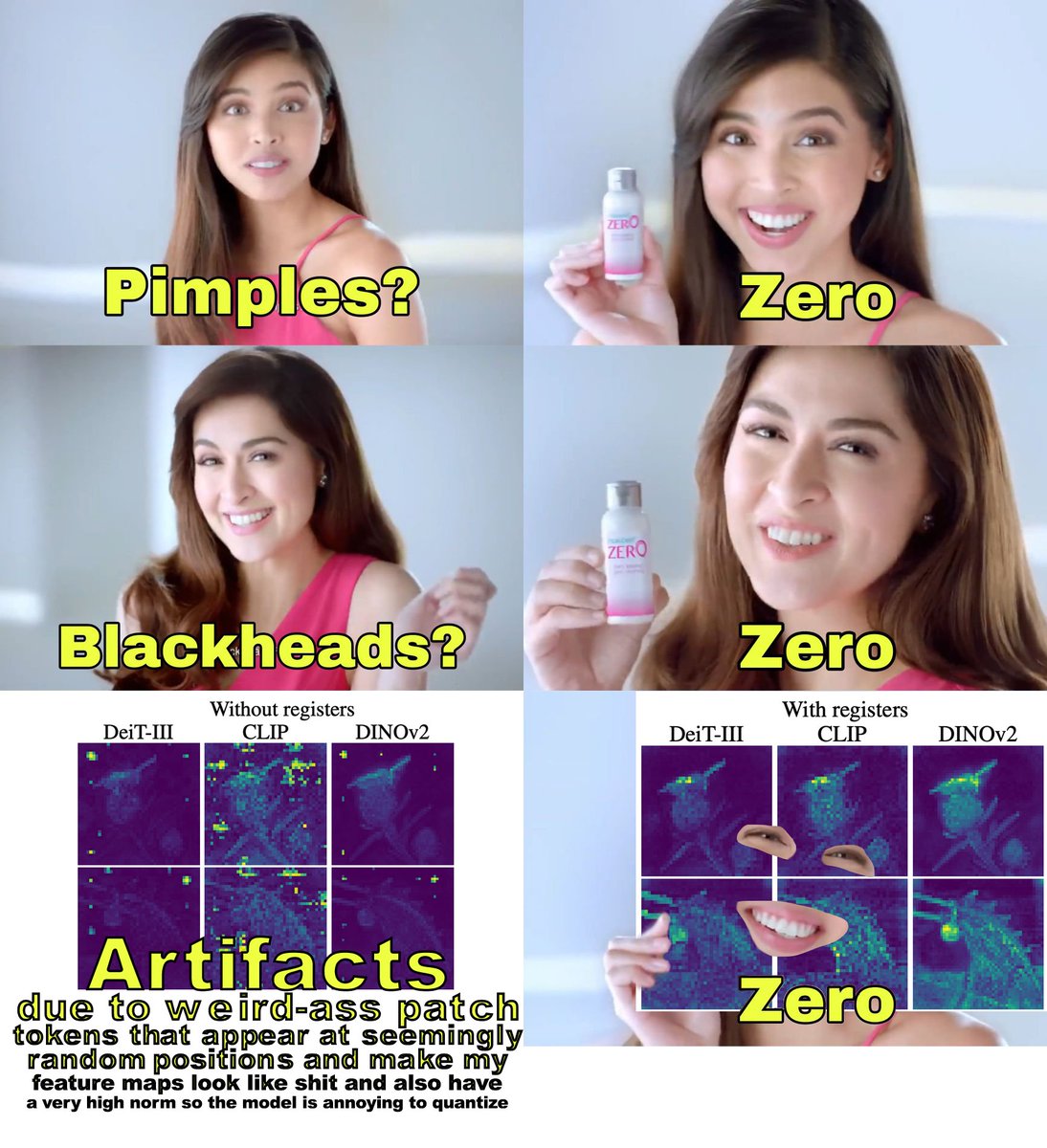

Bag of Image Patch Embedding Behind the Success of Self-Supervised Learning

Yubei Chen, Adrien Bardes, @LiZengy, Yann LeCun

tl;dr: bag of patches and compositional structure of the horse is all you need.

(Except: where is the DINOv2 comparison?)

openreview.net/pdf?id=r06xREo…