Andrew Ilachinski

@ai_ilachinski

I straddle two worlds: science/complexity/AI and photography (andy-ilachinski.com). This page focuses on the more scientific pursuits.

https://www.cna.org/news/AI-Podcast

18-11-2017 19:27:14

5.5K Tweets

880 Followers

3.3K Following

Interesting review paper by Tomoya Nakai, Tatsuya Daikoku (大黒達也), and Yohei Oseki. They argue that there are hierarchical and predictive mechanisms shared across language, music, and mathematics. sciencedirect.com/science/articl…

🚨 New today in Science Magazine!! 🚨 We’re publishing the results of the largest AI persuasion experiments to date: 76k participants, 19 LLMs, 707 political issues. We examine “levers” of AI persuasion: model scale, post-training, prompting, personalization, & more… 🧵:

Humans learn from unique data (each person's OWN life), yet our visual representations eventually align. In our recent work at Google DeepMind, "Unique Lives, Shared World", we train models on "single-life" videos from distinct sources and study their alignment and generalisation.

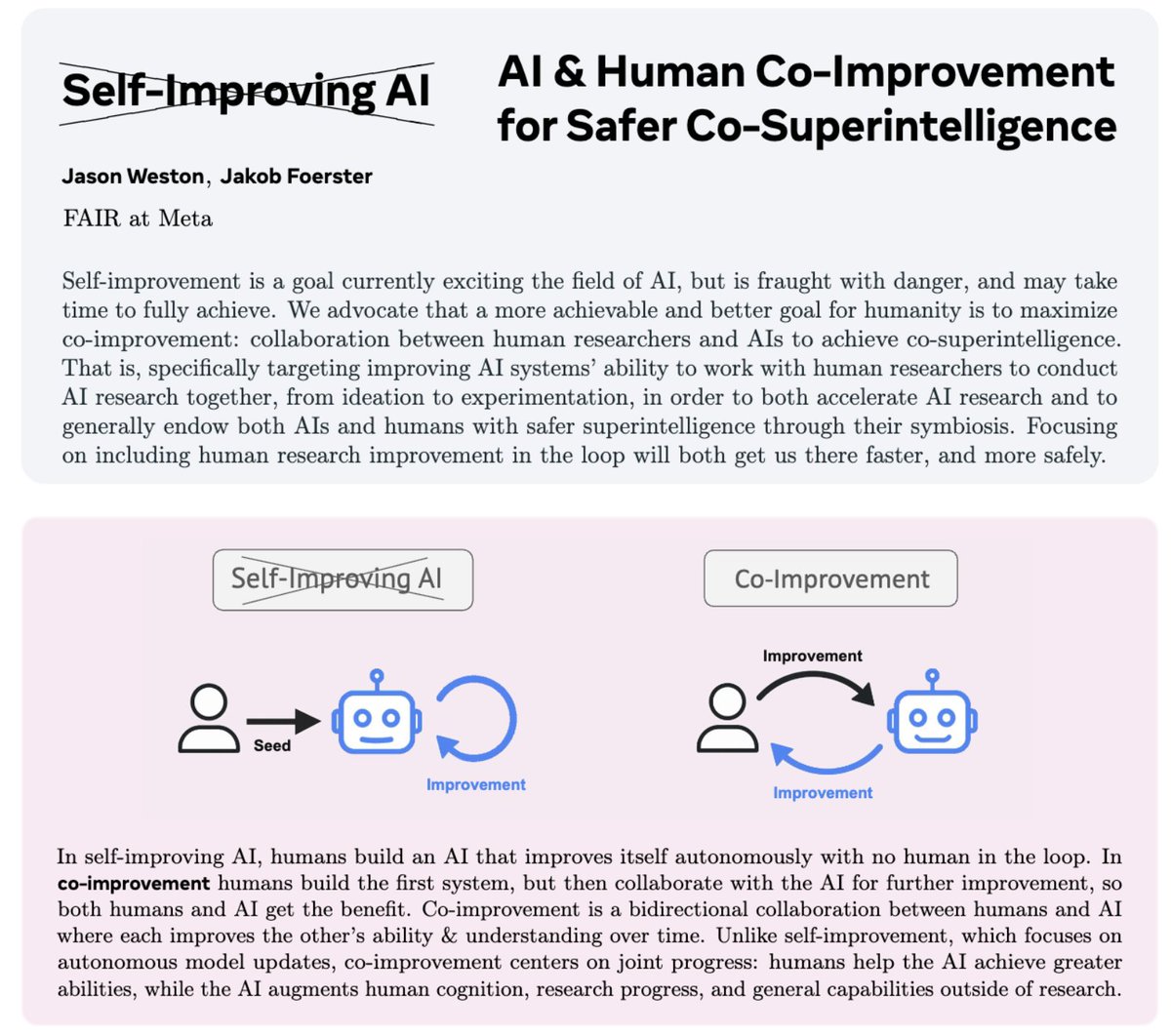

🤝 New Position Paper!! 👤🔄🤖 Jakob Foerster and I wrote a position piece on what we think is the path to safer superintelligence: co-improvement. Everyone is focused on self-improving AI, but (1) we don't know how to do it yet, and (2) it might be misaligned with humans.

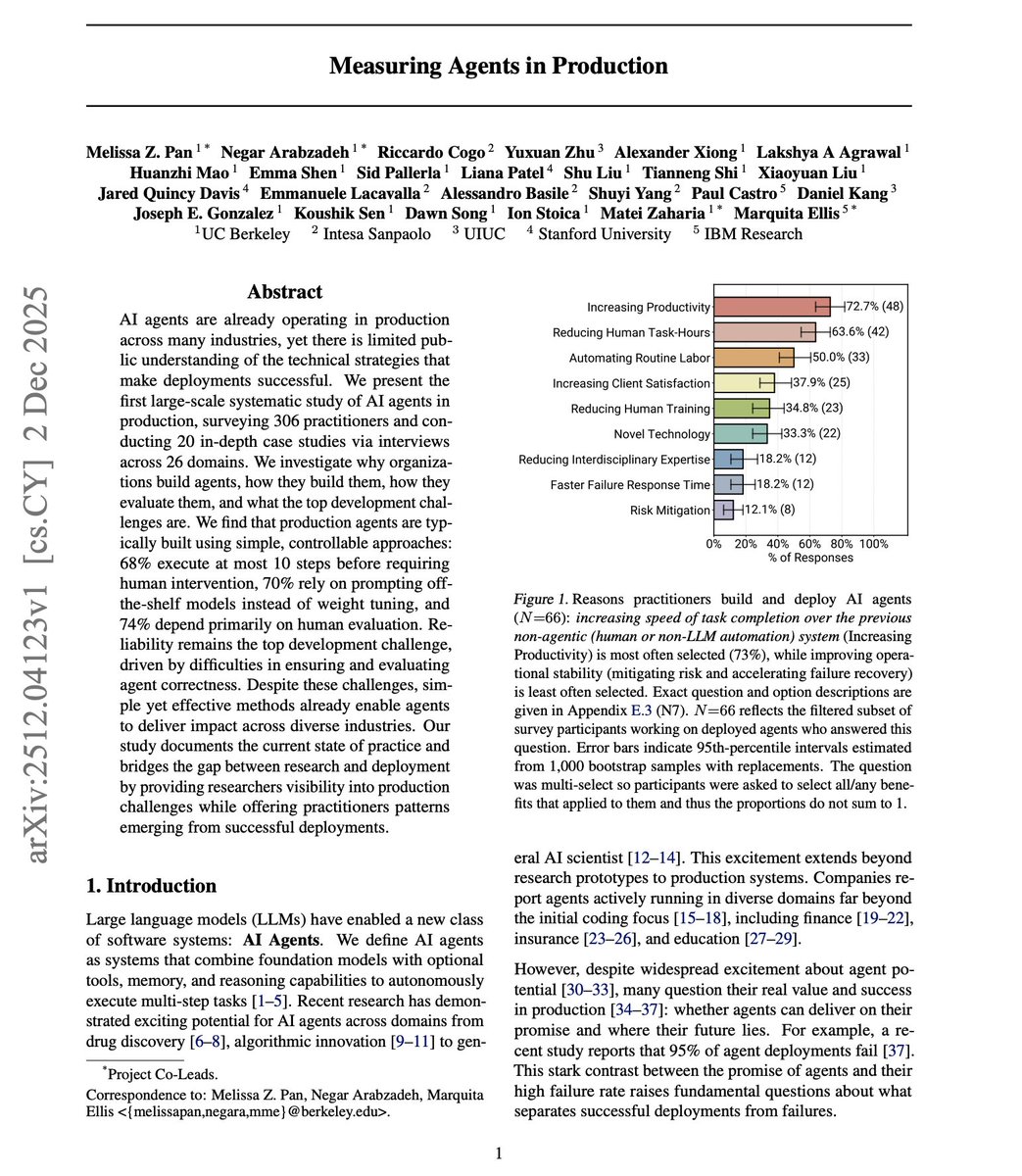

Measuring Agents in Production: Melissa Pan et al. investigate how AI agents are built and deployed across 26 industries, finding that 73% aim to boost productivity through automation while relying on simple, controllable methods rather than complex autonomy. 📝 arxiv.org/abs/2512.04123