yihan

@0xyihan

rational optimist | SCS@CMU

@GreenpillCN @PlanckerDao |

rep @gitcoin GR15 GCC UDC |

AI alignment, longevity

zhihu.com/people/zh3036

ID: 1570074700000432128

http://linktr.ee/zh3036 14-09-2022 15:39:22

266 Tweets

1.1K Followers

733 Following

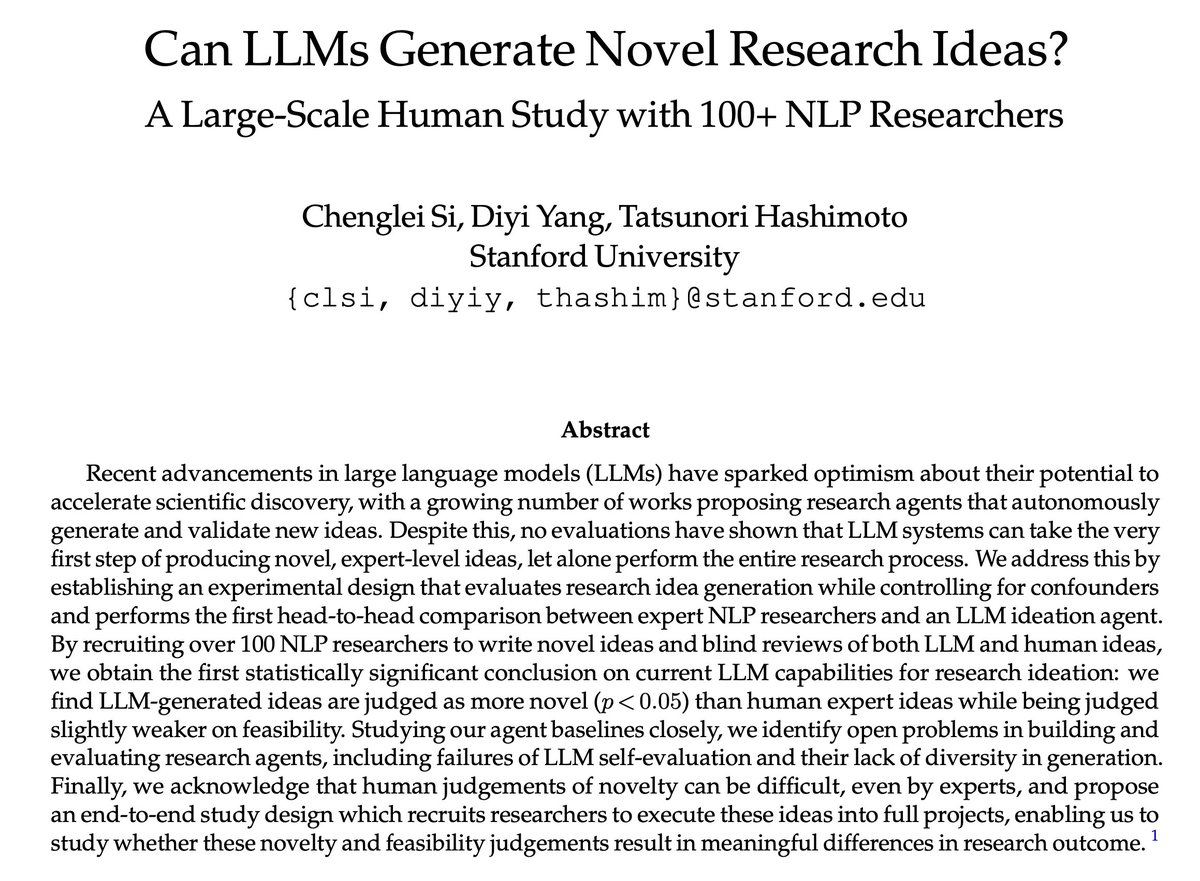

Yann LeCun A linguistic act is tied to its performative nature, which allows it to modify the state of things. Many speech acts do not describe doing something: they do it. Not every utterance is true or false, or a report. LLMs are trained on only a small part of a speech act.

First author Kanishk Gandhi's post: x.com/gandhikanishk/… Arxiv: arxiv.org/abs/2404.03683

Evals are emphasized so much at AI Engineer. As foundation models become more powerful, the system design paradigm will shift from imperative to declarative. The ability to clearly declare a measurable and operational goal will become more important.

I recall that in one session of AI Engineer, the speaker mentioned that there are more searches with long keywords these days than before. I suspect this is because searches made by Perplexity tend to have very long keywords.

📢 Super excited that our workshop "System 2 Reasoning At Scale" was accepted to #NeurIPS24, Vancouver! 🎉 🎯 How can we equip LMs with reasoning, moving beyond just scaling parameters and data? Organized w. Stanford NLP Group, Massachusetts Institute of Technology (MIT), Princeton University, Ai2, UW NLP 🗓️ When? Dec 15 2024