Evgeny Kuzyakov

@ekuzyakov

Co-founder of @fast_near, founder of @NearSocial_, Ex-@proximityfi, Ex-@NearProtocol, Ex-@google, ex-@facebook

ID:1706052276

https://github.com/evgenykuzyakov 28-08-2013 02:04:02

2.3K Tweets

21.1K Followers

153 Following

1400 receipts per second on NEAR Protocol mainnet.

I think it's the new record.

Including 500 function calls per second.

In a recent release, Brave Software added 'Pretty-print' to display JSON with indents. I've always appreciated how Firefox displayed JSON.

Just wanted to say: 'Thank you!'

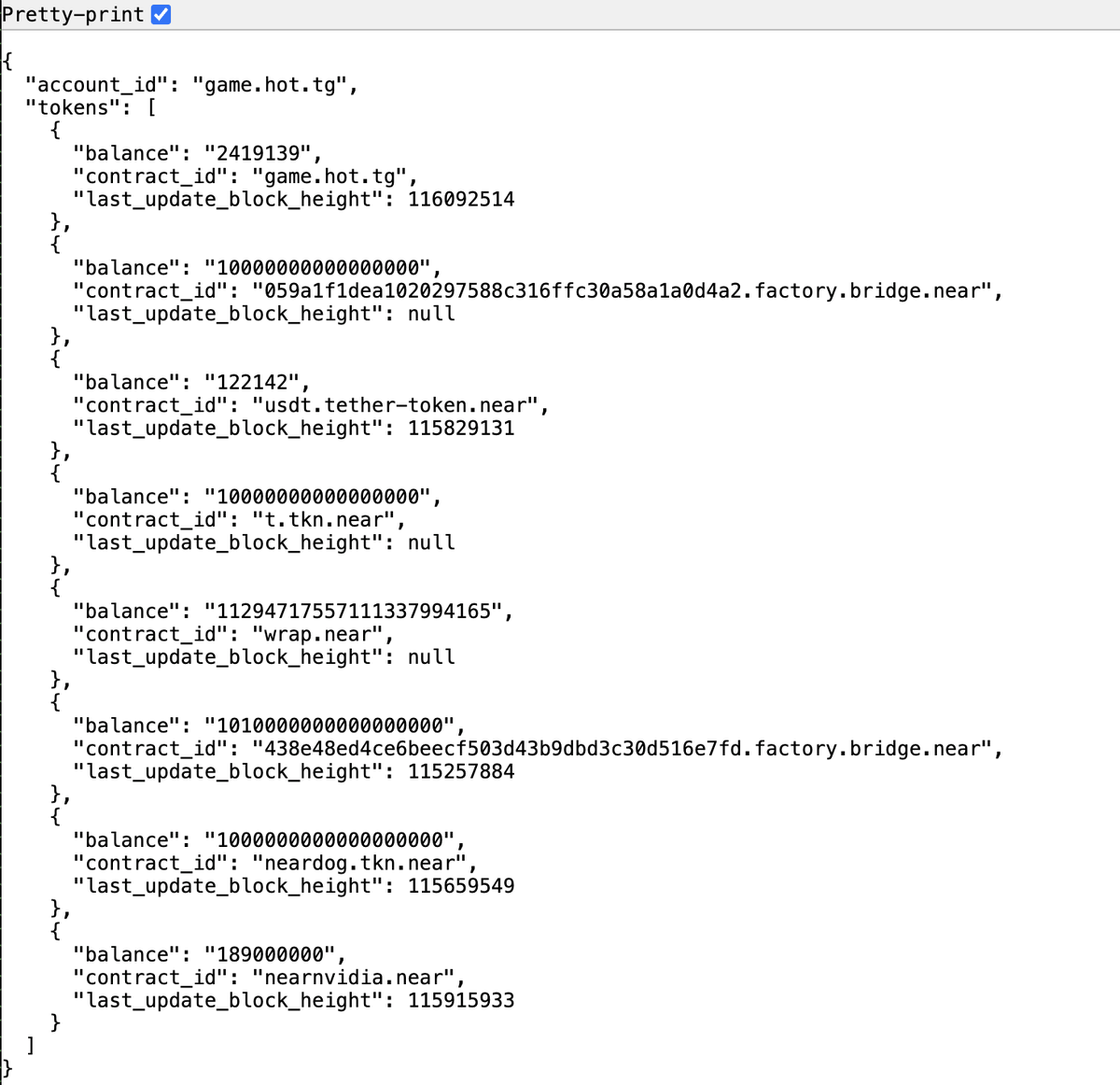

The latest FASTNEAR API returns FT balances.

Try it yourself: api.fastnear.com/v1/account/v2.…

Latest balances for all 357 tokens on Ref Finance contract in under 100ms.