Dan Hendrycks

@DanHendrycks

• Director of the Center for AI Safety (https://t.co/ahs3LYCpqv)

• GELU/MMLU/MATH

• PhD in AI from UC Berkeley

https://t.co/rgXHAnYAsQ

https://t.co/nPSyQMaY9b

ID:68538286

http://danhendrycks.com 24-08-2009 23:08:53

600 Tweets

17.3K Followers

80 Following

If you leave it to companies to decide what is safe you get the Boeing 737 max.

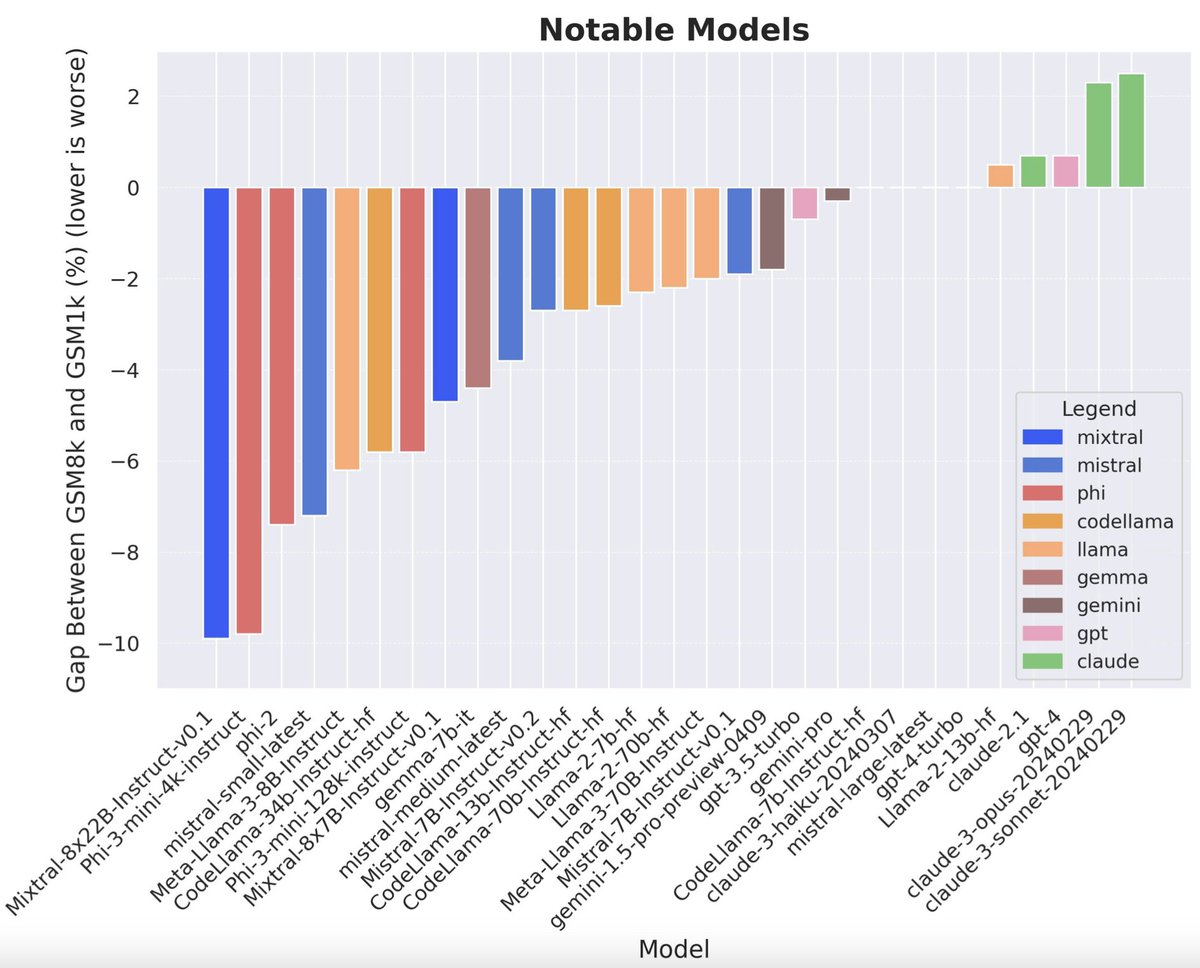

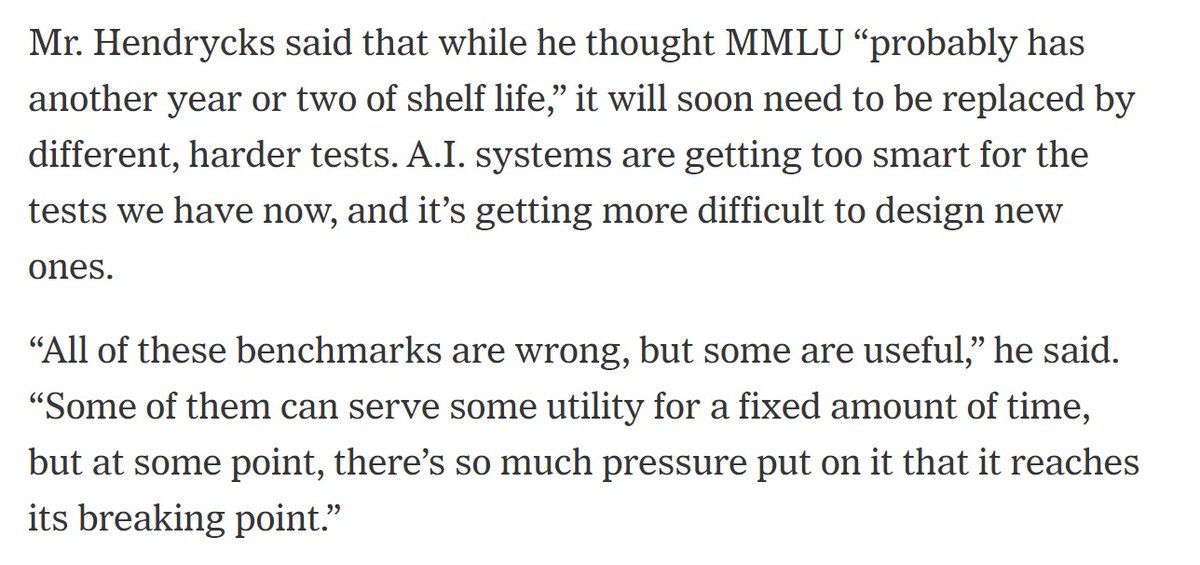

AI researchers like Dan Hendrycks, who helped create the MMLU (essentially the SAT for chatbots), told me that leading benchmark tests have reached 'saturation' (basically, they're too easy for today's LLMs) and that we will soon need to develop harder tests to gauge model…

We've released a post about the looming risk of AI cyberattacks on critical infrastructure.

It notes that we are living under a 'cyberattack overhang.'

Advances in defensive techniques are of no help if defenders are not keeping up to date.

safe.ai/blog/cybersecu… by Steve Newman