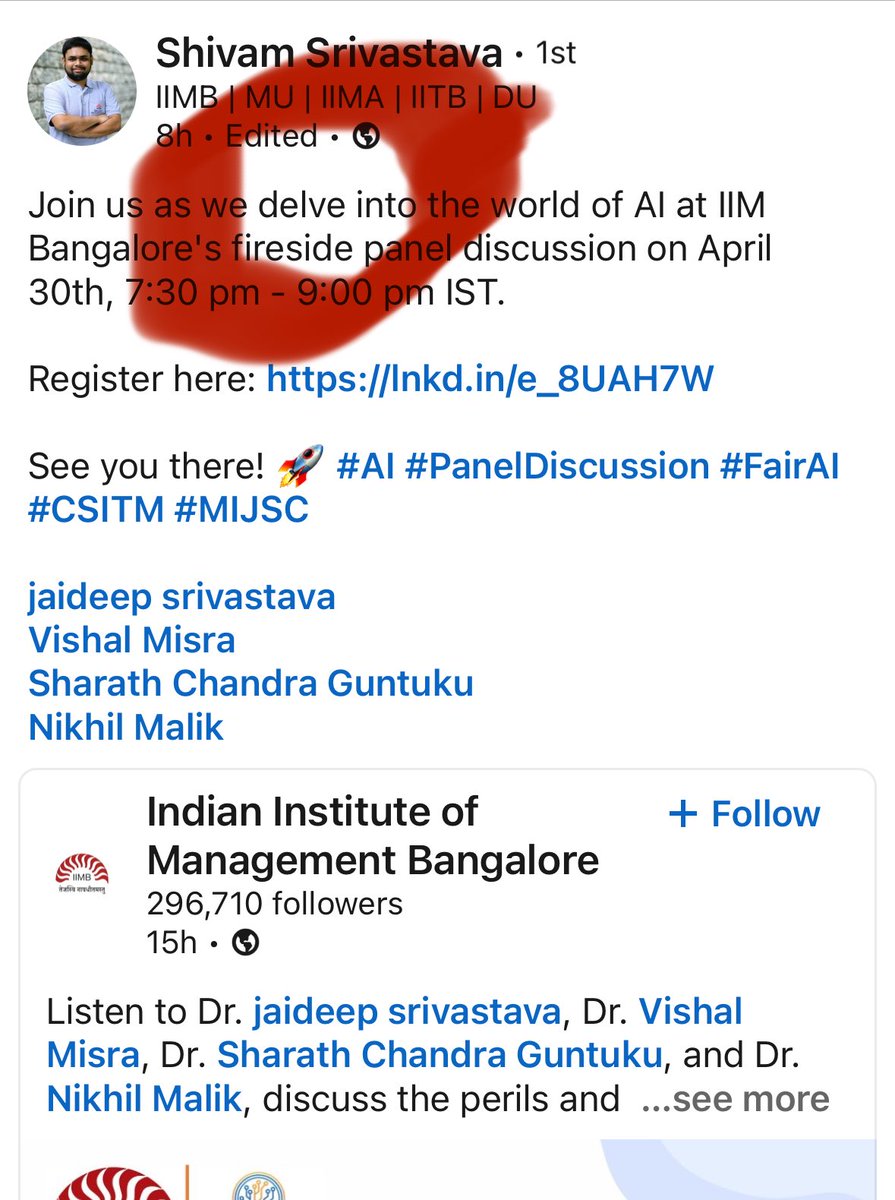

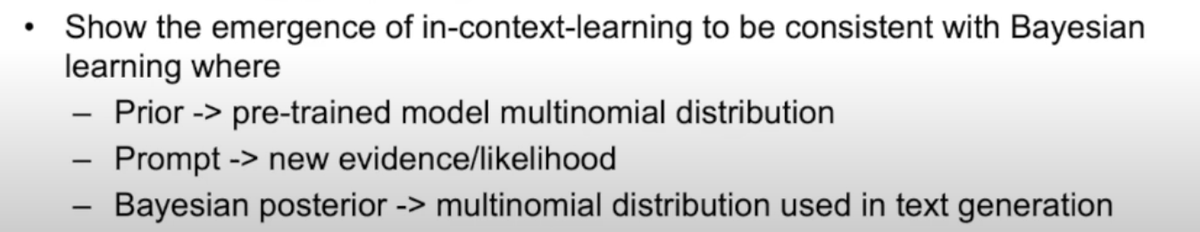

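This talk by Vishal Misra was an eye-opener on how few-shot prompting works under the hood: the examples in the prompt shift the probability distribution over output tokens. I'd forgotten that LLMs don't produce tokens directly. They produce a final hidden-state vector, which is scored against every token in the vocabulary to give a probability distribution, and the next token is then chosen (sampled, or picked greedily) from that distribution.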

youtu.be/sLodkyHlQhY
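To make the point about next-token distributions concrete, here is a minimal Python sketch. It assumes a made-up three-token vocabulary, a made-up unembedding matrix `UNEMBED`, and two hypothetical final hidden states (`bare` and `few_shot`) standing in for what a real model would compute from a prompt with and without few-shot examples; none of these values come from the talk. Each token is scored by the dot product of its vector with the hidden state, softmax turns the scores into probabilities, and the next token is sampled from them.

```python
import math
import random

# Hypothetical toy vocabulary and unembedding matrix (one vector per token).
# Real models have vocabularies of tens of thousands of tokens and
# hidden sizes in the thousands; these numbers are invented.
VOCAB = ["cat", "dog", "car"]
UNEMBED = [
    [1.0, 0.0],  # "cat"
    [0.8, 0.2],  # "dog"
    [0.0, 1.0],  # "car"
]

def logits(hidden):
    """Score every token by the dot product of its vector with the hidden state."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in UNEMBED]

def softmax(xs):
    """Turn logits into probabilities (subtracting the max for numerical stability)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_distribution(hidden):
    """Map each vocabulary token to its probability given the hidden state."""
    return dict(zip(VOCAB, softmax(logits(hidden))))

def sample(dist, rng):
    """Draw one token according to its probability."""
    tokens, probs = zip(*dist.items())
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical hidden states: a bare prompt vs. one where few-shot
# examples have pushed the context toward vehicles.
bare = [1.0, 0.5]
few_shot = [0.2, 2.0]

bare_dist = next_token_distribution(bare)
few_shot_dist = next_token_distribution(few_shot)

print(bare_dist)
print(few_shot_dist)
print(sample(few_shot_dist, random.Random(0)))
```

Because the score is a dot product, the highest-probability token is the one whose vector best aligns with the hidden state, which is why a "closest token" intuition is loosely right even though the model actually outputs a full distribution.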

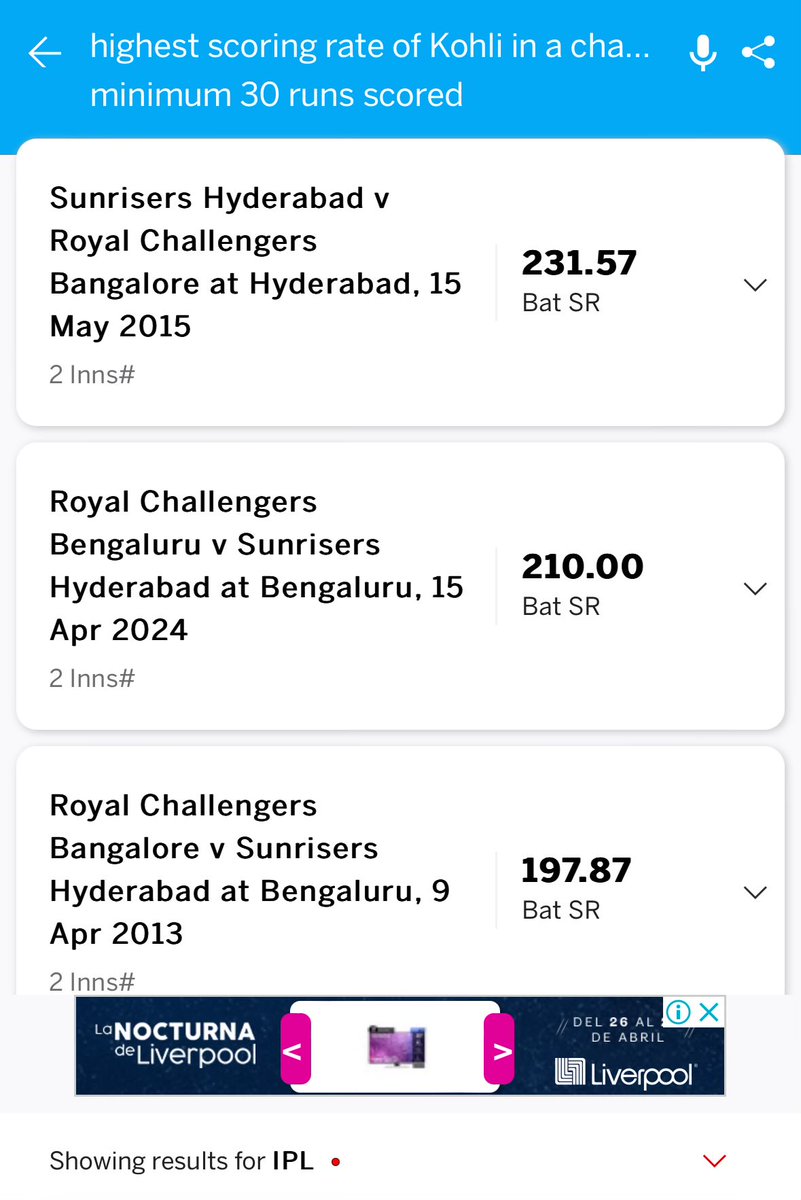

tl;dr, LLMs as Bayesian learners: given a prompt, the model looks for something close to it in its training set, then treats the prompt as new evidence and computes a posterior from that evidence. This posterior distribution is what is used to generate the new text. (Vishal Misra)
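The Bayesian reading can be illustrated with a toy discrete example. This is a sketch under loud assumptions, not Misra's actual formalism: the concept names, priors, likelihoods and per-concept token probabilities below are all invented. The prior stands in for what training left behind, each prompt example multiplies in a likelihood, so P(c | examples) ∝ P(c) · ∏ P(xᵢ | c), and the next-token distribution is the posterior-weighted mix P(token | prompt) = Σ_c P(c | examples) · P(token | c).

```python
# Hypothetical "concepts" the model might have picked up in training,
# with invented prior weights.
PRIOR = {"sentiment": 0.5, "translation": 0.3, "arithmetic": 0.2}

# Invented P(example | concept): how well each prompt example fits each concept.
LIKELIHOOD = {
    "2+2=4": {"sentiment": 0.01, "translation": 0.02, "arithmetic": 0.9},
    "3+5=8": {"sentiment": 0.01, "translation": 0.02, "arithmetic": 0.9},
}

# Invented P(next token | concept) for a few candidate continuations.
PREDICT = {
    "sentiment": {"positive": 0.7, "bonjour": 0.1, "7": 0.2},
    "translation": {"positive": 0.1, "bonjour": 0.8, "7": 0.1},
    "arithmetic": {"positive": 0.05, "bonjour": 0.05, "7": 0.9},
}

def posterior(prior, evidence):
    """Multiply the prior by each example's likelihood, then normalize."""
    unnorm = dict(prior)
    for example in evidence:
        for concept in unnorm:
            unnorm[concept] *= LIKELIHOOD[example][concept]
    z = sum(unnorm.values())
    return {concept: p / z for concept, p in unnorm.items()}

def predictive(post):
    """Mix each concept's next-token distribution, weighted by its posterior."""
    out = {}
    for concept, p_concept in post.items():
        for token, p_token in PREDICT[concept].items():
            out[token] = out.get(token, 0.0) + p_concept * p_token
    return out

zero_shot = predictive(posterior(PRIOR, []))
few_shot_post = posterior(PRIOR, ["2+2=4", "3+5=8"])
few_shot = predictive(few_shot_post)

print(zero_shot)      # no examples: "positive" is the most likely next token
print(few_shot_post)  # two arithmetic examples: "arithmetic" dominates
print(few_shot)       # so "7" becomes the most likely next token
```

With no examples the prior favours "sentiment", so "positive" wins; two arithmetic examples are enough evidence to shift almost all posterior mass to "arithmetic", which in turn reshapes the next-token distribution. That is the few-shot effect in miniature.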

It's so crazy…
